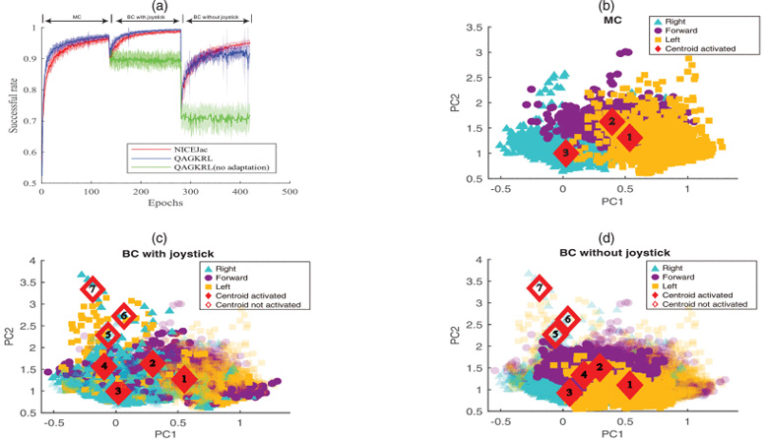

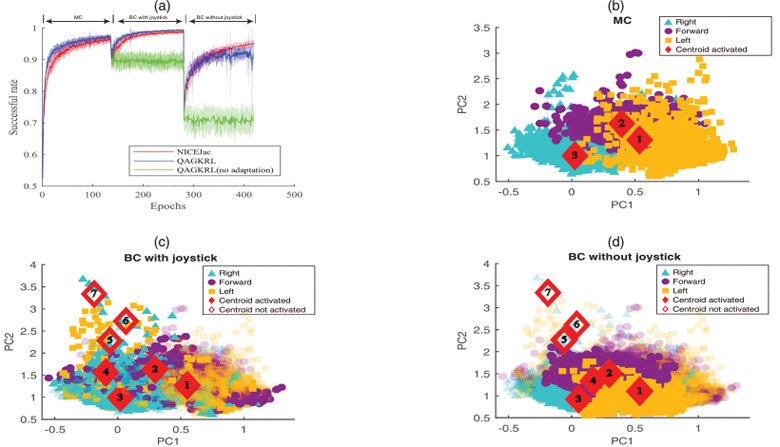

Neuroprostheses allow paralyzed people to control external devices purely through neural activity. Supervised learning decoders are recalibrated or re-fitted to the discrepancy between the desired target and the decoder's output, and this correction may override the user's own intention. A reinforcement learning (RL) decoder instead lets users actively adjust their brain patterns through trial and error, which better reflects the subject's intention. The computational challenge is to establish a new state-action mapping quickly, before the subject becomes frustrated. The recently proposed quantized attention-gated kernel reinforcement learning (QAGKRL) algorithm searches for the optimal nonlinear neural-action mapping in a Reproducing Kernel Hilbert Space (RKHS). However, because it considers all past data in the RKHS, it is less efficient and less sensitive to new neural patterns that emerge during brain control. In this paper, we propose a clustering-based kernel RL algorithm. As new neural patterns emerge, they are clustered to represent the novel knowledge acquired in brain control. The current neural data activate only the nearest subspace in the RKHS, which makes decoding more efficient, and the dynamic clustering makes the algorithm more sensitive to new brain patterns. We test our algorithm on both synthetic and real-world spike data. Compared with QAGKRL, our algorithm adapts to new knowledge in brain control more quickly and with lower computational complexity.
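The clustering idea described above can be illustrated with a small sketch. This is not the authors' algorithm. The names (`ClusteredKernelRL`, `cluster_radius`, `quant_radius`) are hypothetical, and the following are all assumptions made for illustration: a new cluster is opened when a sample lies farther than `cluster_radius` from every cluster anchor; each cluster uses QKLMS-style quantization of its kernel centers; and the update uses the immediate reward without bootstrapping or attention gating. Each action's value is a Gaussian-kernel expansion over the centers of the nearest cluster only, so a query costs time proportional to that cluster's size rather than to all past data.

```python
import math
from typing import List, Optional, Sequence, Tuple


def sq_dist(x: Sequence[float], y: Sequence[float]) -> float:
    return sum((a - b) ** 2 for a, b in zip(x, y))


def gaussian_kernel(x: Sequence[float], y: Sequence[float], sigma: float) -> float:
    # Gaussian (RBF) kernel; the per-action value functions live in its RKHS.
    return math.exp(-sq_dist(x, y) / (2.0 * sigma ** 2))


class Cluster:
    """One RKHS subspace: quantized centers with one coefficient per action."""

    def __init__(self, anchor: Sequence[float], n_actions: int):
        self.anchor = list(anchor)  # assumption: anchored at the sample that opened it
        self.centers: List[List[float]] = []
        self.coefs: List[List[float]] = []
        self.n_actions = n_actions

    def q_values(self, x: Sequence[float], sigma: float) -> List[float]:
        q = [0.0] * self.n_actions
        for c, w in zip(self.centers, self.coefs):
            k = gaussian_kernel(x, c, sigma)
            for a in range(self.n_actions):
                q[a] += w[a] * k
        return q


class ClusteredKernelRL:
    """Hypothetical clustering-based kernel RL decoder (illustrative only)."""

    def __init__(self, n_actions: int, sigma: float = 1.0, eta: float = 0.5,
                 cluster_radius: float = 2.0, quant_radius: float = 0.1):
        self.n_actions = n_actions
        self.sigma = sigma
        self.eta = eta                        # learning rate
        self.cluster_radius = cluster_radius  # assumption: open a new cluster beyond this distance
        self.quant_radius = quant_radius      # QKLMS-style quantization size within a cluster
        self.clusters: List[Cluster] = []

    def _nearest(self, x: Sequence[float]) -> Tuple[Optional[Cluster], float]:
        if not self.clusters:
            return None, math.inf
        best = min(self.clusters, key=lambda c: sq_dist(x, c.anchor))
        return best, math.sqrt(sq_dist(x, best.anchor))

    def act(self, x: Sequence[float]) -> int:
        # Only the nearest cluster is evaluated, not every past sample.
        cl, _ = self._nearest(x)
        if cl is None:
            return 0
        q = cl.q_values(x, self.sigma)
        return max(range(self.n_actions), key=lambda a: q[a])  # greedy; ties -> lowest index

    def update(self, x: Sequence[float], action: int, reward: float) -> float:
        cl, d = self._nearest(x)
        if cl is None or d > self.cluster_radius:
            # A new neural pattern: open a new cluster (a new subspace) for it.
            cl = Cluster(x, self.n_actions)
            self.clusters.append(cl)
        # Assumption: error against the immediate reward, no bootstrapped next-state value.
        err = reward - cl.q_values(x, self.sigma)[action]
        if cl.centers:
            j = min(range(len(cl.centers)), key=lambda i: sq_dist(x, cl.centers[i]))
            if math.sqrt(sq_dist(x, cl.centers[j])) <= self.quant_radius:
                cl.coefs[j][action] += self.eta * err  # merge into the nearby center
                return err
        # Otherwise add x as a new center inside the selected cluster.
        w = [0.0] * self.n_actions
        w[action] = self.eta * err
        cl.centers.append(list(x))
        cl.coefs.append(w)
        return err
```

For example, training on two well-separated input patterns, with a reward of +1 for the correct action and -1 otherwise, produces one cluster per pattern. A third, distant pattern opens a third cluster on its first update instead of being absorbed into an existing expansion, which is the kind of sensitivity to new patterns that the dynamic clustering is meant to provide.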