Over the past 30 years, robots have become standard fixtures in operating rooms. During brain surgery, a NeuroMate robot may guide a neurosurgeon to a target within the pulsing cortex. In orthopedics, a Mako robot sculpts and drills bone during knee and hip replacement surgery. Dominating the general surgery field is the da Vinci robot, a multiarmed device that allows surgeons to conduct precise movements of tools through small incisions that they could not manage with their own hands.

But if their novelty has worn off, robotics’ promise has not. As technology matures, so does the ability of surgical robots, which are expanding their surgical repertoire in ways that allow surgeons to perform completely new procedures. “You can think of robotics as a way of projecting a person into a space and giving them capabilities they wouldn’t otherwise have,” says Gregory Hager, an expert in computer vision and robotics at Johns Hopkins University, Baltimore, Maryland (Figure 1). “Robotics is an amplifier of dexterity; it’s an amplifier of access; it’s an amplifier of ability to apprehend what you are doing,” he adds.

The multimillion-dollar da Vinci robot is widespread in the United States, assisting with hundreds of thousands of surgeries each year. As a surgeon manipulates grippers at a video-game-like console, instruments can be moved with seven degrees of freedom, and more than two instruments may be working at the same time. A surgeon’s movements may also be scaled down to make ever-smaller manipulations, and hand tremors can be filtered out to improve accuracy. These robots now constitute standard practice for urological and gynecological procedures, accounting, for example, for 87% of prostate removals in 2013. Similar robots are under development, including the SurgiBot made by TransEnterix, which is smaller and cheaper than the da Vinci and expected to be approved for use in the United States sometime in 2016.
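The motion scaling and tremor filtering described above can be illustrated with a minimal sketch. It assumes a fixed scale factor and a first-order low-pass filter (an exponential moving average). The class name, parameter values, and choice of filter are hypothetical and are not taken from the da Vinci or any other commercial system.

```python
from dataclasses import dataclass, field


@dataclass
class MotionConditioner:
    """Scales hand displacements and smooths them with a first-order low-pass filter."""

    scale: float = 0.2  # hypothetical: 5 mm of hand travel becomes 1 mm of tool travel
    alpha: float = 0.1  # hypothetical smoothing factor in (0, 1]; smaller means smoother
    _state: list = field(default_factory=lambda: [0.0, 0.0, 0.0])

    def step(self, hand_delta):
        """Take one (dx, dy, dz) hand displacement in mm and return the tool displacement."""
        out = []
        for i, d in enumerate(hand_delta):
            # The moving average attenuates fast oscillations such as tremor
            # while letting slow, deliberate motion pass through.
            self._state[i] += self.alpha * (d - self._state[i])
            out.append(self.scale * self._state[i])
        return tuple(out)


# Usage: a steady 5 mm hand motion settles toward a 1 mm tool motion,
# while a rapid back-and-forth jitter of the same axis is strongly damped.
steady = MotionConditioner()
for _ in range(200):
    steady_out = steady.step((5.0, 0.0, 0.0))

jitter = MotionConditioner()
for n in range(200):
    jitter_out = jitter.step((1.0 if n % 2 == 0 else -1.0, 0.0, 0.0))
```

The trade-off in this kind of filter is lag: a smaller `alpha` removes more tremor but makes the tool respond more slowly to intended motion.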

Although it remains controversial whether robot-assisted surgery outcomes are better than procedures accomplished by human hand, the clamor for robotic surgeries continues. Hospitals boast of their surgical robots, hoping to attract patients and, eventually, lower costs. And surgeons and engineers are creating innovative uses for robots in the operating room. New developments in the research stages allow superhuman sensing, such as seeing into organs during a procedure or transmitting subtle touch signals to the surgeon even though his or her hands are not directly probing the tissue. Redesigns of robotic surgical instruments may expand their reach, including tentacle needles that can navigate the complicated twists and turns of the lungs’ bronchi or remote-controlled minirobots that can be let loose into the eyeball to deliver drugs. Semiautonomous robots may release surgeons from the tedium of small tasks, such as suturing, or even enable remotely controlled surgeries in space.

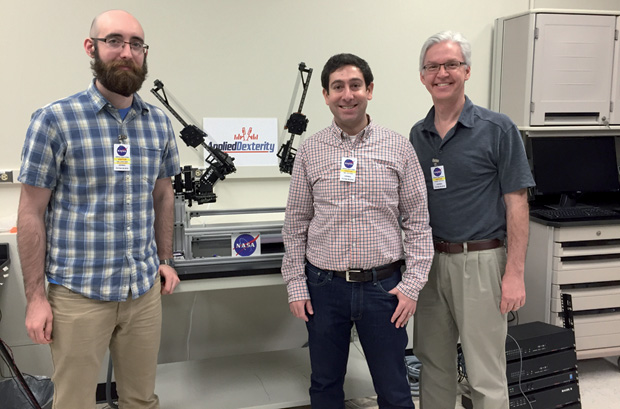

These developments do not threaten the demand for surgeons, experts say. “It’s going to be a long, long road before you see a surgical robot able to make the right sort of tactical decisions that surgeons do, as opposed to simply deploying some capability in the right place,” according to Hager. And, adds Blake Hannaford, director of the Biorobotics Laboratory at the University of Washington in Seattle (Figure 2), “Everybody wants a professional in charge of your surgery at all times. Our view is how to keep the surgeon in control but extend their capabilities through robotics.”

Surgical Superpowers

Surgeons never operate blind. They work, sometimes for hours, to completely visualize their target before operating on it. This means that, before deploying robots to reach out-of-the-way places, surgeons need ways to image these recesses, usually through an endoscope. The current design of the da Vinci robot provides a stereoscopic display system, which allows depth perception of a surgeon’s field of view, but Hager and others want to bring more information into the picture. “I think, fundamentally, surgery is spatial manipulation, and so you want to give people the best possible spatial awareness,” he says.

Currently, a surgeon might work with two separate views—say, video from an endoscope on one monitor and an ultrasound or computed tomography (CT) scan on another—which necessitates combining the information from both images in his or her own head. But Hager’s group is developing a system that registers and merges these different imaging modalities into a single view so as to easily visualize the anatomical structures. This allows, for example, images of a liver tumor to be projected onto the organ’s surface, and these images change as the position of the endoscope or surgeon’s tool moves. This is the first step toward the longer-term goal of providing something similar to a Google map of the body. Hager also cofounded Clear Guide Medical, which recently received U.S. Food and Drug Administration approval for a system that can morph a preoperative CT scan into a live ultrasound image, accounting for changes in the body’s shape as it is pressed upon during surgery.
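One ingredient of such a merged view is mapping a point found in a scan onto the endoscope image. The sketch below assumes that a rigid registration between the scan frame and the camera frame is already known and that the endoscope behaves like an ideal pinhole camera. The function names and numbers are illustrative only, not part of Hager's system, and the tissue deformation mentioned above is not modeled.

```python
def transform_point(rotation, translation, point):
    """Apply a rigid transform (3x3 rotation as row lists, 3-vector translation) to a 3D point."""
    return tuple(
        sum(rotation[r][c] * point[c] for c in range(3)) + translation[r]
        for r in range(3)
    )


def project_to_pixel(point_cam, fx, fy, cx, cy):
    """Pinhole projection of a camera-frame point (camera looks down +z) to pixel coordinates."""
    x, y, z = point_cam
    if z <= 0:
        return None  # the point is behind the camera, so there is nothing to draw
    return (fx * x / z + cx, fy * y / z + cy)


# Usage with made-up values: a tumor landmark given in scan coordinates (mm),
# a camera 100 mm away along its viewing axis, and hypothetical intrinsics.
identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
tumor_in_scan = (10.0, -5.0, 0.0)
tumor_in_camera = transform_point(identity, (0.0, 0.0, 100.0), tumor_in_scan)
pixel = project_to_pixel(tumor_in_camera, fx=500.0, fy=500.0, cx=320.0, cy=240.0)
print(pixel)  # (370.0, 215.0)
```

Recomputing the transform whenever the endoscope moves is what would keep the overlay attached to the organ in a live view.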

Fusing these images not only informs the surgeon but can also govern a robot. Hager foresees that, in ten years, robots will be able to interpret images and automatically select anatomic structures within them. “It’s not going to be perfect, and that’s why you’ll still need a surgeon in the loop,” he cautions. A surgeon will tell the robot which anatomic structures to find, check that they were located correctly, and set up no-fly zones around vital structures, such as the carotid artery. Predicting when such automated visualization will enter operating rooms is difficult, however, given the time needed to commercialize the technology.
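A no-fly zone can be pictured as a region the tool tip is never allowed to enter. As a minimal sketch, assume each protected structure is approximated by a sphere with a safety margin; real anatomy would need richer shapes, and the function name, coordinates, and margin below are hypothetical.

```python
import math


def violates_no_fly_zone(tool_tip, zones, margin_mm=0.0):
    """Return True if the tool tip lies inside any spherical zone plus a safety margin.

    zones is a list of (center, radius_mm) pairs, with centers given as (x, y, z) in mm.
    """
    return any(
        math.dist(tool_tip, center) < radius + margin_mm
        for center, radius in zones
    )


# Usage: one hypothetical 5 mm sphere around a vessel, with a 2 mm margin.
zones = [((0.0, 0.0, 0.0), 5.0)]
print(violates_no_fly_zone((3.0, 0.0, 0.0), zones, margin_mm=2.0))   # True
print(violates_no_fly_zone((10.0, 0.0, 0.0), zones, margin_mm=2.0))  # False
```

A controller could run a check like this on every commanded position and refuse any motion that returns True, leaving the surgeon to decide where the zones go.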

Surgeons also rely on their sense of touch while operating, but robotic surgeries have largely taken that away. The da Vinci robot can transmit large-scale forces, such as when surgical instruments collide. But the fine touch discrimination that helps a surgeon palpate an organ for a tumor or know how hard to pull a suture is lost. Visual feedback compensates for this to some extent, with surgeons learning to feel with their eyes. Researchers are also looking for ways to return some tactile, or haptic, feedback to surgeons operating at robotic consoles.

“For some procedures, it may be that there is nothing that can replace the natural feeling of touching something with your fingers,” explains Allison Okamura, a haptics researcher at Stanford University, Palo Alto, California (Figure 3). Providing haptic feedback means estimating the interaction forces between instruments and tissue, then transmitting a force signal to the surgeon’s hands. Several groups, including robot-making companies, are looking for ways to do this noninvasively. But pushing on the surgeon’s fingers with small motors runs the risk of causing unintentional movements of the surgeon’s hands. As an alternative, Okamura and her team have found that lightly stimulating the skin can be a useful substitute. “Locally stretching the skin, rather than pushing wholesale on the hands, can trick the user into feeling like they’re feeling large forces,” she says (Figure 4).

Boldly Going Where No Surgeon Has Gone Before

Beginning in the 1990s, robots lent a helping hand in bone shaping during orthopedic surgeries, providing standardization and precision beyond that achieved by handheld tools that drill, hammer, and screw. Now, even more precise robots participate in procedures in which there is no margin for error, such as work near the spinal cord or drilling through the skull to place a cochlear implant for the hearing impaired.

Others, such as needle robots, allow minimally invasive access to new territories within the body. One tentacle-like needle robot developed at Vanderbilt University can wend its way through the lung’s bronchi or through nasal passages to reach tumors ensconced at the base of the brain. Okamura and her team have developed a different needle robot that is so minimally invasive it is no longer considered surgery because the tiny hole it leaves heals itself. The needle is extremely thin with a flexible tip yet rigid enough to burrow through some tissue. A robot inserts the needle and spins it, monitors how it bends as it encounters different tissue types, and changes trajectories as needed. This give-and-take guides the needle along a path to reach a target tumor without hitting the organ’s blood vessels. “It’s kind of like parallel parking a car,” Okamura notes.

Other researchers are developing microrobots that can be guided to their targets by magnetic fields, which could result in more precise applications of drugs. For example, to treat eye diseases such as age-related macular degeneration, ophthalmologists currently inject medicine into the front of the eyeball. The drug then diffuses to the back of the eye where it acts on the retina. “With microrobotics you can carry drugs to the source of the problem, which means you need much less drug,” says Bradley Nelson, a professor of robotics and intelligent systems at ETH Zürich, Switzerland (Figure 5). Nelson and his team have developed a cylindrical microrobot that is about one-third of a millimeter in diameter and one millimeter long, composed of magnetic material such as iron, and coated with biocompatible polymers. To the naked eye, it resembles an innocuous whisker or piece of dust (Figure 6).

These microrobots can be loaded with a drug, then directed to enter the eyeball by applying magnetic fields outside the head. The guidance system involves eight different electromagnets, through which electric currents must be precisely controlled via algorithms developed by the robotics community. Once the microrobots reach their retinal target, the drug begins to diffuse. So far, the microrobots have been tested only in animals, but a similar system has been used to guide tiny catheters into the heart to ablate arrhythmia in seven patients during a clinical trial in Switzerland.

Nelson is also developing microrobots that can change shape as they navigate different environments, in ways similar to how certain parasites enter into the bloodstream in a stumpy form but then take on a more streamlined shape to penetrate tissue. Such shape-shifting can also be found in so-called origami robots, including one recently demonstrated by researchers from the Massachusetts Institute of Technology at the International Conference on Robotics and Automation in Stockholm. Designed to retrieve small batteries from the digestive tract—a problem that occurs when young children swallow them—the robot was introduced in capsule form into an artificial digestive tract, where it then unfolded and was guided by magnetic fields to retrieve the batteries. From there, the idea is to guide the robot and its cargo to the large intestine for excretion, thus avoiding the need for surgery (see Ingestible Origami Robot at MIT News). “These are kind of crazy ideas, but they’re fun to figure out how to make,” Nelson says.

I, Robot

Current robots in the operating room are slaves to a surgeon master: every movement a da Vinci robot makes results from a human commanding it to do so. But automation looms on the horizon, with robots that can select and execute a plan without a surgeon directing every step of the way. This could free surgeons from tedious piecework such as suturing or tumor ablation.

In May 2016, researchers at Johns Hopkins University published a report on the capabilities of their Smart Tissue Autonomous Robot (STAR), which sewed the two pieces of a severed pig intestine back together [1]. Though it took longer than surgeons to perform the procedure, the robot mended the intestine satisfactorily and, in some ways, better than humans. Surgeons, however, had to intervene to adjust positioning for nearly half of the sutures.

Likewise, a semiautonomous robot called RAVEN can theoretically be used to remove brain tumor cells identified by fluorescent tumor paint [2]. RAVEN is a small, tabletop robot originally designed for battlefield surgeries (see Surgical Robots in Space: Sci-Fi and Reality Intersect from IEEE Pulse). So far, RAVEN has only been involved in simulated tumor ablations, but the idea is that the robot will image the tumor and come up with a plan. A surgeon would then approve the plan, or some fraction of it, leaving the robot to painstakingly ablate the agreed-upon fluorescent cells. This process would be repeated iteratively until the tumor is gone, leaving healthy brain tissue unscathed (Figure 7). “The surgeons will only approve the autonomy as they feel comfortable with the system,” notes Hannaford, who helped develop the RAVEN robot. “We want to make sure the surgeon’s judgment is still applied all the time.”
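The plan, approve, and ablate cycle described above can be sketched as a simple loop. This is an illustration only: the work in [2] uses a behavior tree, whereas the code below swaps in a plain loop, and the function names, the callback interfaces, and the representation of tumor cells as grid coordinates are all assumptions made for the example.

```python
def supervised_ablation(tumor_cells, propose, approve, ablate, max_rounds=100):
    """Repeat plan -> surgeon approval -> ablation until no tumor cells remain.

    propose(remaining) returns the robot's plan as a list of cells.
    approve(plan) returns the cells the surgeon accepts (all, some, or none).
    ablate(cell) carries out the ablation of one approved cell.
    Returns the cells still remaining and the number of completed rounds.
    """
    remaining = set(tumor_cells)
    rounds = 0
    while remaining and rounds < max_rounds:
        plan = propose(remaining)
        # Only cells that are both approved and still present are ever ablated.
        approved = set(approve(plan)) & remaining
        if not approved:
            break  # the surgeon approved nothing, so the robot stops
        for cell in sorted(approved):
            ablate(cell)
        remaining -= approved
        rounds += 1
    return remaining, rounds


# Usage with stand-in callbacks: the robot proposes up to three cells per round
# and the simulated surgeon approves only the first two of each plan.
cells = {(0, 0), (0, 1), (1, 0), (1, 1), (2, 2)}
log = []
remaining, rounds = supervised_ablation(
    cells,
    propose=lambda rem: sorted(rem)[:3],
    approve=lambda plan: plan[:2],
    ablate=log.append,
)
print(remaining, rounds, len(log))  # set() 3 5
```

Keeping the approval step inside every round reflects the point Hannaford makes: the robot acts only on what the surgeon has accepted.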

Autonomous robots could be particularly useful for teleoperations in space conducted by earthbound surgeons, Hannaford says. The great distances data need to travel between Earth and space create significant delays, resulting in as much as a five-second lag between Earth and the International Space Station, for example. During that interval, however, an automated robot could continue to work.

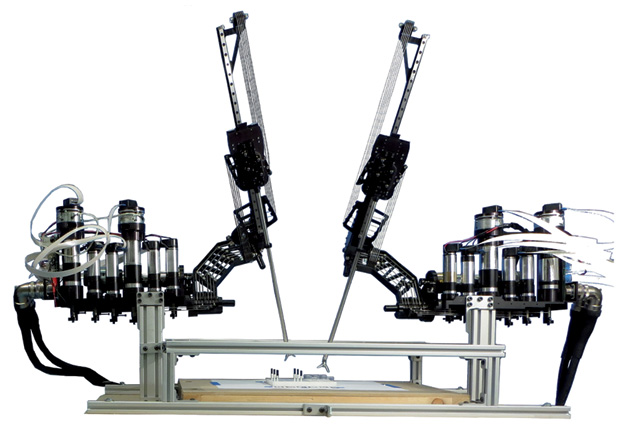

The first robotic space surgeries will likely be on mice, not humans. On the International Space Station, astronauts conduct many experiments, including one that dissects mice to understand the physiological effects of living in space. Doing the dissections remotely, with people on Earth controlling the robots, would free up already time-strapped astronauts. According to simulations run by Hannaford and colleagues, RAVEN copes well with five-second time lags, and this spring researchers with Applied Dexterity, a company cofounded by Hannaford, demonstrated the RAVEN robot to NASA at the Johnson Space Center in Houston (Figure 8). “We got a lot of excitement about it from them,” Hannaford says. “If we put a little robot up there and operate it from the ground to do most of this dissection task, then the mission can get more science done.”

References

- [1] A. Shademan, R. S. Decker, J. D. Opfermann, S. Leonard, A. Krieger, and P. C. Kim, “Supervised autonomous robotic soft tissue surgery,” Sci. Transl. Med., vol. 8, no. 337, p. 337ra64, May 2016.

- [2] D. Hu, Y. Gong, B. Hannaford, and E. J. Seibel, “Semi-autonomous simulated brain tumor ablation with Raven II surgical robot using behavior tree,” in Proc. IEEE Int. Conf. Robotics Automation, May 2015, pp. 3868–3875.