In a blog post in January 2014, Google unveiled one of its latest forays into the health market—a smart contact lens for diabetics. It was sleek and appealingly futuristic, with a minute microchip equipped with tiny glucose sensors, embedded in a soft, biocompatible lens material. Already, the company said, the prototype could measure tear glucose as often as once per second, and it may someday include tiny LED lights to signal warnings to the wearers when their blood sugar rises or falls to dangerous levels.

The company went on to partner with Novartis, earn patents on the technology, and appear in headlines from the BBC to The New York Times. But while Google is one of the most visible examples of researchers trying to harness the eye for the benefit of the rest of the body, it’s far from the only one.

In fact, the eye, with its visible blood vessels, close connection to the brain, and unexpectedly complex tear fluid, offers enormous potential for diagnostics and screening for everything from Alzheimer’s disease and multiple sclerosis to cancer, heart disease, and even certain parasitic infections. It’s not a new idea per se, but, until recently, it was held back by inconvenient technology or insufficient science. Over the past few years, however, this landscape has begun to shift, and now, in labs around the world, the search is on for the tools and knowledge that will allow researchers to someday turn the eye into as common a clinical tool as the blood pressure cuff or a vial of blood.

Get a Move On

Eye movements are the most frequent voluntary action we make in our waking day, occurring more times per second than our hearts beat. The predominant type of this movement is the saccade—the quick, ballistic action the eyes make as they move from point to point. These require a slew of brain areas working in tandem, including motor, memory, sensory, and executive and attention regions of the brain. As a consequence, dysfunction in these areas, whether from autism, Alzheimer’s, or concussion, can turn up in the form of telltale differences in a person’s gaze.

Indeed, so intimate is this eye–brain connection that a scientist who knows how to ask the right questions can pinpoint the location of a problem in the brain through eye movement alone. For instance, patients with Huntington’s disease can have trouble with tasks that require them to look at a location opposite a target or follow a target that jumps to and fro—but they do well in certain visual attentional tasks, hinting at problems in the frontal lobes of the brain but not the parietal.

Eye tracking has been a longtime staple in certain research areas like neuroscience, but it hasn’t translated well into clinics yet, due in part to cumbersome technology and tedious “following-the-target” tasks. David Crabb, a visual scientist at City University London, England, studies degenerative eye diseases in aging populations. Even as recently as ten years ago, he says, the state of the art involved cameras positioned close to the eyes in a clunky, head-mounted device. “You can imagine the fun and games we had with elderly people, trying to clamp that kind of thing on their head,” he says.

But thanks to ever-shrinking cameras and developments in user-interface technology, better tools have evolved quickly. The eye trackers of today are much less intrusive and faster for both subject and researcher. “It can almost be done in a surreptitious way, once calibrated,” Crabb says.

Last year, he published a proof-of-concept experiment in the journal Frontiers in Aging Neuroscience, describing a free-viewing system in which subjects simply watched television clips while a camera recorded their eyes from a distance. It was the next best thing to natural, aside from the chin rest that held them at precisely 60 cm from the screen. Yet it provided a wealth of eye-path data to which his team was able to apply computational modeling and machine learning, assembling viewing patterns that distinguished healthy subjects from those with a neurodegenerative disease (albeit a traditionally ocular one, glaucoma) with 76% sensitivity.
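For readers unfamiliar with the term, sensitivity is the share of people who truly have a condition that a test correctly flags, and specificity is the matching share of healthy people that it correctly clears. The short Python sketch below shows the arithmetic. The counts are hypothetical, chosen only so that sensitivity works out to 76%; they are not the study's data, and the function names are illustrative.

```python
def sensitivity(true_positives, false_negatives):
    """Fraction of people with the condition that the test flags."""
    return true_positives / (true_positives + false_negatives)


def specificity(true_negatives, false_positives):
    """Fraction of healthy people that the test correctly clears."""
    return true_negatives / (true_negatives + false_positives)


# Hypothetical counts for illustration only (not taken from any study).
sens = sensitivity(true_positives=19, false_negatives=6)   # 19 of 25 patients flagged
spec = specificity(true_negatives=27, false_positives=3)   # 27 of 30 controls cleared

print(f"sensitivity = {sens:.0%}, specificity = {spec:.0%}")
```

A sensitivity figure on its own says nothing about false alarms, which is why the two numbers are usually reported together.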

Meanwhile, at the University of Southern California, another group, led by Laurent Itti, has reported on a similar free-viewing and modeling system that it used to successfully differentiate among children with attention deficit hyperactivity disorder (ADHD), children with fetal alcohol syndrome, and healthy children, as well as to distinguish Parkinson’s patients from healthy controls. And neurosurgeon Uzma Samadani, then at the New York University School of Medicine, now at the University of Minnesota, has developed an even simpler variation on the idea for traumatic brain injury and concussion, both notoriously difficult conditions to objectively quantify and diagnose. Her approach uses a setup that moves a film clip around a larger screen, quantifying how well a person’s eyes coordinate and follow the clip. It is a task, explains Samadani, that up to 90% of patients with even mild brain injuries can have trouble executing.

It’s not only the cameras that allow for this to happen, however: modern advances in computation have also been crucial to the success of such work. “At the beginning, we thought maybe those kids with ADHD were going to be more sensitive to motion, or maybe the Parkinson’s patients were going to be color blind,” Itti says. “But there is no simple story like that.” Instead, he explains, it was only through using combinations of very subtle differences in many measurements, all plugged into complex models, that the team was able to distinguish the patients. “But there is not a single [one] of those measurements that is grossly abnormal,” he says.

The teams are now working on validating their findings with larger data sets and making their tools even more accessible and portable. “We want to be on a device that’s already in everybody’s pocket,” says Samadani, who has since cofounded the start-up Oculogica [1], with the hope of seeing her tool field-side at sporting events or on the front, where military personnel might need a clear and rapid brain-injury measure. Eye tracking, she says, fits especially well with current medical trends that bring health care delivery and monitoring out of the hospitals and doctors’ offices. “People want answers wherever they are, they want to be treated more conveniently, and they want to have access 24/7,” she says.

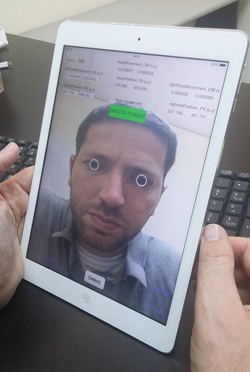

Eye tracking’s noninvasiveness lends itself particularly well to that idea of portable monitoring and diagnostics. And indeed, Israeli eye-tracking tech company Umoove has already developed eye-tracking software specifically for smartphone and tablet use, with no need for any additional technological accoutrements. The company is now collaborating with universities including Yale, the University of Pennsylvania, and Duke to develop compatible tests for various neurological conditions, including concussion, autism, and Alzheimer’s disease (Figure 1, right).

Figure 1, right: Umoove’s application uses forward-facing cameras and minimized processing demands to create a mobile eye-tracking tool with no additional equipment. (Photo courtesy of Umoove.)

In time, researchers believe that this type of approach could be routinely used to monitor the brain, by doctors in telehealth settings and possibly also by patients themselves. Many of the conditions that eye tracking seems to be able to tap are difficult to diagnose but important to catch, like autism and neurodegenerative disease. Something that’s inexpensive, easy to use, and widely available could help catch exactly these problems in time to begin treatment sooner rather than later and then even track the effects of that intervention.

“Whether it’s for a patient taking medication for Parkinson’s while the doctor can monitor him at home and see the data and see how he’s doing, or me at home, and playing football, and getting hit on the head and able to test on the field if I have a concussion and if I should stop playing and go to the doctor, and so on,” says Umoove Chief Executive Officer Yitzi Kempinski. “It’s taking this unbelievable knowledge of the eyes as a window to the brain and suddenly making that accessible.”

Through the Looking Glass

Nowhere is the idea of the eye as a window more apt than in the context of retinal imaging. The eye is the only place in the body where, with not much more than a light and a microscope, one can literally peer through a translucent lens and see a network of blood vessels—and, with more specialized equipment, see even deeper into single cellular layers of brain tissue. And it is genuine brain tissue in the eye: during embryonic development, the retina and optic nerve extend out from the diencephalon, the same area that yields brain regions like the thalamus and hypothalamus.

The phenomenal utility of this window has not gone unappreciated. Over the years, researchers have linked abnormalities in the tiny veins and arteries of the retina to diabetes, stroke, and cardiovascular disease. Neurodegenerative diseases like multiple sclerosis, Parkinson’s, Alzheimer’s, and recently even schizophrenia have been similarly tied to changes in thickness of the retinal nerve fiber layer (the axonal projection of the innermost retinal layer), retinal cell death, and, in the case of Alzheimer’s, the accumulation of telltale amyloid proteins. Even certain infections can show up in the retina: in a report last year, a team led out of the Malawi–Liverpool–Wellcome Trust Clinical Research Program in Africa reported the similarities in the vascular anatomy and progression of malaria in the retina and brain. The findings, the authors concluded, demonstrated the viability of the eye in helping researchers study and ultimately better treat pediatric cerebral malaria, a terrible form of malaria that can sometimes disable or kill children but is poorly understood due to the brain’s inaccessibility.

There are three main imaging technologies at play in this kind of work. The fundus camera is basically a microscope-and-camera that uses a flash of light to image the eye’s blood vessels; it’s a familiar tool to anyone who has ever visited an ophthalmologist. The scanning laser ophthalmoscope (SLO) does the same thing, but with a laser and the ability to cover more retinal real estate. Optical coherence tomography (OCT) is the newest of these tools and has been roundly hailed as a revolutionary technology. It performs a sort of noninvasive retinal ultrasound to visualize the layers of tissue in the back of the eye, down to a resolution of as little as 3 μm. The latest versions of these tools are not only much faster and higher-resolution than their earlier versions, but they’re also being increasingly combined with one another to create powerful multimodal technologies with better depth and range. The brand-new OCT-angiography (OCT-A), for instance, picks up blood flow in even the smallest blood vessels, the ones generally too minute and lying too deep to show up on a fundus camera image but which might be vulnerable to some of the earliest effects of a disease (Figure 2).

![Figure 2: Newer technologies that blend viewing tools like OCT with SLO allow researchers to scan across the retina and down into the different layers of the eye. This image was taken using a Heidelberg SPECTRALIS OCT device. [Image courtesy of the VAMPIRE Project (Universities of Edinburgh and Dundee, United Kingdom).]](https://www.embs.org/wp-content/uploads/2015/11/fischer02-2476575.jpg)

“Imaging technology has come of age,” says Baljean Dhillon, a clinical ophthalmologist at the University of Edinburgh, Scotland, United Kingdom. “And ophthalmology has been really lucky and is at the forefront of this kind of inquiry, where, in histological detail almost, we can now see the living retina in a safe and noninvasive way and capture enormous quantities of data.”

That kind of power poses a challenge, however, as researchers work to confirm the viability and usefulness of their biomarkers. Right now, as Australian researchers note in a 2011 review of the data, even some of the best-studied eye–body associations, like the connection between retinal blood-vessel size and heart-disease risk, have been limited and “disappointing” when it comes to improving existing non–eye-based risk measures [2]. The retina offers so many variables that it’s been difficult for researchers to find the best permutations. It’s a little like genetics in a way, because even as researchers develop these biomarkers, more data become accessible.

“It’s a data-processing problem,” explains Tom MacGillivray, also at the University of Edinburgh. Even with the simpler blood-vessel analyses, there’s the size of the vessels to consider, the geometry of their twists and turns, microaneurysms, bleeds, scarring, and new swaths of expanded retinal land to explore thanks to SLO.

The solution, as is so often the case, lies with very large studies. For retinal analysis, that means being able to analyze the images automatically. Earlier efforts focused on algorithms that could automatically detect and measure retinal features; the focus is now shifting to connecting those abilities to clinically significant outcomes. “We can measure lots of things, but it’s [about] how we use that information, how do we start to piece together the jigsaw that will deliver us the useful bits of information that might be related to CVD (cardiovascular disease) or that might be related to dementia,” MacGillivray says. “Do we somehow summarize all the information in someone’s eye in some clever way yet to be determined? Or should we be looking at particular regions of people’s eyes, should we be focusing on the main blood vessels that we see, should we be focusing on the small vessels?”

Both Dhillon and MacGillivray are part of the Vascular Assessment and Measurement Platform for Images of the Retina (VAMPIRE) Project, a ten-institute effort led by Scotland’s Universities of Dundee and Edinburgh. The effort centers around semiautomatic software created to explore exactly these questions. The system so far encompasses multiple algorithms and computational filters to detect features like the size and fractal geometry of veins and arteries both within and outside the typically analyzed retinal regions (Figure 3).
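One common way to put a number on the fractal geometry of a branching pattern is box counting: overlay grids of shrinking box size on a binary vessel map, count the boxes that contain any vessel, and take the slope of log(count) against log(1/box size). The article does not say which estimator the VAMPIRE software uses, so the Python sketch below is only an illustration of the general idea, with hypothetical function names and toy shapes standing in for a segmented retinal image.

```python
import math


def box_count(pixels, box_size):
    """Count grid boxes of side `box_size` that contain at least one pixel."""
    return len({(x // box_size, y // box_size) for x, y in pixels})


def box_counting_dimension(pixels, box_sizes):
    """Least-squares slope of log N(s) against log(1/s)."""
    xs = [math.log(1.0 / s) for s in box_sizes]
    ys = [math.log(box_count(pixels, s)) for s in box_sizes]
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    num = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    den = sum((x - mean_x) ** 2 for x in xs)
    return num / den


# Toy shapes (not retinal data): a straight line and a filled square.
line = {(i, 0) for i in range(64)}
square = {(x, y) for x in range(64) for y in range(64)}
sizes = [1, 2, 4, 8, 16]

line_dim = box_counting_dimension(line, sizes)      # close to 1
square_dim = box_counting_dimension(square, sizes)  # close to 2
print(round(line_dim, 3), round(square_dim, 3))
```

A branching vessel tree would be expected to land somewhere between these two extremes, which is what makes the measure a candidate summary of how densely the vessels fill the retina.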

![Figure 3: An image taken from an ultrawidefield SLO (P200C; Optos Plc, United Kingdom). The VAMPIRE team has developed automatic extraction of the blood vessels for this type of image to analyze vascular parameters such as vessel widths and pathways. [Image courtesy of the VAMPIRE Project (Universities of Edinburgh and Dundee, United Kingdom).]](https://www.embs.org/wp-content/uploads/2015/11/fischer03-2476575.jpg)

The goal is to be able to do deep dives into large data sets, confirming existing biomarkers and discovering new ones more efficiently and clearly than previous work. The team has already performed smaller studies with VAMPIRE software to identify retinal signatures associated with different types of stroke. Last year, they gained access to the U.K. Biobank, which has the largest existing prospective collection of retinal images and other biological and environmental measures, which will allow the team to continue hunting for reliable biomarkers and troubleshooting its software. Most recently, it’s involved in a new prospective clinical trial called PREVENT, looking at middle-aged subjects who are at high, medium, and low dementia risks, and bringing to bear all of the technologies, from the fundus camera to OCT-A. The intent is to build upon existing evidence of changes in the retinal blood vessels in dementia and Alzheimer’s patients in hopes of determining whether those changes appear early enough and are predictable enough to help identify patients well before more serious changes show up in their brain and, ultimately, helping people begin treatments or change their lifestyles sooner.

“We’re trying to break it down into manageable steps,” says MacGillivray. “That’s what we’ve got to nail down, those telltale signs. But I think we are, us and other groups, slowly piecing those jigsaws together.” People have talked about the potential of retinal imaging for the body and brain for a long time, he says. “I think we need to start delivering on that potential.”

It’ll All End in Tears

Natacha Turck has spent a decade wading deep into bodily fluids in search of biomarkers that could help doctors better diagnose brain diseases like stroke, multiple sclerosis, and sleeping sickness. Blood and cerebrospinal fluid (CSF)—the liquid that bathes the brain—were her two primary resources for a long time, but they had their drawbacks. Blood is so rich in albumin and immunoglobulin proteins that other, more interesting compounds were often overshadowed. CSF, one of the gold-standard means by which to diagnose diseases like multiple sclerosis, requires a lumbar puncture to obtain—“a nightmare for the clinician, the researcher, and for the patient,” says Turck from her lab at the University of Geneva, Switzerland. She wanted another option. And then, about three years ago, she realized she had one: tears.

“Tears are not just the liquid surrounding the eye,” Turck explains. Tears have multiple layers, composed of sugars, electrolytes, neurohormones, enzymes, several hundred types of lipids, and up to 1,543 different proteins. The diagnostic potential is dazzling. A few years back, researchers from the University Medical Center of the Johannes Gutenberg University Mainz, Germany, discovered a panel of tear proteins that could discriminate patients with breast cancer from those without. And in a paper last year, Turck and her team noted the discovery of a specific protein called alpha-1 antichymotrypsin, which, of the 185 identified in the assay, was elevated in both the tears and the spinal fluid of multiple sclerosis patients: a CSF correlate, perhaps, without the lumbar puncture [3].

Research like this is on a steep climb. According to a recent review of the field, nearly half of the papers on tear biomarkers for systemic diseases (as opposed to ocular diseases like dry-eye) of the last decade appeared in the past two years. The authors, a team out of the University “G. d’Annunzio” of Chieti-Pescara, in Italy, cite the role of improved “omics” assays in helping open the field—tear samples are vexingly small, generally less than 5 μL a sample, which has made them difficult to analyze. Sensitive proteomic analyses targeting the tear film have been around for about a decade, but comprehensive corresponding metabolomic and lipidomic investigations have only recently been approached.

But it’s one thing to sift through the molecular markers in tears, and another to make practical use of them. Compared to other routes into the eye, like retinal imaging and eye tracking, this is easily the furthest behind in terms of use. Consider glucose: of all the chemicals in tears, glucose ranks among the longest studied, with an over 80-year history. Google has recently zeroed in on it for smart contact lens technology, and it is not the only one (see “Moving Toward Psychiatry” and “Making Contact”). Despite all of this work, however, the underlying science has yet to be fully established.

[accordion title=”Moving Toward Psychiatry”]

In addition to neurodegenerative disorders and traumatic brain injuries, eye-tracking technology may also bring a new objectivity to psychiatry.

In 2012, researchers at the University of Aberdeen, Scotland, reported that a small set of eye-tracking tasks could discriminate healthy subjects from people with schizophrenia with a success rate of over 98% [1]. The study included a series of short tests and free-viewing of scenes to gauge different abilities and preferences, such as how well subjects could keep their gaze steady or where they focused on pictures of facial expressions or landscapes.

“There was almost a constant difference between the healthy people and the patients’ eye movements, irrespective of whichever eye movement parameter you measured,” says Philip Benson, a psychology researcher and lead author on the paper.

Since then, the researchers have successfully applied the approach to a range of disorders, including bipolar disorder and major depression, creating a visually based task system to distinguish among the diseases. “These are tasks that tap into different systems in the brain, like brainstem function, survival mechanisms, attention and frontal lobe,” Benson explains. “And all of these different circuits in the brain are affected in different ways and to different degrees by different illnesses.”

The team is validating its results on a larger pool of patients, and Benson anticipates that the first test for schizophrenia will roll out to researchers and teaching hospitals in the next year and a half. The goal, he says, is to create a fixed, quantifiable battery of tests that a psychiatrist could use as an aid during diagnosis. “Typically, if you go and see your doctor, he’ll have available a thousand different tests that he can pull off the shelves—blood count, lipid panel, echocardiogram. That’s great, that provides evidence so they can devise the best treatment,” he says. “But in psychiatry, there’s nothing like that; they still have no objective tests.”

At the University of Toronto in Canada, eye-tracking researcher Moshe Eizenman is thinking along similar lines. His research begins with the concept that eye movements reflect a person’s worldview: what the brain expects to see, what the person pays attention to. In neuropsychiatric disorders, he says, “The model that people have of the world is not consistent with the model of the world that normal people have.”

Using an example from his own research, Eizenman explains that a person with an eating disorder will view pictures of cars or the environment exactly the same as a healthy individual would because those aren’t very relevant to the disease. But show him or her a series of body shapes, and his or her eye patterns will be very different.

He foresees a future where psychiatrists will be armed with sets of images, each tapping into different psychological diseases. “You might be able to monitor or detect disorders that are associated with models of the world that are not consistent with normal models,” he says. “And by combining all of this you can create a profile associated with the person that will have a multidimensional quality.”

Reference

- P. J. Benson, S. A. Beedie, E. Shephard, I. Giegling, D. Rujescu, and D. St. Clair, “Simple viewing tests can detect eye movement abnormalities that distinguish schizophrenia cases from controls with exceptional accuracy,” Biol. Psychiatry, vol. 72, pp. 716–724, 2012.

[/accordion]

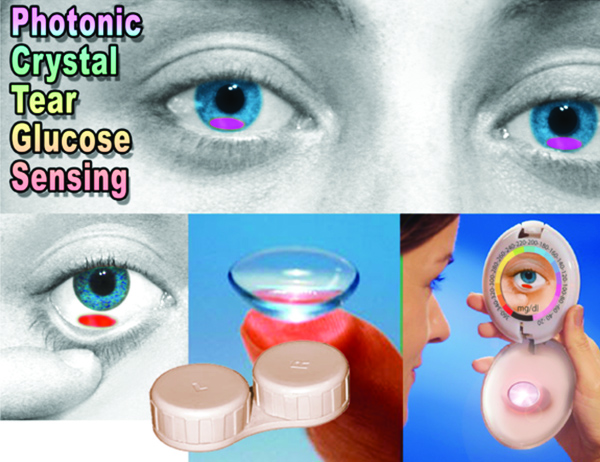

[accordion title=”Making Contact”]

Noninvasive, conveniently ubiquitous, and in constant contact with tear fluid, contact lenses are one of the most appealing ways to monitor chemicals in tears.

“This technology could be used not only for chronic conditions but also for after surgery or during the course of treatment. During a clinical trial, a specific chemical could be continuously monitored,” says Ali Yetisen, who recently reviewed the field of diagnostic contact lenses.

A basic lens has to meet a certain number of criteria. It needs to be made of a soft, comfortable material like a hydrogel. It can’t cause irritation, it needs to allow for a certain amount of oxygen and tear fluid to pass through to the eye, and it has to be able to withstand constant physical stresses from the wearer’s eye movements and blinking. A smart lens designed to monitor physical variables needs to be able to do all of that, with the added challenge of making sure the sensor is protected, has access to tears, and can’t irritate the eye lest it throw off the concentration of chemicals in the tears.

Several years ago, Sanford Asher’s group at the University of Pittsburgh designed a simple, glucose-sensing lens based on photonic crystals that functions without requiring power. It works a little bit like an opal, he explains. “If you have an opal, it’s composed of an array of particles of sand, and it shows ‘fire.’ But if you were to change the array spacing, that directly changes the color diffracted and the perceived color would change.” For his lens, he embedded a similar array of particles in a soft hydrogel lens, out of the visual field. The gel contained chemicals able to bind to glucose, and once bound, they forced the lens to expel water, changing the array spacing, thus changing the perceived color of the lens. It was quick, reversible, and lasted at least a week, a typical length of time for contact use. Supply a wearer with a color chart for comparison, and, in theory, he or she would be able to peek in a mirror, compare the color of the lens, and quickly assess the state of his or her glucose concentration. “It’s actually simple chemically and simple in terms of the interaction with light,” Asher says (Figure S1).
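The link between array spacing and color that Asher describes follows Bragg's law for diffraction, m·λ = 2·n·d·sin θ, where d is the spacing between particle planes, n is the refractive index of the gel, θ is the glancing angle of the light, and m is the diffraction order. The Python sketch below works through that relation. The spacings, the refractive index of 1.35, and the function name are assumed values for illustration, not measurements from Asher's lens.

```python
import math


def bragg_wavelength_nm(spacing_nm, refractive_index=1.35,
                        glancing_angle_deg=90.0, order=1):
    """Diffracted wavelength from Bragg's law: m * lambda = 2 * n * d * sin(theta)."""
    theta = math.radians(glancing_angle_deg)
    return 2.0 * refractive_index * spacing_nm * math.sin(theta) / order


# Assumed, illustrative plane spacings before and after the gel expels water.
swollen_nm = bragg_wavelength_nm(220.0)
shrunken_nm = bragg_wavelength_nm(190.0)

print(f"swollen gel:  {swollen_nm:.0f} nm")
print(f"shrunken gel: {shrunken_nm:.0f} nm")
```

Under these assumptions, a gel that shrinks as it binds glucose diffracts shorter wavelengths, so the lens would appear to shift toward the blue end of the spectrum.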

Other types of sensors that have emerged in recent years, based on holographic and fluorescent techniques, work similarly to Asher’s crystals. Electrochemical sensors, on the other hand, tend to be a little more complicated. Babak Parviz, an affiliate professor at the University of Washington and cofounder of Google’s Smart Contact Lens program (he is now with Amazon.com), reported in 2011 on his work with another type of glucose-sensing contact lens [1]. This prototype consisted of a soft polymer lens, outfitted with an enzyme-based sensor to detect and produce an electrochemical reaction in response to glucose, plus tiny metal electrodes laid out in a pattern of concentric rings around the lens. Accompanying the lens, the lab also reported on a preliminary wireless power delivery system that used electromagnetic radiation delivered from up to 15 cm away. The researchers anticipate someday also using wireless mechanisms to download the sensed information. Google and Novartis have explicitly detailed their intent to wirelessly connect their smart lens to mobile devices, and several of Google’s contact lens patents already include this step.

As Yetisen cautions, though, all of that is still far in the future. “The fundamental science is not there yet,” he says. “We don’t want to put the cart before the horse.”

Reference

- H. Yao, A. J. Shum, M. Cowan, I. Lähdesmäki, and B. A. Parviz, “A contact lens with embedded sensor for monitoring tear glucose level,” Biosens. Bioelectron., vol. 26, no. 7, pp. 3290–3296, 15 Mar. 2011.

[/accordion]

“Tears are not simple—if you make someone cry, you’ve got a new source, or alternative reservoir, for tears, and the glucose concentration can be different,” explains Sanford Asher, a chemist at the University of Pittsburgh, Pennsylvania. The chemical makeup of tears, including glucose concentration, differs by the kind of tear in question. The basic tear film is not equivalent to tears caused by irritation, which are not equivalent to tears caused by emotion. This, Asher explains, makes measuring tears a delicate affair. “Some people’s eyes will start to water just talking about tear fluid; you don’t have to do anything to them,” he says.

Most of those decades of tear glucose analysis produced inconsistent results that varied by as much as 1,000-fold. Asher performed some of the first genuinely reliable measurements of tear glucose concentrations in 2007, using careful sampling with a microcapillary tube and a mass-spectrometry method specifically developed in his lab. He found lower average glucose levels than most previous reports as well as a rough correlation to blood sugar, with a possible lag of 20 minutes. But he also found that, in some cases, different eyes tracked blood glucose differently, possibly due to undetected irritation in the eye. In one case, the difference was roughly fivefold between the eyes.
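A lag like this is typically estimated by sliding one time series against the other and finding the shift at which the two correlate best. The Python sketch below illustrates that idea on synthetic numbers; it is not Asher's analysis. The readings, the 5-minute sampling interval, the 0.03 tear-to-blood scale factor, and the function names are all made up for the example, and the tear series is built as a delayed copy of the blood series so the answer is known in advance.

```python
import math


def pearson(a, b):
    """Pearson correlation coefficient of two equal-length sequences."""
    mean_a = sum(a) / len(a)
    mean_b = sum(b) / len(b)
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / math.sqrt(var_a * var_b)


def best_lag(blood, tear, max_lag):
    """Return the delay (in samples) at which tear readings best track blood."""
    scores = {}
    for lag in range(max_lag + 1):
        # Pair each blood reading with the tear reading taken `lag` samples later.
        scores[lag] = pearson(blood[:len(blood) - lag], tear[lag:])
    return max(scores, key=scores.get), scores


SAMPLE_MINUTES = 5  # assumed sampling interval

# Synthetic blood glucose curve (mg/dL) after a meal; not real measurements.
blood = [90, 92, 95, 110, 140, 165, 170, 160, 145, 130,
         118, 108, 100, 96, 93, 91, 90, 90, 90, 90]

# Synthetic tear series: a scaled copy of the blood curve delayed by 4 samples.
delay = 4
tear = [0.03 * blood[max(i - delay, 0)] for i in range(len(blood))]

lag_samples, scores = best_lag(blood, tear, max_lag=8)
print(f"estimated lag: {lag_samples * SAMPLE_MINUTES} minutes")
```

Real tear data would be far noisier than this, and, as the two-eye discrepancy suggests, the best-fitting lag could differ from eye to eye.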

Today, it’s still not a given that tear glucose is a useful proxy for blood glucose. Evidence suggests that it probably is, but would it be enough to be useful? It’s still not entirely clear where the glucose is coming from, whether via blood leaking in from the nearby epithelial cells or whether actively carried there via transporter proteins. “Fundamental research needs to be carried out before technology development,” says Ali Yetisen, a researcher at Harvard Medical School and Massachusetts General Hospital. “Because you can develop a sensor and you can put it in the eye, but if it does not really reflect the concentration of, for example, glucose in blood, it won’t be useful.”

The field needs much larger, more cautious studies with many more people and many diabetics facing various conditions, possibly with hyperglycemic clamps to hold the blood sugar steady while researchers sop up their subjects’ tears. And yet, even now, according to the Italian review, there are still no standard methods of tear collection or storage. Microcapillary tubes like Asher’s are actually comparatively rare; paper strips are more common, though also more likely to cause irritation.

And of course, these are just the efforts and challenges for glucose, one of the most well-established tear markers so far. Other markers emerging from studies like Turck’s and those of the many other researchers who have joined the field may require similar levels of scrutiny. “We still don’t know the exact composition of tears,” Turck reminds us. “Until now, very few studies investigated them, and very few compared tears to blood, CSF, saliva, or urine.” And yet, she says, she has great hope for the area. “It’s not invasive, it’s very quick, and there is no risk associated with sampling to the patient,” she says. “It’s really a very promising diagnostic tool for the future.”

Looking to the Future

It’s clear that the eye is tantalizingly easy to observe, and research to date suggests that it could be a powerful diagnostic aid for some of the most difficult-to-assess diseases, like Alzheimer’s, autism, stroke, and traumatic brain injury.

Researchers foresee a day when eye-based technologies are in every doctor’s office, if not in every patient’s smartphone, not necessarily as stand-alone diagnostics but as early-warning or monitoring devices. Essentially, they would be just another tool in the diagnostic routine.

Many of the needed technologies are already here in some form or another: the contact lens prototypes and their electric circuits and photonic crystals; OCT and fundus cameras and automated detection of retinal features; free-viewing eye trackers; and the computational power that allows all of these to happen. The science is the rate-limiting factor: finding the biomarkers and establishing them through the large clinical trials needed to ensure their viability.

“I think we’re still quite far from looking at the retina with any of the current imaging and using it as a crystal ball,” Dhillon says. But, he adds, as each new layer of technology is brought to bear, from blood vessels to OCT to combined, multimodal tools like OCT-A, these “will enable us to be more and more accurate about our predictions about the state of health of the individual, the state of brain blood vessels, and the state of systemic disease likelihood. And that’s where the field is heading.” He is speaking about retinal imaging, of course, but much the same can be said just as easily about tear analyses and eye tracking.

“As you know, there is the saying of the eyes as windows to our soul,” Turck says. “This is exactly the same—the eyes are as windows to our health.”

References

- Oculogica [Online].

- G. Liew and J. J. Wang, “Retinal vascular signs: A window to the heart?” Rev. Esp. Cardiol., vol. 64, no. 6, pp. 515–521, 2011.

- C. Salvisberg, N. Tajouri, A. Hainard, P. R. Burkhard, P. H. Lalive, and N. Turck, “Exploring the human tear fluid: Discovery of new biomarkers in multiple sclerosis,” Proteomics Clin. Appl., vol. 8, no. 3–4, pp. 185–194, Apr. 2014.