It is predicted that the global shipment of smart wearables will approach 302.2 million devices in 2023, increasing from 222.9 million devices in 2019 [1]. It was also forecast in 2019 that the number of ear-worn devices, so-called hearables, would rise to 105.3 million in 2023, from 72 million in 2019 [2]. Given the relatively fixed position of the head with respect to the brain and vital organs in most daily activities, hearables provide more consistent recordings than more mobile parts of the body, such as the wrists. This allows for robust recordings of the electroencephalogram (EEG) [3], [4], [5], electrocardiogram (ECG) [6], and photoplethysmogram (PPG) [7], together with derived measures including the heart rate (HR) [4], respiratory rate [8], blood oxygen saturation (SpO2) [7], and blood glucose levels [9].

Benefiting from the form factor of an earplug with embedded sensors, hearable devices allow for portable, discreet, unobtrusive, and user-friendly 24/7 continuous monitoring of human physiological signals in the real world. This makes them a promising technology for ambulatory monitoring, sports science, sleep monitoring [10], and occupational medicine [11]. However, their more widespread deployment requires means to deal with the relatively weaker signals compared to standard wearables [12], together with enhanced user comfort and suppression of the effects of motion artifacts (due, e.g., to jaw movement, head swings, or walking), which corrupt the measurements.

While hearables have typically been considered in terms of their individual modalities, like ear-EEG [3] or ear-SpO2 [7], a multimodal design, which has the true potential to transform recreational and health applications alike [13], is still largely uncharted territory. To this end, we here introduce a novel multimodal hearable device that is capable of recording multiple physiological variables from the ear area, including ear-EEG, ear-ECG, and ear-PPG signals. The embedded movement and behavioral sensors (accelerometer and microphone) may serve to infer behavioral cues or as a reference in order to remove artifacts in real time [14], [15], while a wireless Bluetooth connection provides connectivity to smart environments. Taken together, this makes our device a standalone real-world monitor of the state of body and mind.

Materials and methods

Ear biometrics vary from person to person, which makes it challenging to design a hearable device that can fit the general population while ensuring proper electrode-skin contact, user comfort, compactness, and stability. To this end, we first developed a rigorous biophysics model of signal propagation from vital organs and the brain to the ear canal [16], [17]. This made it possible to pursue an informed, comfortable, and stable design of the shape, material, and size of the earplug and the embedded sensors, thus allowing for prolonged unobtrusive measurements such as in sleep monitoring [18].

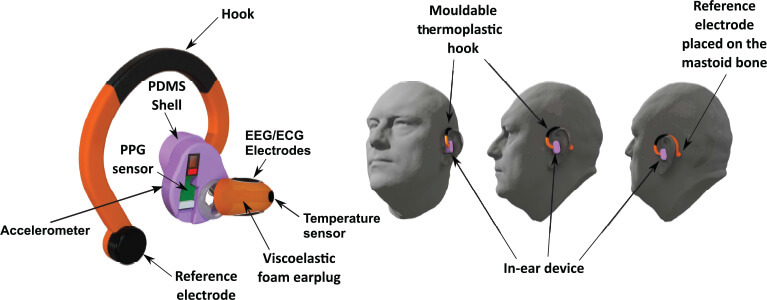

Our hearable device, depicted in Figure 1, is composed of a viscoelastic foam earplug, a silicone ear shell, and a thermoplastic hook. Three electrodes (two in-ear silver cloth electrodes and one gold cup on the mastoid) and four other sensors [temperature, PPG, microphone, and accelerometer (IMU)] are integrated into the device, allowing for EEG, ECG, and PPG recording as well as artifact characterization. The ear shell houses the PPG sensors, taking advantage of the prominent vascularization of the auricle [19]. The hook further stabilizes the design by extending around the auricle up to the mastoid bone, and hosts a reference electrode for EEG recordings (Figure 1).

Figure 1. The multimodal hearable device with multiple embedded sensors (electrodes, PPG, accelerometer, microphone, and temperature) for recording neural function, vital signals, and artifacts (left), together with different views of the device in operation (right).

Design and signal validation

Ten healthy volunteers, five males and five females aged between 20 and 30, participated in a study to validate our device. The study was conducted under the approval of the Imperial College London Ethics Committee (JRCO 20IC6414), and all subjects provided full informed consent. To reduce electrode-skin impedance, a conductive gel was applied to the cloth electrodes before insertion, and ear-EEG, ear-ECG, and ear-PPG were all recorded. These recordings were benchmarked against simultaneously recorded standard measurement methods and sites, including scalp EEG, lead I ECG from the arms, and PPG from the finger. To examine the comfort and long-term wearability of the design, the participants were required to wear the device for 3 hours while engaging in routine daily and sports activities.

The EEG, ECG, PPG, accelerometer, and microphone signals were simultaneously recorded in order to benefit from the collocated position of the sensors and the supporting data/sensor fusion principles in signal detection and denoising. For rigor, the in-ear bioimpedance was monitored throughout the recordings using a g.tec HIAMP amplifier, while scalp-EEG was recorded using a g.tec UNICORN EEG cap with dry electrodes. Previous work [6], [16] has demonstrated the feasibility of detecting ECG from different locations around the head and inside the ear canal [20]. The in-ear ECG signal was found to be about 50 times smaller in amplitude than lead I ECG from the arms; this was expected given the longer propagation path to the head surface compared to the upper torso [16] and the cavities along the pathway (windpipe and mouth). The SpO2 levels were estimated indirectly through PPG measurement of red and infrared light absorption, whereby the ratio between the red and the infrared components was used in conjunction with an empirically derived linear approximation to calculate the SpO2 value as a proxy for blood oxygen saturation [7]. Relative motion between the electrodes and skin, which gives rise to so-called motion artifacts, was recorded using an IMU and an ECM microphone; these served as a reference for artifact removal during jaw clenching, chewing, or head movement.
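The ratio-based SpO2 estimate described above can be sketched as follows. This is only an illustration under stated assumptions, not the calibration used in this study: the function name `spo2_from_ppg` and the linear coefficients `a = 110` and `b = 25` (a commonly quoted generic approximation) are placeholders, and the AC and DC components are taken crudely as the peak-to-peak amplitude and the mean of each window.

```python
import math


def spo2_from_ppg(red, infrared, a=110.0, b=25.0):
    """Estimate SpO2 (%) from one window of red and infrared PPG samples.

    a and b are placeholder calibration coefficients (assumed, not taken
    from the study); a real device uses an empirically fitted curve.
    """
    def ac_dc(x):
        dc = sum(x) / len(x)      # DC: mean light level in the window
        ac = max(x) - min(x)      # AC: peak-to-peak pulsatile amplitude
        return ac, dc

    ac_red, dc_red = ac_dc(red)
    ac_ir, dc_ir = ac_dc(infrared)
    ratio = (ac_red / dc_red) / (ac_ir / dc_ir)   # "ratio of ratios" R
    return a - b * ratio                          # linear approximation


# Synthetic example: a 1.2 Hz pulse sampled at 100 Hz for 5 s
t = [n / 100 for n in range(500)]
red = [1.0 + 0.01 * math.sin(2 * math.pi * 1.2 * ti) for ti in t]
infrared = [1.0 + 0.02 * math.sin(2 * math.pi * 1.2 * ti) for ti in t]
print(round(spo2_from_ppg(red, infrared), 1))
```

In this synthetic case the red pulsatile component is half the infrared one, so the ratio is 0.5 and the placeholder line maps it to 97.5%.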

Results

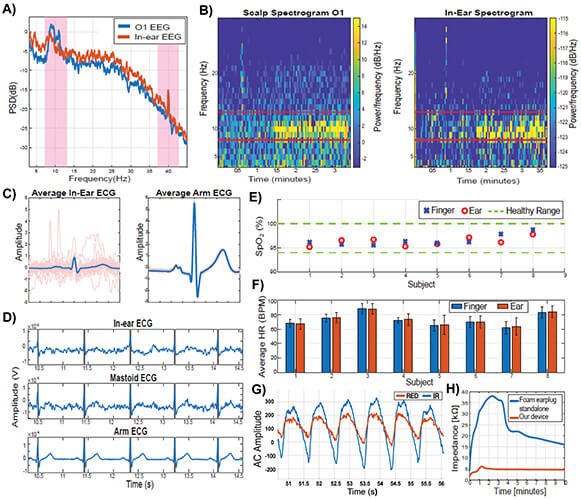

The ear-EEG validation was performed against scalp-EEG through alpha wave attenuation and the auditory steady state response (ASSR). The corresponding power spectra are shown in Figure 2(a) and the time–frequency spectrograms in Figure 2(b). Observe the close match between the on-scalp and in-ear recordings, including the eyes-closed related increase in the alpha band power in EEG, and the preserved respective ASSR peaks at 40 Hz. The spectral coherence between the corresponding in-ear and scalp O1 power spectra had a mean of 78.61% ± 1.4% over the subjects.
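As a rough illustration of how agreement between two simultaneously recorded channels can be quantified, the sketch below computes the magnitude-squared coherence at a single frequency by averaging cross- and auto-spectra over non-overlapping segments. The exact coherence estimator used in this study is not specified here, so the function `coherence_at`, the segment length, and the synthetic "scalp" and "ear" signals are all assumptions.

```python
import cmath
import math
import random


def coherence_at(x, y, fs, freq, seg_len):
    """Magnitude-squared coherence (0..1) of x and y at one frequency.

    Cross- and auto-spectra are averaged over non-overlapping segments
    of seg_len samples; no window function is applied, for brevity.
    """
    kernel = [cmath.exp(-2j * math.pi * freq * n / fs) for n in range(seg_len)]
    sxy, sxx, syy = 0j, 0.0, 0.0
    for start in range(0, min(len(x), len(y)) - seg_len + 1, seg_len):
        # Single-bin Fourier coefficient of each segment
        fx = sum(x[start + n] * kernel[n] for n in range(seg_len))
        fy = sum(y[start + n] * kernel[n] for n in range(seg_len))
        sxy += fx * fy.conjugate()
        sxx += abs(fx) ** 2
        syy += abs(fy) ** 2
    if sxx == 0.0 or syy == 0.0:
        raise ValueError("no signal power at this frequency")
    return abs(sxy) ** 2 / (sxx * syy)


# Synthetic stand-ins: a shared 10 Hz rhythm plus independent noise
random.seed(0)
fs = 200
t = [n / fs for n in range(20 * fs)]
scalp = [math.sin(2 * math.pi * 10 * ti) + random.gauss(0, 0.5) for ti in t]
ear = [0.5 * math.sin(2 * math.pi * 10 * ti + 0.3) + random.gauss(0, 0.5) for ti in t]
print(coherence_at(scalp, ear, fs, 10.0, fs))   # high: shared rhythm
print(coherence_at(scalp, ear, fs, 35.0, fs))   # low: independent noise only
```

With only 20 segments the estimate is biased upward at frequencies without shared activity, which is why longer recordings or overlapping windowed segments are normally preferred in practice.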

Figure 2. Signal quality from our multimodal hearable device. (a) Power spectra for ear-EEG and scalp-EEG during the alpha-attenuation and ASSR conditions. (b) Corresponding time-frequency spectrograms. (c) Average cardiac cycles for ear-ECG and arm-ECG. (d) Time waveforms for ear-ECG, mastoid ECG, and arm ECG. (e) Blood oxygen levels from ear-PPG and finger-PPG. (f) HRs estimated from ear-PPG and finger-PPG. (g) Red and infra-red AC waveforms for ear-PPG, bandpass filtered to 0.9–30 Hz. (h) Time evolution of the skin-electrode impedance for our multimodal hearable device, against standalone ear-EEG.

The cardiac cycle was estimated by averaging the data segments across several consecutive time windows (0.8 s for each time segment). Figure 2(c) shows an average cardiac cycle for one subject for both ear-ECG and arm-ECG. Figure 2(d) further provides a comparison between segments of simultaneously recorded in-ear ECG, mastoid-ECG, and lead I ECG from the arms. Observe the close alignment between the respective R-peaks, which demonstrates the feasibility of accurate HR estimation from ear-ECG.
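The segment averaging described above can be sketched as a simple ensemble average. This is a minimal illustration that assumes the 0.8 s windows are centred on known R-peak sample indices (for example, taken from the reference arm-ECG); the function name `average_cycle` and the alignment strategy are assumptions rather than details given in the text.

```python
def average_cycle(signal, fs, r_peaks, window_s=0.8):
    """Average fixed-length segments of `signal` centred on each R-peak index."""
    half = int(round(window_s * fs / 2))
    # Keep only the peaks for which a complete window fits in the recording
    segments = [signal[p - half:p + half] for p in r_peaks
                if p - half >= 0 and p + half <= len(signal)]
    if not segments:
        raise ValueError("no complete segment around the given R-peaks")
    n = len(segments)
    # Sample-wise mean across the aligned segments
    return [sum(column) / n for column in zip(*segments)]


# Toy example: unit impulses standing in for R-peaks, sampled at 100 Hz
fs = 100
ecg = [0.0] * 500
peaks = [100, 200, 300, 400]
for p in peaks:
    ecg[p] = 1.0
cycle = average_cycle(ecg, fs, peaks)
print(len(cycle), cycle[40])
```

Because uncorrelated noise averages out across segments while the time-locked cardiac waveform does not, the averaged cycle improves in signal-to-noise ratio as more beats are included.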

Figure 2(e) shows the estimated blood oxygen levels, in terms of SpO2, from the concha cymba and the index finger. All the SpO2 values were within the physiologically healthy range of 94%–100%, while across all subjects we observed a mean difference of 0.23% (max 1.2%, min 0.05%) with the root mean square value of 1.21%. We hypothesize that in-ear recordings are more accurate as the ear is not subject to the vasoconstriction of blood vessels, typically exhibited on other body sites. The results for HR estimation from PPG are shown in Figure 2(f), with the mean root mean square difference between the ear and finger results of 0.47, and the root mean square error of 0.96. The excellent match between the ear-HR and finger-HR is supported by Figure 2(g), which shows the AC waveforms of the PPG detected in the concha cymba, highlighting the high signal-to-noise ratio (SNR) of ear recordings.
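For readers who wish to reproduce this kind of comparison on their own paired recordings, the agreement measures quoted above (mean difference and root mean square difference) can be computed as in the sketch below. The function name `agreement` and the three example readings are illustrative assumptions, not data from this study.

```python
import math


def agreement(ear, finger):
    """Return (mean difference, root mean square difference) of paired readings."""
    if len(ear) != len(finger) or not ear:
        raise ValueError("need two equally long, non-empty series")
    diffs = [e - f for e, f in zip(ear, finger)]
    mean_diff = sum(diffs) / len(diffs)
    rms_diff = math.sqrt(sum(d * d for d in diffs) / len(diffs))
    return mean_diff, rms_diff


# Made-up SpO2 readings (%), for illustration only
ear_spo2 = [98.0, 97.0, 99.0]
finger_spo2 = [97.0, 97.0, 98.0]
mean_diff, rms_diff = agreement(ear_spo2, finger_spo2)
print(mean_diff, rms_diff)
```

The mean difference captures systematic bias between the two sites, while the root mean square difference also reflects the spread of the disagreement.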

Finally, since the contact between the skin and sensors plays a crucial role in obtaining a good quality signal, in Figure 2(h) we evaluated the electrode-skin bioimpedance of our multimodal hearable device against a standalone ear-EEG. At the steady state after 10 min, our device achieved an impedance of 5 kΩ, against 16 kΩ for the standalone ear-EEG, while the required time to reach stable operation was 2 min, against 10 min for the standalone ear-EEG.

The success of hearables in real-world physiological recordings has also highlighted that motion artifacts (arising from, e.g., head/jaw movement or walking) represent the main obstacle to their reliable 24/7 operation in the real world.

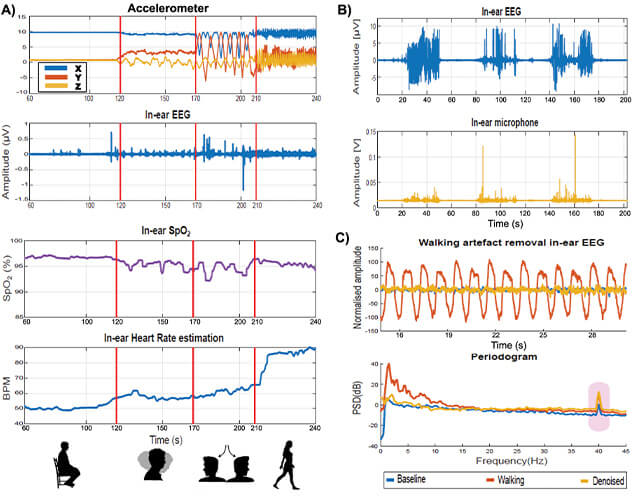

Figure 3(a) shows the readings of the accelerometer within our hearable device (shown in Figure 1) during various head movements, and the effects of the corresponding motion artifacts on physiological recordings. Observe from Figure 3(a) how the ear-EEG, ear-SpO2, and ear-HR were particularly stable in the first 120 s when the subject was sitting still, while in the last 140 s motion artifacts made the physiological recordings invalid. Figure 3(b) shows the effects of chewing artifacts on ear-EEG measurements, against the artifact reference from the embedded microphone. The clear correspondence between the artifacts and the reference sensor signals suggests the feasibility of online artifact removal in future recreational and eHealth applications.

Figure 3. Effects of artifacts on our multimodal hearable device. (a) Artifact due to head movements, detected by the accelerometer. After 120 s of sitting still, the subject performed various head swings (from 120 to 170 s, and from 170 to 210 s), followed by walking after 210 s. Observe the deteriorating effect of the so induced motion artifacts in ear-EEG, ear-SpO2, and HR from the ear. (b) Chewing artifacts represented in the EEG trace (top) and detected by the embedded microphone (bottom). (c) Example of successful removal of motion artifact due to walking, using the accelerometer as a reference. Observe the maintained ASSR peak at 40 Hz.

Indeed, the multimodal nature of our hearable device allows for the information from the embedded nonphysiological sensors to be used as a reference in artifact removal. This is illustrated in Figure 3(c), where the top plot shows the effects of a walking artifact on ear-EEG, while the bottom plot shows successful walking artifact removal from ear-EEG using the accelerometer signal as a reference. Observe that the denoised signal (in amber) is very close in the power spectrum to the clean baseline signal (in blue), for both background EEG and the ASSR peak (at 40 Hz in EEG). The achieved artifact reduction was 20 dB on average.
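One common way to use a reference sensor for artifact removal is adaptive noise cancellation. The sketch below uses a normalized least mean squares (NLMS) filter as an assumed stand-in; the algorithm actually used to obtain Figure 3(c) is not specified in this section, and the function names, filter length, step size, and all synthetic signals are hypothetical.

```python
import math
import random


def nlms_cancel(primary, reference, taps=4, mu=0.1, eps=1e-8):
    """Remove the part of `primary` that is linearly predictable from `reference`."""
    w = [0.0] * taps
    cleaned = []
    for n in range(len(primary)):
        # Most recent `taps` reference samples, zero-padded at the start
        x = [reference[n - k] if n - k >= 0 else 0.0 for k in range(taps)]
        estimate = sum(wk * xk for wk, xk in zip(w, x))   # artifact estimate
        error = primary[n] - estimate                     # cleaned sample
        norm = eps + sum(xk * xk for xk in x)
        w = [wk + mu * error * xk / norm for wk, xk in zip(w, x)]
        cleaned.append(error)
    return cleaned


def power_db(x):
    """Mean power of a sequence in decibels."""
    return 10 * math.log10(sum(v * v for v in x) / len(x))


# Synthetic test: 40 Hz "ASSR" plus an artifact derived from a noisy 2 Hz "gait" signal
random.seed(1)
fs = 250
t = [n / fs for n in range(10 * fs)]
eeg = [0.1 * math.sin(2 * math.pi * 40 * ti) for ti in t]
accel = [math.sin(2 * math.pi * 2 * ti) + random.gauss(0, 0.5) for ti in t]
artifact = [2.0 * accel[n] + (accel[n - 1] if n else 0.0) for n in range(len(t))]
contaminated = [e + a for e, a in zip(eeg, artifact)]

cleaned = nlms_cancel(contaminated, accel)
half = len(t) // 2                                  # skip the adaptation phase
residual = [c - e for c, e in zip(cleaned[half:], eeg[half:])]
reduction_db = power_db(artifact[half:]) - power_db(residual)
print(f"artifact reduction: {reduction_db:.1f} dB")
```

The reduction obtained on such idealized synthetic data is not comparable to the 20 dB reported for real recordings, where the coupling between movement and the electrode signal is neither linear nor stationary.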

Wearable technology is envisaged to revolutionize the way we approach health and well-being. However, for this to become a reality, multiple physiological sensors must be integrated into a discreet, comfortable, unobtrusive, and nonstigmatizing device. Our hearables prototype is no bigger than a common sports headphone, and represents the first device to simultaneously record EEG, ECG, and PPG signals from the ear, thus providing a stand-alone and noninvasive means for continuous monitoring of the state of body and mind. The device could also promote awareness of the importance of monitoring and storing personal health-related information, together with allowing for derived measures such as blood pressure, blood glucose levels, calorie intake, calories burned, sleep patterns, and physical activity. It is our hope that hearables, in conjunction with the trends in lifelong learning and artificial intelligence, will eventually help us to better understand psycho-physiological processes in a range of scenarios, including stress, well-being, and future eHealth.

Acknowledgments

This work was supported by the Racing Foundation, Dementia Research Institute at Imperial College London, and the USSOCOM MARVELS Grant. The work of Edoardo Occhipinti was supported by UK Research and Innovation (UKRI Centre for Doctoral Training in AI for Healthcare Grant no. EP/S023283/1).

References

- IDC Quarterly Wearable Device Tracker. (2020). Earwear and Watches Expected to Drive Wearables Market at a CAGR of 7.9%, Says IDC. [Online]. Available: https://www.idc.com/getdoc.jsp?containerId=prUS45271319

- N. Niknejad et al., “A comprehensive overview of smart wearables: The state of the art literature, recent advances, and future challenges,” Eng. Appl. Artif. Intell., vol. 90, Apr. 2020, Art. no. 103529.

- D. Looney et al., “The in-the-ear recording concept: User-centered and wearable brain monitoring,” IEEE Pulse, vol. 3, no. 6, pp. 32–42, Nov. 2012.

- J.-H. Park et al., “Wearable sensing of in-ear pressure for heart rate monitoring with a piezoelectric sensor,” Sensors, vol. 15, no. 9, pp. 23402–23417, Sep. 2015.

- P. Kidmose et al., “A study of evoked potentials from ear-EEG,” IEEE Trans. Biomed. Eng., vol. 60, no. 10, pp. 2824–2830, Oct. 2013.

- G. Hammour et al., “Hearables: Feasibility and validation of in-ear electrocardiogram,” in Proc. 41st Annu. Int. Conf. IEEE Eng. Med. Biol. Soc. (EMBC), Jul. 2019, pp. 5777–5780.

- H. J. Davies et al., “In-ear SpO2: A tool for wearable, unobtrusive monitoring of core blood oxygen saturation,” Sensors, vol. 20, no. 17, p. 4879, Aug. 2020.

- K. Taniguchi and A. Nishikawa, “Earable POCER: Development of a point-of-care ear sensor for respiratory rate measurement,” Sensors, vol. 18, no. 9, p. 3020, Sep. 2018.

- G. Hammour and D. P. Mandic, “An in-ear PPG-based blood glucose monitor: A proof-of-concept study,” Sensors, vol. 23, no. 6, p. 3319, Mar. 2023.

- T. Nakamura et al., “Hearables: Automatic overnight sleep monitoring with standardized in-ear EEG sensor,” IEEE Trans. Biomed. Eng., vol. 67, no. 1, pp. 203–212, Jan. 2020.

- M. Masè, A. Micarelli, and G. Strapazzon, “Hearables: New perspectives and pitfalls of in-ear devices for physiological monitoring. A scoping review,” Frontiers Physiol., vol. 11, p. 568886, Oct. 2020.

- I. Zibrandtsen et al., “Case comparison of sleep features from ear-EEG and scalp-EEG,” Sleep Sci., vol. 9, no. 2, pp. 69–72, Apr. 2016.

- C. K. H. Ne et al., “Hearables, in-ear sensing devices for bio-signal acquisition: A narrative review,” Expert Rev. Med. Devices, vol. 18, no. 1, pp. 95–128, Dec. 2021.

- G. M. Hammour and D. P. Mandic, “Hearables: Making sense from motion artefacts in ear-EEG for real-life human activity classification,” in Proc. 43rd Annu. Int. Conf. IEEE Eng. Med. Biol. Soc. (EMBC), Nov. 2021, pp. 6889–6893.

- E. Occhipinti et al., “Hearables: Artefact removal in ear-EEG for continuous 24/7 monitoring,” in Proc. Int. Joint Conf. Neural Netw. (IJCNN), Jul. 2022, pp. 1–6.

- W. von Rosenberg et al., “Hearables: Feasibility of recording cardiac rhythms from head and in-ear locations,” Roy. Soc. Open Sci., vol. 4, no. 11, Nov. 2017, Art. no. 171214.

- M. C. Yarici, M. Thornton, and D. P. Mandic, “Ear-EEG sensitivity modeling for neural sources and ocular artifacts,” Frontiers Neurosci., vol. 16, Jan. 2023, Art. no. 997377.

- T. Nakamura, V. Goverdovsky, and D. P. Mandic, “In-ear EEG biometrics for feasible and readily collectable real-world person authentication,” IEEE Trans. Inf. Forensics Security, vol. 13, no. 3, pp. 648–661, Mar. 2018.

- F. Tilotta et al., “A study of the vascularization of the auricle by dissection and diaphanization,” Surgical Radiologic Anatomy, vol. 31, no. 4, pp. 259–265, Apr. 2009.

- M. Yarici et al., “Hearables: Feasibility of recording cardiac rhythms from single in-ear locations,” Roy. Soc. Open Sci., vol. 11, no. 1, Jan. 2024, Art. no. 221620.