The girl looks about 10 years old. She lies quietly on the emergency room exam bed, her eyes wide. Half an hour ago she was swinging—a little too wildly—from monkey bars in the park. Then, she fell.

“Hey kiddo,” says Dr. Srihari Namperumal. “I’m just going to do a quick exam, ok?” The girl nods. “Does your head hurt?” She nods again. “Ok, I’m sorry,” the doctor replies. “Does anything else hurt?” She shakes her head “no.”

Dr. Namperumal looks at the monitor—her heart rate is stable. He brings a stethoscope to her chest and listens. He then takes an ultrasound probe and moves it over her belly. He sees her kidney and liver, with no signs of internal bleeding in the abdomen. He moves the probe to the heart—no evidence of trauma there. Suddenly, the heartbeat monitor ticks up. The girl has rolled onto her side and appears to be having a seizure.

“Dr. Ribeira! Please get 5 mg of midazolam stat.” Dr. Ribeira hustles over to the patient, pulls on a pair of glasses and delivers a shot of midazolam to the girl’s thigh. Her heartbeat slows and the seizure lifts. For the moment, she appears stable.

But there is another angle to this scene. If you look at the exam table from across the room, the girl is not there. The two doctors attending to her are just pawing the air. From this angle, there is actually no patient present—just some medical equipment and two men wearing augmented reality eyegear. This is where fact meets fiction: it is the scene of a medical simulation. There never was a real person lying on the exam table being examined for injuries by Dr. Namperumal, but there may have been a girl, once, who slipped from monkey bars, hit a pole, and got a headache so fierce her mother rushed her to the emergency room. That girl’s accident became a case, and that case was tapped for inclusion in SimX, an alpha-stage augmented reality technology that provides holographic patients on which physicians can practice (see Figure 1). Drs. Namperumal and Ribeira exist. They used actual stethoscopes and syringes in the simulation to treat the girl with the head injury. However, they were not in an emergency room; they were on a stage in Seoul, South Korea, at the 2014 Tech Plus Forum, demonstrating the possibilities of their simulation technology, still in development.

A Safer Way to Make Mistakes

This is one scene, among many, playing out in the world of medical simulation—a relatively young, but fast-growing area in health care. In medical simulation, one of the most powerful insights about how we learn—that our sharpest learning occurs in the wake of mistakes—is being fleshed out in the forms and figures of new or reinvigorated technologies. The role for simulation in health care training can be summarized this way: to become skilled at practicing medicine takes practice. Practicing entails making mistakes (the more the better, really, because mistakes are how people learn). And mistakes are better made on fake patients than on real ones. No one will argue that last point. What has been slower to grow in the minds of educators and practitioners is conviction in the fidelity of a simulation to the real thing and its validity in teaching the range of learning objectives at play in any given medical scenario.

Reducing the number of mistakes made in treating patients took on a greater urgency in 1999 when the Institute of Medicine published “To Err Is Human,” which estimated the number of preventable patient deaths due to medical error at a minimum of 44,000 a year, and potentially as high as 98,000 [1]. Despite national efforts over the last 15 years to lower those numbers, a 2013 study published in the Journal of Patient Safety found that the number of deaths in hospitals due to preventable harm may in fact be much higher: anywhere from 200,000 to 400,000 a year [2], which would make it the number three cause of death among Americans [3].

The “old school” apprenticeship model of “see one, do one, teach one” was revealing its fissures (especially when it comes to low-frequency/high-acuity events). Meanwhile, innovations in medical technology also proved there was a need for more places and ways to practice outside of the operating room and emergency room, as happened with the advent of laparoscopic surgery in the late 1980s. Health care saw how new technologies begot new opportunities for error. Laparoscopic technology entailed a new set of skills and proved complicated to learn, resulting in a high incidence of error, even in the hands of seasoned surgeons. “You learned by doing operations and you learned by making mistakes along the way. It was part of the risk of medicine,” recalls Jeff Berkley, chief executive officer of Mimic Technologies, a company that develops simulation technology for surgical robotics.

Over the past two decades, simulation programs were implemented in growing numbers and the methods and tools used grew in sophistication. Insurers took note of these simulation programs. As just one example among many, in response to the rising number of laparoscopy-related malpractice cases, Controlled Risk Insurance Company, Ltd. (CRICO), the insurance carrier for the Harvard Medical Institutions, introduced a premium rebate to any general surgeons who underwent “Fundamentals of Laparoscopic Surgery,” a simulation-based program produced by the Society of American Gastrointestinal and Endoscopic Surgeons (SAGES) [4].

This need for training to keep up with technological innovation is not limited to minimally invasive surgery. “With the fast pace of innovation and disruptive technologies we need a surrogate, we need another way of learning…You want the surgeon, the anesthesiologist, the nurses—you want the whole team to practice on plastic and pixels, not on human beings,” quips Dr. Robert Amyot, president of CAE Healthcare, a leading manufacturer of simulation products.

The Quieter and Ever-Afflicted Patient Simulator

Since the publication of “To Err Is Human,” the plastic and pixels of simulators have become ever more “human.” Harvey has come a long way since 1968—and so have the rest of his plastic kinfolk. Harvey was one of the first plastic patients, a cardiopulmonary simulation manikin designed by Dr. Michael Gordon and introduced to the world in 1968. Today, Harvey has evolved to the point that he can simulate 30 disease states, replicate normal and abnormal breath sounds in six areas, and even “talk” so that students can take down his history. Simulation manikins now are portable and distinctly quieter than their forebears. “Even ten years ago,” says Lance Baily, who authors the simulation resource website healthysimulation.com, “the manikins were very chained down to the external compressors, which were very noisy.” The compressors, which enabled the chest to rise and fall to mimic breathing, have now been internalized in the manikin.

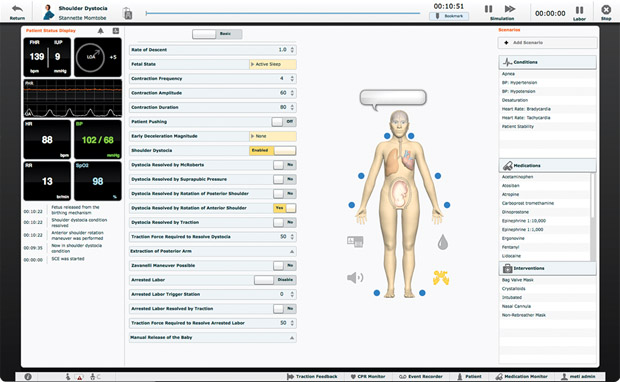

Recent improvements to the industry’s leading birthing simulators, such as CAE’s “Lucina” and Gaumard’s “Victoria,” include more realistic skin and joints that articulate so that obstetrician trainees can practice placing the legs in specific positions for labor (see Figures 2 and 3). They look and feel less like puppets, observes Baily. Victoria’s eyes blink and dilate, and her abdomen hardware can be changed out to simulate different childbirth complications and obstetrical emergencies, such as a C-section, postpartum hemorrhaging, and shoulder dystocia. Lucina can now also be outfitted with a nonpregnant abdomen. This means she can double as a patient with different issues: five simulated clinical experiences, to be exact. Other possible simulations include sepsis with hypotension, brain attack with thrombolytic therapy, and motor vehicle collision with hypovolemic shock. What would be a trauma-riddled and exceptionally unlucky set of experiences for a real person makes for a more cost-effective and learning-rich menu of crises when bundled into a single patient simulator.

Innovations in Surgical Simulation

When Jeff Berkley founded Mimic Technologies in 2001, he saw that most learning was happening on patients. “When robotics came on the scene,” he remembers, “we saw this as an opportunity.” Here was an even more complex approach to surgery—using a robot to assist in minimally invasive surgery. The robot’s interface allowed for greater precision; for example, it could scale motion so that for every 5 cm the surgeon’s hand moved, the robot “hand” would move 1 cm. When the surgeon’s hand rotated right, the tool would rotate right. With this increased control came, well, more controls. “The only problem now is you have seven foot pedals to control all this energy,” explains Berkley. “There are concepts such as clutching and scaling. You’re now controlling your own camera; somebody else isn’t holding it. So there’s a lot more to learn.” The surgeon console was looking more like a fighter cockpit in terms of instrumentation. And the robot surgeons needed to practice on a US$1.8 million tool that most hospitals could not afford to reserve for training time.

“Before, with laparoscopic surgery…you could grab a couple tools, a shoebox, and a ham hock. You didn’t even have to use a real camera; you could put a Webcam in there and for a thousand bucks or so you could practice suturing,” Berkley recalls. With the robot, by contrast, you would be lucky to get limited access to practice on it nights and weekends when it was not in use for surgeries.

This second factor, the scarcity of access to train on the tool, prompted Berkley to develop a low-cost simulator of the da Vinci Surgical System, Intuitive Surgical’s robotic platform for minimally invasive surgery. In addition to training on specific operations, the simulator, called the dV-Trainer, offers a module on advanced surgical skills in general, such as needle control and driving, suturing and knot tying, applying energy, and dissecting (see Figure 4). Berkley, whose expertise includes continuum mechanics-based tissue modeling, says virtual reality in simulation entails more physics and therefore takes less of a video game approach. To fool a person’s vision into perceiving an animation of muscle tissue being pulled, the screen has to update 30 to 60 times per second. For touch to be perceived as real, the update rates are much higher: soft tissue must update 300 times per second, and rigid objects 1,000 times per second.
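To get a feel for what those rates demand, the arithmetic can be sketched in a few lines of Python. This is only an illustration: the rates are the ones Berkley cites, while the function name and the dictionary labels are hypothetical and not taken from any Mimic software.

```python
# Per-update time budget implied by each refresh rate quoted above.
# The function name and the labels are illustrative assumptions.

def budget_ms(updates_per_second: float) -> float:
    """Return the time available for one update, in milliseconds."""
    return 1000.0 / updates_per_second


RATES_HZ = {
    "vision (low end)": 30,
    "vision (high end)": 60,
    "touch, soft tissue": 300,
    "touch, rigid objects": 1000,
}

# Milliseconds available per update for each rate.
BUDGETS_MS = {label: budget_ms(rate) for label, rate in RATES_HZ.items()}

if __name__ == "__main__":
    for label, ms in BUDGETS_MS.items():
        print(f"{label}: {ms:.2f} ms per update")
```

At 1,000 updates per second, a tissue model has about one millisecond to produce each answer, compared with roughly 17 to 33 milliseconds for the picture on screen.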

“It’s one thing to have a good model where you can predict what’s going to happen, but can you solve it fast enough that you can use it in real-time simulation?” Berkley asks. This question is one of several fueling development work at Mimic Technologies as they iterate on the degree of realism in their simulator’s tissue modeling (see “‘How Am I Doing?’: The Simulator Gives Feedback”).

[accordion title=”How Am I Doing?: The Simulator Gives Feedback”]

In addition to the economic argument for simulation (training on the US$100,000 robotic simulator versus the US$1.8 million robot, for example), a simulator can do more than train students. It can provide objective feedback on any user’s performance against a set of metrics, which means it can be used to measure skills maintenance, improvement, or decay in already-practicing surgeons. So, for example, as an individual “performs” a partial nephrectomy or a hysterectomy on the dV-Trainer, it can measure the time to complete the operation and the user’s economy of movement. Jeff Berkley explains that the simulator is capturing information that tells us: “Are you moving from point A to point B in a straight line, or are you moving on a very erratic path? What about the force you’re applying to the tissue?”
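One way to picture the “straight line or erratic path” question is to compare the distance an instrument tip actually traveled with the straight-line distance between its start and end points. The sketch below is a hypothetical illustration, not the dV-Trainer’s or MScore’s actual calculation, which is not described here; the function names and the sample coordinates are invented for the example.

```python
import math
from typing import Sequence, Tuple

# A sampled instrument-tip position (x, y, z). Units are arbitrary here.
Point = Tuple[float, float, float]


def path_length(points: Sequence[Point]) -> float:
    """Total distance traveled along the sampled path."""
    return sum(math.dist(a, b) for a, b in zip(points, points[1:]))


def straightness(points: Sequence[Point]) -> float:
    """Straight-line distance divided by distance traveled.

    1.0 means a perfectly direct move; smaller values mean a more
    wandering path. This ratio is an assumed, simplified metric.
    """
    traveled = path_length(points)
    if traveled == 0:
        return 1.0  # no movement recorded, so nothing was wasted
    return math.dist(points[0], points[-1]) / traveled


# Invented sample data: both paths start and end at the same points.
direct = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.0, 0.0, 0.0)]
erratic = [(0.0, 0.0, 0.0), (1.0, 1.0, 0.0), (2.0, 0.0, 0.0)]

if __name__ == "__main__":
    print(f"direct:  {straightness(direct):.2f}")
    print(f"erratic: {straightness(erratic):.2f}")
```

A real scoring system would presumably combine several such measures, including time and applied force, and compare them with baselines from experienced surgeons.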

A scoring feature of this type (called MScore in the dV-Trainer) “incorporates experienced surgeon data to establish proficiency-based scoring baselines” [7] and can reduce some of the subjectivity of performance evaluation. A surgeon developing a hand tremor or loss of visual depth perception who continues to practice might prompt a colleague’s subjective assessment that he or she should not be doing surgery. “That’s very difficult to do,” Berkley says, “certainly very difficult for a nurse or resident or a fellow in training to tell a lead surgeon who’s got a very high reputation in the hospital or globally that ‘maybe your skills have diminished and you shouldn’t be doing surgery’.” For much of the field’s admittedly short history, simulators most often played the role of patient. In this case, the simulator becomes a colleague.

Simulation from Multiple Angles

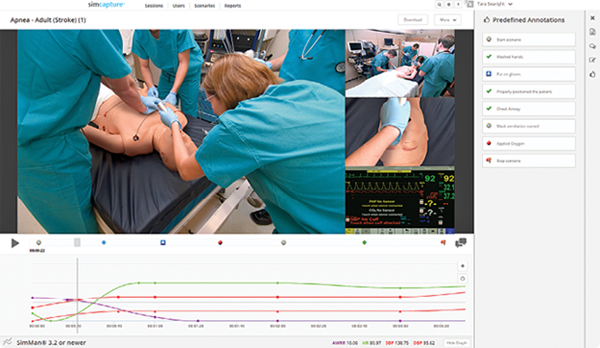

There are other ways that simulation allows health care practitioners to gain an outside perspective on their performance. Video recordings of simulations have been integral to the participants’ debriefs following the session. More accurate than memory, they record what actually happened: In what order did we take those three steps? Did you ask me to do that, or did I just do it? How did that instrument end up in the wrong place? The video recording removes some of the discrepancies in perception, and the practice is as valid now as when it was first introduced decades ago. Only now the technology for capturing a training is more sophisticated and less labor-intensive. Digital platforms such as SimCapture, CAE’s Replay, and SIMStation act as the audiovisual documentary backbone to a range of simulation types (virtual reality, manikin-based, and actors), capturing video of the trainings that instructors can annotate afterward with feedback. SimCapture recently integrated Google Glass into the platform, which extends the possible video perspectives to include the first-person view from the trainees themselves or those of the patient actors or other team members (see Figure S1).

In a demonstration video for SIMStation, another AV recording and debriefing system, a doctor-in-training remarks during a simulation debrief how interesting it is to see himself from the outside—“what I act like, how I give orders to people.” It may sound like a simple enough observation—something anyone who’s seen themselves on video might say, but the importance of communication on health care teams has come to the forefront as a focus area for reform in the effort to reduce preventable patient deaths (failures of teamwork and communication are estimated to account for about 75% of medical errors and injuries [8]). Beyond the Checklist: What Else Health Care Can Learn from Aviation Teamwork and Safety details the ways that aviation transformed its culture of communication through analysis and retraining of its crews using the Crew Resource Management approach. The book insists that a similar communication reboot is urgently needed in health care, where admitting to an error is seen as a weakness. Alternately, if someone else makes a mistake, colleagues often don’t speak up for fear of reprisal. All of these negative connotations to error in medicine’s culture ironically conspire against patient safety. As part of the efforts to shift this mindset, many simulation programs are increasingly geared toward and include practice with team communication (TeamSim by Surgical Science is one example). TeamSTEPPS, the national standard for team training in health care, allows teams to safely rehearse decision making as they respond to simulated clinical scenarios in order to teach them better information sharing, conflict resolution, and awareness of roles and responsibilities.

[/accordion]

Not “Should We Use Simulation?” But “How Can We Use It Better?”

Dr. Michael Gordon, who brought patient simulator Harvey to “life” in the late 1960s, received the Pioneer in Simulation Award at the 2015 International Meeting for Simulation in Healthcare. One of his mantras about simulation effectiveness is: “It’s not the simulator, it’s the curriculum.” Sidney Dekker, who spoke at the conference hosted by the Society for Simulation in Healthcare, made a similar point. Author of The Field Guide to Understanding Human Error, Dekker cautioned the audience to “not conflate the fidelity of the simulation with the validity of the training.” It’s easy to get caught up, he said, in perfecting the fidelity of a simulation, in trying to get it closer and closer to the real thing. But, and he was emphatic, the validity of a simulation is what matters: to what degree are the skills being learned in a simulation applicable in the target situation? It seems like a simple equation: make the training as much like the real thing as possible and you will be able to handle the real thing. And yet there’s evidence that some low-fi solutions have worked as well as or better than their hi-fi counterparts, and stories of using a simulator from one field to effectively teach nontechnical skills in another (Dekker spoke of an aviation training that used a maritime simulator with worthwhile results).

This may help explain why Dr. Ribeira, CEO of SimX, is okay with the fact that trainees do not have anybody or anything to actually touch when practicing with SimX’s holographic patient. In simulations the doctors can, if so inclined, swipe their hands clean through the abdomen of the virtual patient without any realistic consequences. “The patient just stares at you,” he laughs. In the cases they design, he says, the point is not learning where or how to touch the patient—third- and fourth-year medical students know how to place a stethoscope, for instance. “What’s important for them to learn,” says Ribeira, “is in that moment, it’s important for me to listen to their heart…In reality, when you’re training physicians, a lot of what you train them on is decision making.”

Today, the question is no longer whether we should use medical simulation, but “how can we use it most effectively?” This shift in the conversation, says an editorial in the Annals of Surgery, will allow medical educators to focus on integrating simulation: when to initiate it, how to coordinate the skills laboratory with clinical experiences, what simulators to acquire, and how to use them for objective assessment and skill upkeep [5]. And, to add to all of that, we could slide in this addendum: how could simulation be used to think more experimentally and boldly to find health care solutions?

“Simulation is a great tool for exploring new concepts,” Baily remarked. Simulation, for all its focus on replicating reality to the point of precision and wonder, also has the ability to depart from strict realism. So while it may not be highly realistic for a patient to embody dozens of diseases or change their personality on a dime from “sullen” to “loquacious” [6], when the patient simulator can do that, it is truly instructive. Simulation’s excess, the chance that it departs from reality and lands in another realm, is easily a form of superpower rather than a flaw.

References

[1] To err is human: Building a safer health system. [Online].

[2] J. T. James. (2013). A new, evidence-based estimate of patient harms associated with hospital care. J Patient Saf. [Online].

[3] M. Allen. (2013). How many die from medical mistakes in U.S. hospitals? [Online].

[4] R. Hanscom, “Medical Simulation from an Insurer’s Perspective,” Acad Emerg Med., vol. 15, pp. 984–987, 2008.

[5] D. J. Selzer and G. L. Dunnington. (2013). Surgical skills simulation: A shift in the conversation. [Online]. vol. 257, pp. 594–595.

[6] The Virtual Standardized Patient Project at USC’s Center for Creative Technologies. [Online].

[7] dV-Trainer. [Online].

[8] S. Gordon, P. Mendenhall, and B. B. O’Connor, Beyond the Checklist: What Else Health Care Can Learn from Aviation Teamwork and Safety, Ithaca, NY: Cornell University Press, 2013.