Over the years, air safety has improved greatly because the skills of pilots, and the ability of teams to work together in a crisis, are regularly reinforced through simulator-based training and assessment. In medicine, simulation methods increasingly show promise for attaining similar results. According to the United Kingdom’s Chief Medical Officer’s (CMO) report of 2008, studies of simulation training for surgical skills have shown that surgeons trained in this way make fewer errors and carry out technically more exact procedures. However, at that time, there were 3,200 pilots in the United Kingdom with access to 14 high-fidelity simulators. By comparison, there were 34,000 consultants and 47,000 doctors in training, including 12,000 surgeons, with access to only 20 high-fidelity simulators.
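To put those figures side by side, a rough head count per simulator can be worked out from the numbers above. This is only a back-of-the-envelope comparison: it assumes the 12,000 surgeons are already counted within the consultant and trainee totals, and it ignores how many hours each simulator was actually available.

$$
\frac{3{,}200\ \text{pilots}}{14\ \text{simulators}} \approx 229
\qquad
\frac{34{,}000 + 47{,}000\ \text{doctors}}{20\ \text{simulators}} = 4{,}050
$$

On that crude measure, each medical simulator had to serve roughly 18 times as many people as each flight simulator.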

The CMO at the time, Sir Liam Donaldson, even went so far as to recommend that simulation-based training should be fully integrated and funded within training programs for clinicians at all stages.

“We had high hopes after the CMO’s report was published in 2008,” said Dr. Andrew McIndoe, vice chair of the Bristol Medical Simulation Centre (BMSC) (Bristol, United Kingdom), which at the time was one of only a handful of dedicated medical simulation centers in the United Kingdom. “But because of the way the National Health Service works, staff wanting to attend simulation courses struggle to be released from service commitments and so take-up of simulation-based training has been slower than anticipated.”

Currently, the BMSC gives health care professionals access to 12 medium-/high-fidelity patient manikins in four audio-visual-monitored simulation suites, including a fully equipped operating theater, anesthetic room, collapsible ambulance, and six-bed high-dependency ward (Figure 1). It offers 107 different courses and provides training to around 1,300 candidates each year. But McIndoe admits that, for many disciplines, simulation is a relatively expensive way of training health care workers.

“There are many procedures that you would not want to test on real patients, so the candidates benefit enormously from being able to practice on manikins, especially our high-fidelity manikins, which have everything you would expect from a real patient such as cardiovascular and respiratory systems,” said McIndoe. “But each one costs upwards of £150,000, and they are expensive to maintain. For example, we regularly have to replace the heads, which cost around £10,000 each. The high-fidelity manikins are also not mobile, so health care workers have to come to our facility to do the training.”

Even so, getting hands-on practice with manikins has its advantages. Students can listen to heartbeats, take blood pressure, move and manipulate the manikins, and even get covered in blood or other bodily fluids (Figures 2 and 3). But manikins have their limitations too. They cannot interact as an actor or a real patient might, and some of the internal organs of the manikins are not well represented.

Virtually Real

This is where another type of simulation technology comes in. Virtual reality used to be the domain of the gaming industry but is increasingly finding its way into medical training. The BMSC does not have any virtual reality training tools, but McIndoe admits that, for certain applications, this technology could have its advantages.

“Training for robotic and laparoscopic procedures now often takes place on virtual reality training tools,” said McIndoe. “But, as with any surgical training tool, it is essential that the haptic feedback is realistic.”

For example, the da Vinci Surgical System from Intuitive Surgical (Sunnyvale, California) uses high-resolution three-dimensional (3-D) cameras to enable doctors to perform delicate operations remotely, manipulating tiny surgical instruments (see also Sarah Campbell’s article “Fidelity and Validity in Medical Simulation” in this issue of IEEE Pulse). When developing the system, the company focused on giving surgeons better vision because the necessary touch for operating on soft tissue, such as organs, was beyond the capability of haptics technology. Training on how to use the da Vinci Surgical System is often carried out using virtual reality, allowing users to practice using the system before performing real operations. In both the virtual reality training and the real operations, the haptic feedback technology is improving. And rivals are coming on the market. The Italian company SOFAR (Milan, Italy) has launched the Telelap ALF-X, which features haptic feedback, allowing the surgeon to indirectly “feel” the tissues that are being manipulated. The system also tracks the surgeon’s eye movements, positioning the camera so that the field of view is centered where the eyes are looking.

Another technology developed for gaming that promises to revolutionize medical training is immersive virtual reality, in which the user feels physically present in the situation, moving around and manipulating a virtual world. Until recently, this type of technology was expensive and developed only in well-funded research laboratories, but the Oculus Rift has changed all that. This immersive gaming headset, which was launched using Kickstarter crowdfunding back in 2012, has opened up an entirely new world of opportunities for a variety of industries, including medical training.

Surgevry (Paris, France) is a company that has been set up specifically to use the Oculus Rift for training surgeons. Surgeons usually learn by watching colleagues and then performing an operation with guidance from a senior colleague, but watching someone else does not give you the same perspective as actually performing the operation (Figure 3). So Rémi Rousseau, development lead at Surgevry, and his colleagues decided to attach stereoscopic cameras to a surgeon and film an operation from the surgeon's own perspective. The footage is then viewed by the trainee surgeon using an Oculus Rift headset.

“While this is not simulation, it is a powerful tool as it immerses the user within the situation,” said Rousseau. “When the user turns their head, they get a different view. The system can also be used to visualize additional information such as MRI scans. It is a highly mobile system, and we have had some great feedback from surgeons who have tried it out.”

Research has shown that, like athletes who visualize winning a race before starting it, surgeons perform better when they have visualized a procedure. “We hope that our technology will help improve the way surgeons are trained,” said Rousseau.

On the Battlefield and in the Hospital

Anyone with a development kit can develop applications for the Oculus Rift. This includes Dr. Harry Brenton, director of the start-up company BespokeVR (Cambridge, United Kingdom). “There are five things that make virtual reality feel real: resolution, tracking, optics, latency, and image persistency, the length of time an image is exposed to the retina. The Oculus Rift is the first commercial device that has a realistic chance of solving these challenges and to give the user a feeling of ‘presence,’” said Brenton. “It is also affordable, easy to use, and developing applications for the Oculus Rift is relatively straightforward.”

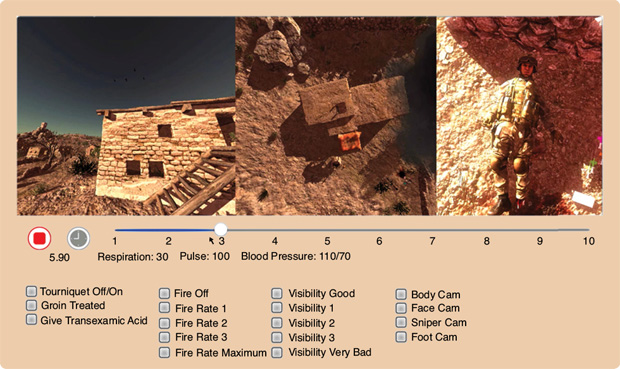

Brenton is working on a variety of projects all aimed at the medical field. For example, he previously collaborated with Plextek Consultancy (Great Chesterford, United Kingdom) and the U.K. government’s Defence Science and Technology Laboratory (DSTL, Porton Down, United Kingdom) to create an immersive simulation training system for the military using the Oculus Rift. This smart solution can simulate prehospital care on the battlefield and allows trainees to negotiate and prioritize clinical needs, teaching teamwork and decision-making skills within high-stress “under-fire” scenarios.

Using the Oculus Rift, soldiers can look all around their surrounding environment, navigating with a handheld controller to head across the virtual battleground and attend to casualties (Figure 4). Multiple trainees can be located within the same setting together, and a chosen “simulation controller” can then select particular complications to add to either the wounded soldier or the environment around him/her, including decreasing the consciousness level of the injured soldier, worsening respiratory distress, or adding gunfire, an enemy sniper, or a bomb attack.
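The controller role described above can be pictured as a small piece of shared state that one person edits while every trainee sees the result. The sketch below is purely illustrative: the class names, the numeric scales, and the threat labels are all hypothetical and are not taken from the Plextek/DSTL system.

```python
from dataclasses import dataclass, field


@dataclass
class Casualty:
    """Hypothetical casualty state; the scales are placeholders, not clinical scores."""
    name: str
    consciousness: int = 4         # 4 = alert ... 1 = unresponsive (illustrative scale)
    respiratory_distress: int = 0  # 0 = none ... 3 = severe (illustrative scale)


@dataclass
class Scenario:
    """Shared scene that every trainee in the session sees."""
    casualties: dict = field(default_factory=dict)
    threats: set = field(default_factory=set)
    log: list = field(default_factory=list)

    def add_casualty(self, casualty):
        self.casualties[casualty.name] = casualty

    def lower_consciousness(self, name):
        # Step the casualty one level down, never below the floor of the scale.
        c = self.casualties[name]
        c.consciousness = max(1, c.consciousness - 1)
        self.log.append(f"{name}: consciousness -> {c.consciousness}")

    def worsen_breathing(self, name):
        # Step respiratory distress one level up, capped at the top of the scale.
        c = self.casualties[name]
        c.respiratory_distress = min(3, c.respiratory_distress + 1)
        self.log.append(f"{name}: respiratory distress -> {c.respiratory_distress}")

    def add_threat(self, threat):
        # Environmental complications, e.g. "gunfire", "sniper", "bomb".
        self.threats.add(threat)
        self.log.append(f"environment: {threat}")


# The simulation controller escalates the exercise step by step.
scenario = Scenario()
scenario.add_casualty(Casualty("casualty_1"))
scenario.lower_consciousness("casualty_1")
scenario.worsen_breathing("casualty_1")
scenario.add_threat("sniper")
```

Keeping a log of each change is one plausible design choice, since it would let instructors replay the decisions trainees faced during a debrief.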

Brenton is also working on a virtual stroke patient in collaboration with researchers from Imperial College London (Figure 5). “Manikins cannot be used to simulate [a] stroke and they cannot have a conversation with the clinician. Actors also struggle to convey the symptoms of a stroke,” said Brenton. “Our virtual stroke patient can be used to train clinicians to diagnose different types of stroke. At the moment, our prototype simulates the facial and body movements of a stroke patient and also allows the clinician to see beneath the skin, at the anatomy of a stroke, and to translate anatomical theory into human movement and behavior.”

Stroke patients often have impaired speech, so Brenton has concentrated on simulating body language, but he believes a virtual stroke patient could eventually be trained to have a conversation with a clinician. “But simulating natural language dialogues is a challenging task,” says Brenton. “It could take up to a year to program a virtual patient to have a convincing, free-flowing one-minute conversation.”

Both the military and stroke projects are at the prototype stage, but progress is fast. “Until recently, it was not possible to see your own hands while using the Oculus Rift,” said Brenton. “But after purchasing a simple hand-tracking controller—the Leap Motion—I have been able to incorporate the users’ hands into the stroke application relatively quickly. This would also be ideal for other medical applications such as surgery.”

Regional Accuracy

The virtual stroke patient is an excellent example of virtual reality doing something that other forms of simulation or training cannot achieve. Another example is the Regional Anaesthesia Simulator and Assistant (RASimAs) being developed by a consortium of 14 academic, clinical, and industrial partners from ten different European countries.

“Regional anesthesia should be applied more frequently than it currently is,” says Prof. Thomas Deserno from the Department of Medical Informatics at Uniklinik RWTH (Aachen, Germany), who is leading the project. “Patients recover more quickly from a regional anesthetic than a general one, and it’s also a more cost-effective form of anesthesia. But administering regional anesthesia is challenging, and it is difficult to learn. The drug needs to be delivered within the body close to the nerve, but the nerves, arteries, and veins must not be perforated. If the needle is not close enough, there is no effect, but if the needle is too close, the nerve may be harmed, resulting in irreversible loss of mobility or sense. We are developing a simulator that will train anesthetists to effectively administer regional anesthetics.”

The procedure requires the clinician to locate a specific nerve with a needle inside the patient, usually guided by ultrasound or electrical stimulation (Figure 6). Currently, it is taught on cadavers or manikins, or simply learned by practicing on patients.

“Our simulator uses virtual physiological human (VPH) models, and we combine these with scan data from the patient,” said Deserno. “During the procedure, the anesthetist can additionally use our virtual assistant, which maps real-time ultrasound measurements to the patient-specific model.”

For the haptic feedback that is essential for delicate procedures such as this, Deserno and his colleagues are using an off-the-shelf haptic device—the Omni device from Sensable (Wilmington, Massachusetts). “We learned from a previous project that haptic feedback is even more important than visual feedback in procedures like this,” said Deserno. “This hardware is available and it’s not very expensive; all we have to do is adapt it for our requirements.”

The main challenge faced by the researchers in this project is refining the generic virtual physiological human models and combining them with patient-specific scan data. For example, when a patient has an MRI scan, his/her arm is by his/her side. But when an axillary block is performed, this arm is moved, so the human body model needs to be reposed virtually.
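To give a feel for what reposing involves, the sketch below rotates a few arm landmarks about the shoulder, as if lifting the arm out to the side. It is a deliberate oversimplification and not the RASimAs method: a real model would also have to deform soft tissue, nerves, and vessels. The function name, the axis convention, and the coordinates are assumptions made for illustration.

```python
import math


def abduct_arm(points, shoulder, angle_deg):
    """Rotate arm landmarks about the front-to-back axis through the shoulder.

    points and shoulder are (x, y, z) tuples in millimetres, with x pointing
    sideways, y pointing up, and z pointing front to back (assumed convention).
    """
    theta = math.radians(angle_deg)
    cos_t, sin_t = math.cos(theta), math.sin(theta)
    sx, sy, _ = shoulder
    reposed = []
    for x, y, z in points:
        # Work relative to the shoulder, rotate in the x-y plane, shift back.
        dx, dy = x - sx, y - sy
        reposed.append((sx + dx * cos_t - dy * sin_t,
                        sy + dx * sin_t + dy * cos_t,
                        z))
    return reposed


# Two made-up landmarks on an arm hanging at the patient's side.
shoulder = (0.0, 0.0, 0.0)
arm_at_side = [(0.0, -150.0, 0.0), (0.0, -300.0, 0.0)]

# Lift the arm 90 degrees out to the side; the landmarks now lie along x.
arm_abducted = abduct_arm(arm_at_side, shoulder, 90.0)
```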

“We aim to complete the simulation and assistance systems by the end of this year,” said Deserno. “And then, we plan to conduct clinical trials of each component to prove that clinicians trained or assisted with our system perform more successful procedures than those educated conventionally.”

At Bournemouth University in the United Kingdom, researchers are also using haptic feedback in the development of an advanced epidural simulator. Epidural analgesia and anesthesia are used to manage operative, chronic, and childbirth pain. The simulator being developed at Bournemouth will help train anesthetists by realistically simulating the complete procedure, starting with the needle advancing through the skin and ligaments, between the vertebrae, and into the epidural space. The first prototype uses a Novint Falcon haptic device connected to a 3-D modeled graphical simulation.
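One simple way to think about the haptic side of such a simulator is as a lookup from needle depth to the resistance the device should push back with. The sketch below assumes a stack of layers with fixed thickness and resistance. Every number in it is an illustrative placeholder, not a measurement, and the function names are hypothetical rather than part of the Bournemouth prototype.

```python
# Tissue layers in insertion order: (name, thickness in mm, resistance in newtons).
# Every number here is an illustrative placeholder, not a measured value.
LAYERS = [
    ("skin", 3.0, 6.0),
    ("ligaments", 30.0, 10.0),
    ("epidural space", 5.0, 0.5),
]


def layer_at(depth_mm):
    """Return (name, resistance) for the layer containing the needle tip."""
    boundary = 0.0
    for name, thickness, resistance in LAYERS:
        boundary += thickness
        if depth_mm < boundary:
            return name, resistance
    # Deeper than anything this toy model describes.
    return "beyond model", 0.0


def resistance_at(depth_mm):
    """Resisting force the haptic device should render at this depth."""
    return layer_at(depth_mm)[1]


# Print the layer and resistance at a few sample depths.
for depth in (1.0, 20.0, 35.0):
    name, force = layer_at(depth)
    print(f"{depth:5.1f} mm  {name:15s} {force:4.1f} N")
```

The sharp drop in resistance at the last layer mirrors the loss of resistance that anesthetists are taught to feel for on entering the epidural space, which is why realistic force rendering matters so much for this procedure.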

For this project and others, such as RASimAs, to succeed, it is important that they have accurate physiological models of the human body. Initiatives such as the Virtual Physiological Human Institute for Integrative Biomedical Research in Belgium (VPH Institute) are attempting to provide just that. The VPH Institute is an international nonprofit organization whose mission is to ensure that the virtual physiological human is fully realized, universally adopted, and effectively used in both research and clinics.

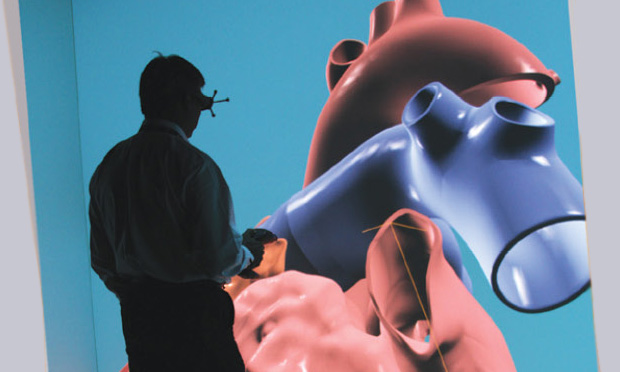

The French company Dassault Systèmes is involved in modeling an important part of this virtual human—the heart. Through its Living Heart Project, the company hopes to revolutionize cardiovascular science. Steve Levine, director of the Living Heart Project, believes the technology could be rolled out to medical device developers and used to evaluate real patients by early 2016.

“We can now simulate a basic heartbeat in less than two hours on a workstation that sits by my desk,” says Levine (Figure 7). With such a realistic model, a variety of treatment options can be evaluated quickly in simulation, and the software will identify the best ones to use. The doctors would then use their judgment to decide the ultimate course of action, but the software would have filtered out 98% of the options.

While progress on projects such as these has been staggering in recent years, we are still a long way from the Holy Grail of a true virtual physiological human. As these simulation techniques become more refined and more health services see the advantages of training with them, perhaps this technology will become fully integrated and funded within training programs for clinicians at all stages, just as the U.K. CMO recommended more than seven years ago. Isn’t it time medicine caught up with the training standards of the aviation industry? After all, both professions hold people’s lives in their hands.

For More Information

- BespokeVR. [Online].

- VPH Institute. [Online].

- Dassault Systèmes. [Online].

- RASimAs. [Online].

- Surgevry. [Online].

- Bristol Medical Simulation Centre. [Online].