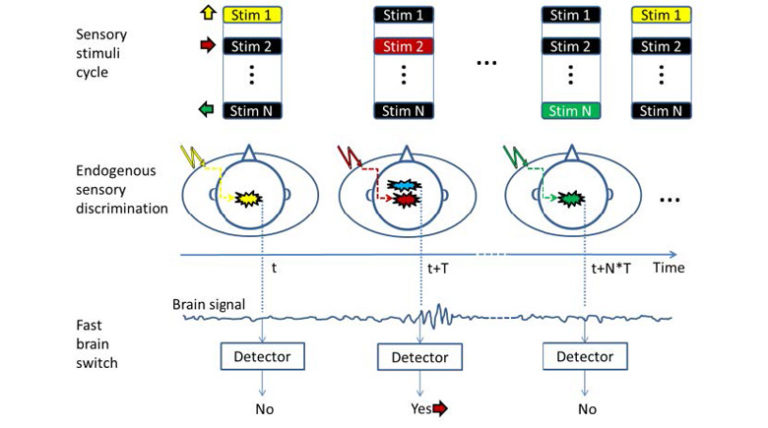

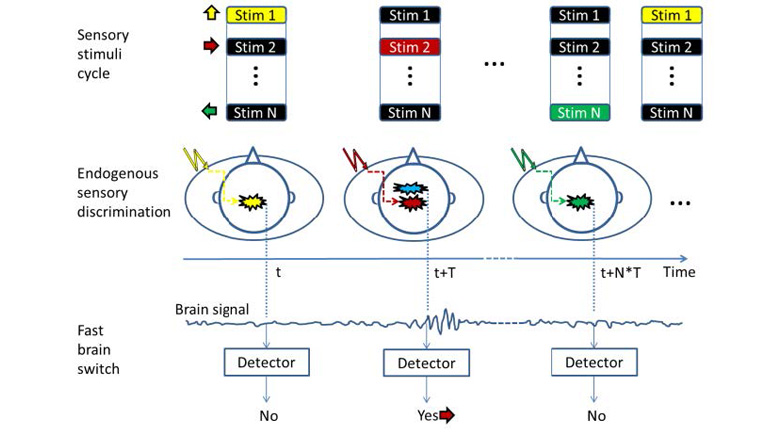

In this study, we present a novel multi-class brain-computer interface (BCI) system for communication and control in which information processing is shared between the algorithm (computer) and the user (human). Specifically, an electro-tactile cycle was presented to the user, with each choice (class) conveyed by a sensory input delivered at a specific time within the cycle. The user discriminated among these choices using his or her endogenous sensory ability and selected the desired choice by performing an intuitive motor task. This selection was detected by a fast brain switch based on real-time detection of movement-related cortical potentials from scalp EEG. We demonstrated the feasibility of such a system with a 4-class BCI. For the movement and imagined-movement selection commands, it yielded true positive rates of ~80% and ~70% and information transfer rates of ~7 bits/min and ~5 bits/min, respectively. Furthermore, when the system was extended to 8 classes, its throughput improved, demonstrating its capacity to accommodate a large number of classes. By combining endogenous sensory discrimination with the fast brain switch, the proposed system could serve as an effective, multi-class, gaze-independent BCI for communication and control applications.
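The information transfer rates above are reported in bits/min, but the abstract does not say which ITR definition was used. A common choice for N-class BCIs is the Wolpaw formula, B = log2 N + P log2 P + (1 - P) log2((1 - P) / (N - 1)) bits per selection, scaled by the number of selections per minute. The sketch below assumes that formula and treats P as the selection accuracy. The study reports true positive rates, which are not necessarily the same quantity. The function names and the `seconds_per_selection` parameter are hypothetical and are not taken from the study.

```python
import math


def wolpaw_bits_per_selection(n_classes: int, accuracy: float) -> float:
    """Bits per selection under the Wolpaw model.

    Assumes equiprobable classes and errors spread evenly over the
    remaining n_classes - 1 choices (an assumption, not the study's method).
    """
    if n_classes < 2:
        raise ValueError("need at least 2 classes")
    if not 0.0 <= accuracy <= 1.0:
        raise ValueError("accuracy must be in [0, 1]")
    n, p = n_classes, accuracy
    bits = math.log2(n)
    # The limits p*log2(p) -> 0 as p -> 0 and (1-p)*log2(...) -> 0 as p -> 1
    # are handled by skipping those terms.
    if p > 0.0:
        bits += p * math.log2(p)
    if p < 1.0:
        bits += (1.0 - p) * math.log2((1.0 - p) / (n - 1))
    return bits


def itr_bits_per_min(n_classes: int, accuracy: float,
                     seconds_per_selection: float) -> float:
    """Scale bits per selection to bits per minute (hypothetical helper)."""
    if seconds_per_selection <= 0:
        raise ValueError("seconds_per_selection must be positive")
    return wolpaw_bits_per_selection(n_classes, accuracy) * 60.0 / seconds_per_selection


if __name__ == "__main__":
    # Illustrative inputs only; the study's cycle duration is not given here.
    for n in (4, 8):
        print(n, "classes:", round(wolpaw_bits_per_selection(n, 0.8), 3), "bits/selection")
```

Under this model, the per-selection ceiling is log2 N, which rises from 2 bits for 4 classes to 3 bits for 8 classes. This illustrates one way that adding classes can raise throughput even if accuracy or cycle length changes somewhat. Whether that matches the study's 8-class result depends on its actual timing and accuracy figures.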