In a 2013 TEDxMidAtlantic talk, a robot wheels onstage, displaying the face of Henry Evans, a mute and quadriplegic technology enthusiast located 3,000 miles away. In 2002, Evans, a former Silicon Valley executive, suffered a stroke at age 40 that left him unable to move or talk.

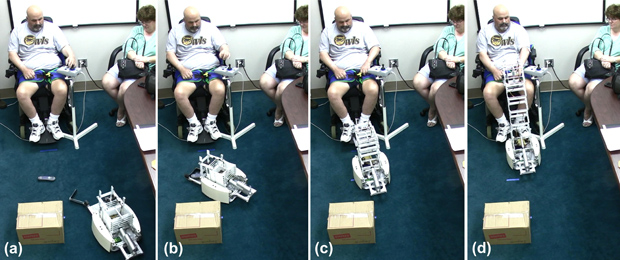

Gradually, Evans began using various technologies to translate his slight head motions to a computer cursor, allowing him to speak through an electronic voice and navigate the Internet. But in 2010, Evans found himself wanting more. That year, he began to collaborate with California-based open-source robotics company Willow Garage and other researchers to develop Robots for Humanity (R4H), which aims to adapt robots to assist the severely disabled. Through R4H, Evans has learned to fly drones and maneuver PR2, a wheeled robot with arms (below), as his surrogate, allowing him to take tours of remote locations and shave himself for the first time in 10 years.

“R4H is about using technology to extend our capabilities, fill in our weaknesses, and let people perform at their best,” Evans says.

The allure and promise of robots—physical machines able to perform tasks with some degree of autonomy and intelligence—have long captured the human imagination. From space exploration, to factory work, to home cleaning and companionship, robots have the potential to make our lives easier. And as electronics have steadily improved while dropping in price, more robots are moving into the marketplace for consumers.

“The field of human-robot interaction (HRI) is poised to revolutionize society over the next 40 years in the same way computing did over the last 40 years,” says Chad Jenkins, an associate professor at Brown University who specializes in HRI and who works with Evans through R4H.

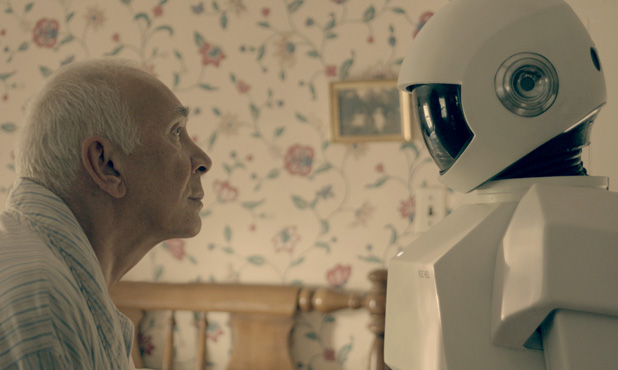

Healthcare robots for consumers, in particular, are gaining momentum. As the overall population of the world ages, the number of elderly, ill, or disabled people requiring healthcare is growing fast. Where caretakers and specialists are overwhelmed, robots may be able to fill the gap, connecting people to the services they need by providing virtual check-ups, assisting with physical tasks, and even offering emotional support and encouragement.

Checking in from Afar

Healthcare robots span the spectrum: researchers have been developing bionic limbs, exoskeletons, and even automatons that can pick people up and carry them to a hospital bed. And commercially, a range of robots populate the clinical setting, from sophisticated arms that help doctors perform minimally invasive surgery (like the da Vinci surgical system), to exoskeletons that can function as rehabilitation tools, to robotic runners that deliver medication and other supplies throughout a hospital (like Pittsburgh-based Aethon’s Tug or Switzerland-based Swisslog’s RoboCouriers).

One type of robot that seems particularly well positioned to meet rapidly expanding healthcare demands is the kind that functions as a surrogate (similar to what Evans uses). These “telepresence” robots, as they are called, allow specialists to diagnose remote patients or to check in with patients at home. They can also help protect healthcare workers from disease exposure.

Telepresence robots—generally consisting of a monitor, camera, and speakers that can be operated remotely—are more than just a conference call on wheels. Typically, they have some autonomous or semi-autonomous navigation system to prevent collisions and can often be used via a phone app or web browser. In the healthcare realm, telepresence robots need a particularly secure platform to access and share data in a way that protects patient confidentiality.

In January 2013, Massachusetts-based robotics giant iRobot and California-based telemedicine company InTouch Health announced that their RP-VITA robot (above) was the first telepresence robot to receive FDA clearance. The robot, equipped with a map of its hospital, uses lasers, sonar, and other sensors to move autonomously through a space and reach its destination without bumping into obstacles, allowing doctors to tell the robot to visit a certain patient’s room without navigating it each step of the way. RP-VITA can also connect to a stethoscope, ultrasound, EKG, and other medical diagnostic tools.

“Our systems allow medical experts to be placed at the point of need by a patient’s side instantly in cases where otherwise it is very difficult to get that expert there quickly,” says Yulun Wang, CEO of InTouch Health, adding that the company’s suite of telepresence systems is now in 1,100 hospitals and growing by a hospital a day. The company’s main application is stroke, which affects 800,000 people a year and is a leading cause of death and disability in the United States, according to the Centers for Disease Control and Prevention.

“If you’re at the right place at the right time, you can get the best healthcare in the world. But if you’re not, you don’t have access to quality healthcare or it costs too much,” says Wang. “This technology is about enabling the delivery of consistent, high-quality care, which then drives the costs of our healthcare system down.”

So what is the real advantage of a telepresence robot over, say, a computer monitor and conference call? The combination of advanced technologies and situational awareness given through the embodiment of a robot minimizes barriers to remote care, says Wang, allowing the physician to, for example, turn the robot’s head to look around, move away from the patient to have a private conversation with a nurse or family member, and even zoom in for a high-resolution real-time view of a person’s pupil.

The New Hampshire-based VGo company also develops telepresence robots for a variety of uses, including business, education, and healthcare. Its healthcare customers—ranging from Boston Children’s Hospital to the United Arab Emirates-based Amana Healthcare—use the robot to check in on patients.

New Jersey-based Children’s Specialized Hospital, for example, sends a VGo robot home (right) with medically compromised children during the first week of their return, so healthcare professionals can check in on the child’s vitals whenever needed. And Christopher Haines, Chief Medical Officer at Children’s, wrote in a recent blog post about how the VGo system allowed him to quickly evaluate a pediatric patient remotely.

VGo has also explored different kinds of health applications outside of the hospital. In September 2014, a local hospital began a pilot program with Dartmouth College to use the VGo telepresence robot during football games to monitor potential concussions. The VGo robot gives the Dartmouth-Hitchcock Medical Center a way to provide remote and real-time clinical assessments of Dartmouth players who may have suffered a concussion. And in West Seneca, New York, a boy with life-threatening allergies attended his classes at Winchester Elementary School through a VGo surrogate.

“I believe robot telepresence will really be the first entry point and building block for personal robotics in widespread use,” says Jenkins, who has begun a new project by partnering with gerontologists and a local nursing home to see how robot telepresence could help the elderly by connecting them with their loved ones, providing timely access to medical expertise, and avoiding unnecessary trips to the hospital.

While such robots sound like a boon for the medical industry and consumers, they come at a steep cost: currently the RP-VITA costs a hospital $4,000-6,000 a month to use, including technical support and its cloud-based platform. The Willow Garage robot Evans used in his TED talk goes for approximately $300,000. VGo runs about $6,000, plus a yearly subscription of around $1,000.

Aside from cost, healthcare telepresence robots may also encounter difficulties around health insurance reimbursement (especially if the doctor and patient are in different states), and a lack of compatibility with hospitals’ varied and proprietary electronic medical records and data systems. Also—as anyone who has experienced a dropped Skype call can imagine—other frustrations may emerge, along with the potential for patients to feel that they are getting second-rate care.

However, there is reason to think telepresence robots will take off in the healthcare realm. Telepresence bots have already helped us explore space (such as the Mars Rovers), disarm bombs (such as iRobot’s PackBots), and survey the Fukushima Daiichi nuclear disaster. Telepresence robots could have another benefit: protecting healthcare workers from exposure to deadly infectious diseases, such as Ebola.

In response to the growing Ebola epidemic in 2014, the White House Office of Science and Technology Policy organized a series of brainstorming meetings for researchers, industry specialists, and medical professionals to discuss how to use technology to minimize the spread of the disease. The first meeting was held 7 November 2014 at four locations: Worcester Polytechnic Institute (WPI) in Massachusetts; Texas A&M University; the University of California, Berkeley; and in Washington, D.C.

“The robotic solution is likely not replacing humans; robots would still need a human operator. But the idea is to put a system in between the virus and the healthcare worker that can still do some of the caretaking tasks,” says Taskin Padir, an assistant professor of robotics at WPI who is one of the organizers of the brainstorming sessions.

Padir says that, while the technology is far from having robots go into the home to take care of quarantined patients, tele-operated systems could still be effective in the structured environment of a hospital. Aside from delivering medicine and having doctors remotely check in on patients, robots could also be deployed to disinfect materials or sterilize facilities. However, many challenges may arise, including that the technology will need to operate in hot, humid locales without reliable Internet connections and will need to integrate with mission-based teams who may not have the hours needed to train with a robot.

Despite the barriers, telepresence robots are already being deployed to fight Ebola. A Massachusetts-based company called Vecna (through its extension, Vecna Cares) has traveled to countries in Africa to deliver electronic records and other health technology, including a VGo robot. And according to Wang, the Robert Wood Johnson hospital in New Jersey is planning to use an RP-VITA in the isolation ward with future Ebola patients.

“Robotics has been growing exponentially and robots that can work with humans are a national focus here in the United States,” Padir adds. “We want to think about addressing these problems now so we can also make an impact when the next disease outbreak occurs.”

Your Home Assistant, and a Friend

Outside of the hospital, the greatest potential for healthcare robots to make a difference is in the home. Robots that can operate entirely independently—as opposed to a telepresence robot—could help with housekeeping tasks and provide monitoring and support for those who are elderly, disabled, or sick.

“Robots with social capabilities and/or specialized manipulation capabilities, such as transporting objects on a tray, can make a positive impact via consumer products in the near term,” says Charlie Kemp, professor and director of the Healthcare Robotics Lab at Georgia Tech and a collaborator at R4H. Kemp developed a robot called Dusty (right, below), which can pick up objects from flat surfaces (such as the floor) to deliver them to people. He also created a tactile-sensing robotic arm that can gently brush past obstacles to reach its goal, which would let a robot operate in a realistic, cluttered home environment. His lab has also looked at the potential for robots to deliver water and medicine to people at home to improve hydration and adherence to drugs.

Kemp is optimistic about home assistant robots with arms, but says that because of their greater complexity and cost, they will take longer to become widespread than products like telepresence robots.

“In collaboration with others, my lab has demonstrated the feasibility of general-purpose human-scale robots for 24/7 in-home assistance. We’ve also found that the participants in our studies, including older adults, nurses, and people with disabilities, are remarkably positive about this type of robot providing help,” says Kemp. “However, feasibility is not enough. We are continuing to investigate how to make these robots more useful and easier to use; for example, we are working to endow robots with more common sense about the world.”

So far, efforts to develop a bionic butler or housekeeping robot haven’t moved far beyond the research realm, although there have been impressive results. In addition to Kemp’s fetching robots, other research groups have developed bots that can fold towels or wash dishes. While these ongoing successes in robotic vision, processing, and dexterity are critical, few robots have succeeded as commercial home assistants (aside from iRobot’s Roomba).

Aside from carrying out physical tasks, other efforts have focused on home robots that act more as a friend to discuss fitness goals or encourage users to take medicine, for example. Developing robots that act and respond more humanly—that is, by imitating and interpreting emotional and social cues—could bridge the gap in personal and health robotics, making them more efficient, enjoyable, and useful.

Japan has been notable in its attempts to incorporate friendlier, emotion-eliciting robots. Paro, a robot that approximates a baby seal, has been used in nursing homes to provide comfort to the elderly, with mixed results. And in June 2014, Japan-based Softbank announced its companion home robot called Pepper, which will go on sale in Japan in 2015 at around US$2,000. With the ability to navigate, arms and hands for gesturing, and an array of sensors and voice recognition for communication, Pepper is the newest in a line of similar humanoid robots, such as the Nao and Honda’s Asimo. Pepper is equipped to tell jokes and banter with humans, beginning to incorporate social behavior.

Cynthia Breazeal, an associate professor at the Massachusetts Institute of Technology (MIT) and leader in the field of social robotics, believes that if done right, robots can leverage social cues to enhance communications and become more effective in helping humans. In July 2014, she announced what she calls the first social robot for the home, dubbed Jibo (below). In a widely publicized video, Jibo is seen automatically detecting faces and taking photos at a party, responding to inquiries, telling stories to kids and, in the future, potentially ordering take-out.

“Jibo is the commercialization of a meta concept of what I’ve been discovering during my many years of research,” says Breazeal. “While the traditional view of robotics focuses on a technology that does physical work, I’ve found that robots are also a profound social and emotional experience that can support people more like a someone than a something.”

After a crowd-funded campaign, Breazeal expects to deploy the first round of open-platform Jibos for hundreds, rather than thousands, of dollars at the end of 2015 to third-party developers before a wide release the following year. Similar to Autom, a social robot developed in her lab that was later commercialized with limited success for weight-loss assistance, Jibo could someday have a health-coaching component, says Breazeal, as well as many other possible new applications.

Ultimately, Breazeal believes social robots can enhance, rather than intrude on, interpersonal relationships to improve well-being. “Rather than technology that’s always beeping and buzzing at us, we need technology that fits into our human values,” she says. “These robots can feel more like someone who has your back versus a tool you poke at. It’s a fundamentally different experience that can lead to improved human performance and outcomes.”