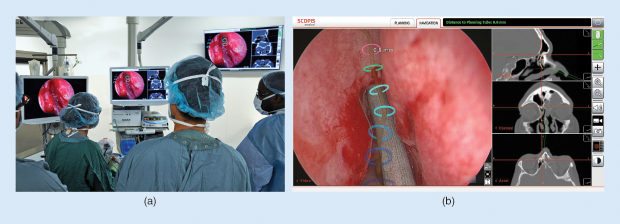

In March 2017, Dr. Marc Tewfik performed a delicate operation to remove cancerous tissue from a patient’s sinus cavity, something that he has done many times before at the McGill University Health Center (MUHC) in Montréal, Canada. But this time, things were a bit different. Before performing such a minimally invasive surgery using a thin laparoscopic device inserted through the nose, the exact surgical plan was worked out in meticulous detail. Tewfik (Figure 1) used magnetic resonance imaging (MRI) scans to plot a pathway for the surgical tools to reach the target tissue without endangering arteries or nerves in an area just fractions of an inch from the optic nerve and from the brain itself.

A Preplanned View of the Procedure

Preplanning is always a part of such operations, but this time, Tewfik had a new advantage: as he wielded the instruments while watching the live image feed from the laparoscope, he was, for the first time, seeing the preplanned pathway, in color, overlaid in precise registration with the live image. Any tiny deviation of his movements from the surgical plan was instantly highlighted in a different color, making it readily apparent.
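To make the idea of deviation highlighting concrete, here is a minimal Python sketch. It is not the Scopis implementation, whose internals the article does not describe. It assumes the planned pathway is stored as a list of 3-D waypoints in millimeters and that the tracked instrument tip is available in the same coordinate frame. The function names, the 1 mm tolerance, and the green/red color choice are all hypothetical.

```python
import math

def distance_to_segment(p, a, b):
    """Shortest distance from point p to the line segment a-b (3-D tuples)."""
    ab = [b[i] - a[i] for i in range(3)]
    ap = [p[i] - a[i] for i in range(3)]
    ab_len_sq = sum(c * c for c in ab)
    if ab_len_sq == 0.0:
        # Degenerate segment: both endpoints coincide.
        return math.dist(p, a)
    # Project p onto the segment and clamp the projection to its endpoints.
    t = sum(ap[i] * ab[i] for i in range(3)) / ab_len_sq
    t = max(0.0, min(1.0, t))
    closest = [a[i] + t * ab[i] for i in range(3)]
    return math.dist(p, closest)

def deviation_from_path(tip, path):
    """Distance from the instrument tip to the nearest point on the planned path."""
    if len(path) < 2:
        raise ValueError("path needs at least two waypoints")
    return min(
        distance_to_segment(tip, path[i], path[i + 1])
        for i in range(len(path) - 1)
    )

def overlay_color(tip, path, tolerance_mm=1.0):
    """Pick an overlay color: 'green' on plan, 'red' once past the tolerance.

    The tolerance and the colors are illustrative assumptions only.
    """
    return "green" if deviation_from_path(tip, path) <= tolerance_mm else "red"

# Hypothetical planned pathway with one bend, coordinates in millimeters.
planned_path = [(0.0, 0.0, 0.0), (10.0, 0.0, 0.0), (10.0, 10.0, 0.0)]
on_plan = overlay_color((5.0, 0.5, 0.0), planned_path)
off_plan = overlay_color((5.0, 3.0, 0.0), planned_path)
```

A real system would run a check like this on every tracked frame and would depend on the accuracy of the image registration, which this sketch takes for granted.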

As he worked, a group of medical students watched the whole process in real time, witnessing exactly the same view the doctor was seeing and hearing his comments as he proceeded with the surgery (Figure 2). Thanks to the augmented reality (AR) system, overlaying real, immediate imagery with stored digital data, both the doctor and the students received a clearer, more immediate view of precisely what happened.

And that’s not all. Every detail of the process was also recorded, so not only this group of students but also many others for years to come can share and relive that same surgical experience and learn from one of the leading practitioners of this particular procedure.

“It’s going to be very useful,” Tewfik says of this AR-enhanced surgery, which used a system developed by Berlin-based Scopis (Figure 1, above). The system, which Tewfik was the first surgeon in North America to use for such procedures, will help, he explains, “… in terms of teaching surgeons how to safely navigate through the sinuses, where you’re working very close to a lot of important structures… The potential for really devastating complications in this surgery is always present.” That makes it hard to teach such procedures well, he says: “You need to be able to teach these techniques, but you also need to maintain patient safety while you teach the new surgeons. This will really help with teaching what the important structures are, and where to put the instruments.”

AR For Educational Applications

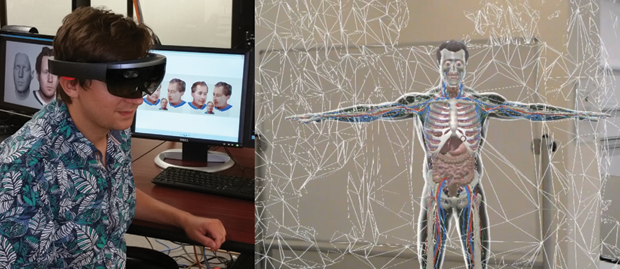

The idea of using some form of AR for surgical applications and medical education has been around for a long time, but the technology has really only begun to catch up to that vision within the last few years—and even now, most of the systems that are being touted are still in the prototype stage or undergoing testing. While using such systems in actual surgery requires the highest levels of safety and reliability, the educational potential in situations that don’t put patients at risk is already very real and beginning to have an impact. “I believe that education of health professionals is going to be the area where we are going to see the most traction for [AR] within the next few years,” says Anya Andrews, the director of research initiatives and an associate professor of medicine at the University of Central Florida (UCF) College of Medicine (Figure 3, right) in Orlando.

Andrews explains that AR “… has a tremendous potential to create powerful, contextually rich learning experiences for students and trainees. The AR presents an opportunity to develop a new understanding through exploration, which supports both knowledge acquisition and skill development.” But, she continues, there are still limitations even in this area. “Today, most learning solutions involving [AR] follow the traditional style of ‘see one, do one, teach one.’ For instance, the most recent integrative review of [AR] in medical education by a team of researchers from Europe and China has concluded that most current solutions (a lot of which were prototypes) lack explicit pedagogical frameworks that would guide the design of the applications.”

But there are some promising educational uses already taking place, she adds. At UCF, “Our educational technology team recently created a ‘Human Body’ app using Oculus Rift, where students take a journey through the bloodstream during their anatomy course” (Figure 4). Similarly, students at Case Western Reserve University are taking an AR-enabled anatomy course, which takes advantage of the Microsoft HoloLens, a head-mounted AR platform, to enable them to study the human body’s different systems in 3-D, adding or removing the muscles, nerves, and circulatory systems at will to see how they interrelate (Figure 5).

Surgical Applications

For surgeries like the recent ones at McGill, which Tewfik says he has now performed about ten times, the transition is easier and the application more immediate because such surgeries are already carried out through the use of a computer screen to display the placement and use of the surgical instruments. Adding AR overlays is relatively straightforward—the key technological issue being proper registration of the images, a task that has various solutions depending on the parts of the body being worked on.

Nearby in London, Ontario, Terry Peters is working on a system to help guide delicate heart surgery, involving repairs to the mitral and aortic heart valves. Peters, who is a professor of medical imaging, medical biophysics, and biomedical engineering at Western University, is developing an AR system based solely on ultrasound imaging overlaid on the minimally invasive surgery screen. He hopes this system, still in the early stages, can ultimately be applied to other areas, such as brain surgery.

Tewfik compares such systems to the digital aids that people are increasingly using to navigate their travel. Like a car’s global positioning system, these tools provide real-time, turn-by-turn guidance. But in this case, like a heads-up display projected onto the car’s windshield, you can consult the directional information “without ever taking your eyes off the road,” he says.

Besides its clear advantages for teaching, the system’s precise positioning information “can help you speed up the surgery a lot. These are multiple-hour surgeries,” Tewfik says, so reducing the time is better for patients and surgical staff alike. And yet it fits in seamlessly with the real-time imagery on a display screen that surgeons have been using for decades for laparoscopic surgery. “It’s just sort of an evolution of the existing navigation system,” Tewfik notes.

Although it’s not appropriate for every kind of procedure, he says, “For probably 40% of all the surgeries I do, this could be quickly adopted as an extra technology.” And it could extend to other kinds of surgery, including brain surgery, he continues, though that may take a bit longer. “It’s just starting, it’s in its infancy really.”

But one thing the system does very well, he says, is to provide a simple and intuitive user interface, making it easy to learn. “Any surgeon who isn’t particularly gifted with computers will be able to learn this easily,” he concludes.

Scopis is also developing a head-mounted version of the system, which could be used for more traditional surgeries with an open incision, allowing the MRI, computed tomography scan, or other imagery to be seen from the surgeon’s perspective, as if it’s projected onto the body itself—and the color and transparency of that overlay can be adjusted to make it easy to compare the real scene with the imagery.

Other Developments with AR

A variety of devices and software systems along those lines are being used or developed to provide AR experiences for different kinds of surgery and surgical education. Many use existing consumer-oriented platforms, such as the now-discontinued Google Glass or the recently introduced Microsoft HoloLens. A company based in New York offers a system called AccuVein, which assists with injections by projecting an image of the patient’s vasculature right onto the skin.

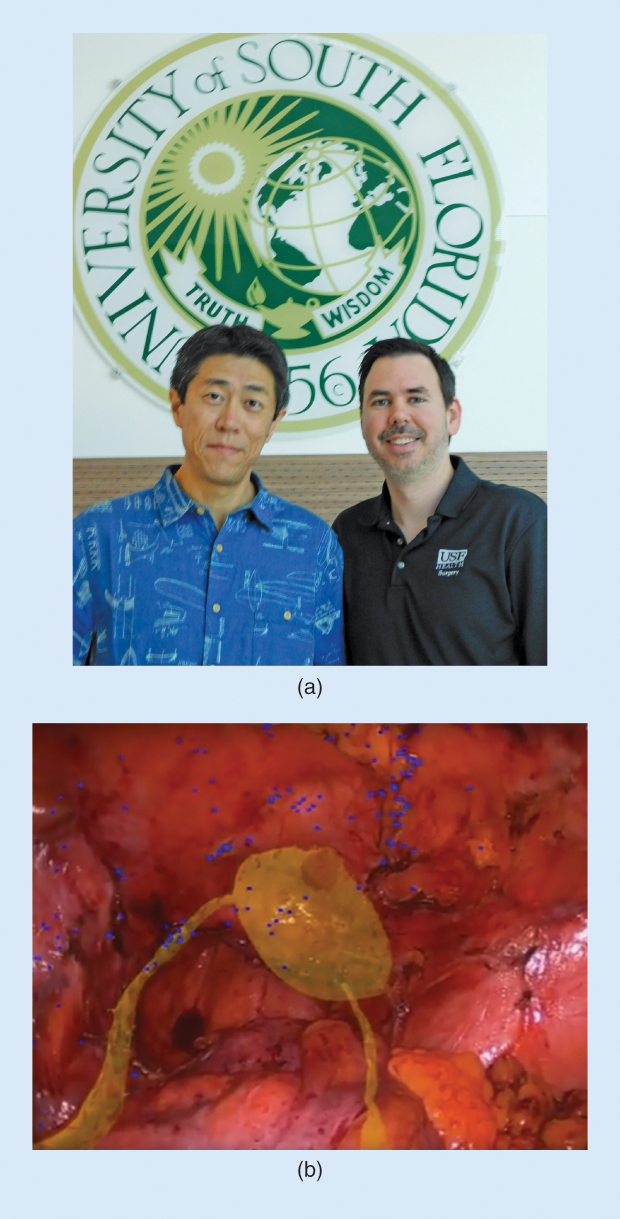

Over at the University of South Florida’s Morsani College of Medicine in Tampa, Dr. Jaime Sanchez [Figure 6(a)] is part of a team that has been developing another AR surgical system, one designed to be flexible enough to use in many different kinds of surgery, including on relatively soft and flexible tissues, such as those in the abdominal cavity. It uses a novel system for identifying reference points that can be used to tie together the real patient with the stored imagery. The system, parts of which have been patented, with other patents pending, uses an original approach to identifying specific structures within the pattern of branching blood vessels, which can function rather like the identifying points in a fingerprint to provide reference markers for alignment.
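The general idea of aligning stored imagery to a live view from matched reference points can be illustrated with a short Python sketch. This is not the USF team's patented method, which the article does not detail. It is the textbook least-squares rigid fit (rotation plus translation) in two dimensions, and it assumes the vessel branch points have already been detected and paired between the two images. The function names and the sample coordinates are hypothetical.

```python
import math

def fit_rigid_2d(src, dst):
    """Least-squares rotation angle and translation mapping src points onto dst.

    src and dst are equal-length lists of matched (x, y) landmarks, for
    example branch points in the stored image and in the live image.
    """
    n = len(src)
    if n != len(dst) or n < 2:
        raise ValueError("need at least two matched landmark pairs")
    # Centroids of both landmark sets.
    sx = sum(p[0] for p in src) / n
    sy = sum(p[1] for p in src) / n
    dx = sum(p[0] for p in dst) / n
    dy = sum(p[1] for p in dst) / n
    # Accumulate cross and dot terms of the centered coordinates.
    cross = 0.0
    dot = 0.0
    for (x, y), (u, v) in zip(src, dst):
        x, y, u, v = x - sx, y - sy, u - dx, v - dy
        cross += x * v - y * u
        dot += x * u + y * v
    theta = math.atan2(cross, dot)
    c, s = math.cos(theta), math.sin(theta)
    # The translation carries the rotated source centroid onto the target centroid.
    tx = dx - (c * sx - s * sy)
    ty = dy - (s * sx + c * sy)
    return theta, (tx, ty)

def apply_rigid_2d(theta, translation, point):
    """Rotate a point by theta (radians), then translate it."""
    c, s = math.cos(theta), math.sin(theta)
    x, y = point
    return (c * x - s * y + translation[0], s * x + c * y + translation[1])

# Hypothetical landmarks: the live view is the stored view rotated 90 degrees
# counterclockwise and shifted by (2, 3).
stored = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]
live = [(2.0, 3.0), (2.0, 4.0), (1.0, 3.0)]
theta, shift = fit_rigid_2d(stored, live)
```

A rigid fit like this cannot follow soft tissue as it deforms, so a system intended for abdominal surgery would need something more elaborate. The sketch only shows how a handful of matched landmarks is enough to pin down an alignment.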

One of their early uses, according to Sanchez, was to model planned surgeries in a patient’s abdomen, also in close proximity to many important and delicate organs and structures. For such surgeries, he says, “When I operate on a colon, one of the first things we do is look for these structures that we need to be sure to avoid. Sometimes we spend an hour or more on that. But with this system, if it’s in register, you can see these things in place, as an overlay, right on the laparoscopic screen” [Figure 6(b)]. At the same time, it allows students not just to see what’s actually happening, as with present systems, but also to see in real time how that compares to the plan and to the known locations of blood vessels, nerves, and organs, without having to distract the surgeon with questions.

The system is especially useful for locating and homing in on a target area, such as a tumor to be removed. The opacity of the overlay can be varied continually, Sanchez explains, so “as you get closer, the overlay gets more transparent, until you’re actually just seeing the real structure of interest in front of you.”
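As a rough illustration of a distance-dependent overlay, the Python sketch below fades the overlay out as the instrument approaches the target and blends it with the live image by standard alpha compositing. The linear fade, the 30 mm starting distance, the 0.8 maximum opacity, and the function names are assumptions made for the example, not details of the USF system.

```python
def overlay_opacity(distance_mm, fade_start_mm=30.0, max_opacity=0.8):
    """Overlay opacity as a function of distance to the target.

    Full strength at or beyond fade_start_mm, fading linearly to zero
    (fully transparent) at the target. All parameter values are illustrative.
    """
    if distance_mm <= 0.0:
        return 0.0
    if distance_mm >= fade_start_mm:
        return max_opacity
    return max_opacity * distance_mm / fade_start_mm

def blend_pixel(live_rgb, overlay_rgb, opacity):
    """Alpha-blend one overlay pixel onto one live-video pixel (0-255 RGB)."""
    return tuple(
        round((1.0 - opacity) * live + opacity * over)
        for live, over in zip(live_rgb, overlay_rgb)
    )

# Far from the target the overlay dominates; at the target only the live
# image remains.
far_pixel = blend_pixel((100, 100, 100), (200, 0, 0), overlay_opacity(40.0))
at_target = blend_pixel((100, 100, 100), (200, 0, 0), overlay_opacity(0.0))
```

Any monotonic curve could stand in for the linear ramp. The point is only that opacity is tied to the remaining distance, so the real structure gradually takes over the view.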

The USF team has done some initial studies that suggest such overlay systems provide “one of the best ways to learn” to perform such surgeries, Sanchez says. But the work is still in early stages, having been carried out using a taped feed of an operation rather than one in real time. “We’re looking forward to doing testing in animal models,” he explains, as a next step.

Although it would have to go through the necessary protocols for any human testing, when they eventually use it with real patients, “I think that this would be very safe,” he claims, in part “because the surgeon doesn’t have to depend on this. It can be turned on and off. Then you can see if you can find things faster… Human trials will be very low risk.”

Sanchez says the same visualization system could be useful in surgery on solid organs as well, such as the liver. While today the surgeon can’t see anything of the internal structure until an incision is made, the AR overlay means “being able to visualize something that’s in the center of this mass, without cutting in yet… If I can look down and see what’s inside, that would be a game changer.”

Simon Karger, at Cambridge Consultants in the United Kingdom (Figure 7, right), has also been leading the development of an AR system for use in surgery and medical education applications. Unlike systems intended for laparoscopic surgery in which an overlay is added to a screen that is already the standard means for guiding surgical tools, Karger’s system uses a head-mounted display, the HoloLens, to allow the surgeon, and students, to see the internal organs and imagery projected right onto the patient’s body itself, not only during the surgery but also before any incision is made. So far, the system has been demonstrated using surgical mannequins.

“What we tried to do, using AR, was to give surgeons X-ray vision,” Karger says. And by doing so, he continues, it may be possible to train new surgeons faster and more effectively.

For medical education, he says, it has great potential, combining the experience of working with a real patient or a realistic mannequin with the ability to walk around the patient and select exactly which internal systems to reveal or hide. Students could view the whole muscular system in place, or the vascular system, or the nervous system, or the digestive system, or individual organs. For planning a surgical route, that ability to turn portions on and off could make it easier to visualize the hidden portions of a complex route through the body.

Future Challenges

But doing all that in real time for surgical applications rather than just education will take some time, Karger explains. “It’s way beyond the capacity of software today.” He says that “this kind of visualization can offer a highly enhanced surgical process,” but that’s still probably a few years off. How many? “Less than ten, more than two,” Karger estimates.

For now, the educational potential of AR systems seems to hold great promise, but it, too, is in its infancy. “Educators see the potential, but they are trying to figure out how to go beyond the ‘wow’ factor, to develop content that can be shown to really enhance the educational process,” says Andrews. “Content is always king, and we don’t have a lot of high-quality [AR] content yet.”

Even so, a study by doctors at Baylor University in Texas found that, in a group of 30 medical students exposed to an AR-enhanced training program for a specific procedure, 81% said they would want such a system in their residency program, and 93% said they expect to see such technology in the operating room of the future.

Andrews sees a bright future for such technology. “Definitely, the potential is tremendous,” she says. “The early examples that we’re seeing today are already phenomenal.”