Novel imaging devices and algorithms—including low-field MRI, smaller cameras, and better cancer grading—help to deliver more useful information

Since the discovery of X-rays in 1895, biomedical imaging has come a long way, and the pace of advances in the field is only accelerating. Research groups are progressing on many fronts, both by improving existing technologies and by developing novel imaging devices and algorithms that deliver not only better pictures but also more, and more useful, information from the image data.

Among the many research groups pushing medical imaging in new and interesting directions are three featured here: one that is helping to develop low-field magnetic resonance imaging (MRI) machines that produce high-resolution pictures; another that is using its own version of light-sheet microscopy to generate 3-D prostate images for easier and more accurate identification of aggressive cancers; and a third that is designing a camera the size of a coarse grain of salt that nonetheless yields ultrahigh-quality images, with potential for medical and many other applications.

Lower field, better access MRI

MRI machines seem to be moving ever higher in field strength, even beyond 11 tesla (T) [1], but a movement is afoot to develop low-field MRI systems operating below 1.5 T instead. In fact, some 350 scientists came together in March 2022 for a first-of-its-kind International Society for Magnetic Resonance in Medicine workshop on low-field MRI, according to workshop co-organizer Orlando Simonetti, Ph.D. (Figure 1), who is also research director of cardiovascular magnetic resonance, professor of internal medicine and radiology, and John W. Wolf professor in cardiovascular research at Ohio State University (OSU).

Figure 1. Ohio State University research groups of Orlando Simonetti (shown standing) and Rizwan Ahmad used a technique called compressed sensing to make dramatic improvements to the quality of low-field magnetic resonance images. Compared to traditional MRI machines, low-field versions can be lighter and less expensive to purchase and install, have larger patient bores (openings), and could provide safer and more effective imaging for patients with pacemakers and other implanted metallic devices. Simonetti is shown here with technologist and collaborator Heather Hermiller during an MRI at The Ohio State University Wexner Medical Center. (Photo courtesy of OSU.)

The interest in lower field strengths stems from their advantages over today’s high-field MRI machines. For instance, lower-field MRI machines greatly lessen the image-distorting artifacts that occur near pacemakers and other implanted metallic devices; they cost less to make and install; and they can permit a larger patient bore (tube diameter), Simonetti said. The big hurdle, however, has been a poor signal-to-noise ratio that yields less-than-optimal images, but his group, in conjunction with that of OSU biomedical engineer Rizwan Ahmad, Ph.D., has found a way to greatly improve the quality of low-field images, including those in Simonetti’s focus area of cardiac imaging.

With cardiac imaging, the OSU researchers sought to obtain a clear snapshot of the heart in its contractile position, a challenging proposition because the heart continues to beat and to move with respiration during an MRI. Their solution was to look to a technique called compressed sensing, which is used commercially and clinically to accelerate data acquisition, or basically to reconstruct images from less data, Simonetti explained [2]. Since cardiac imaging occurs in a dynamic fashion and collects a series of images over time, the researchers realized that much of the image data from frame to frame is redundant. “We used compressed sensing to exploit that redundancy and extract a lot more signal to noise,” he said. “In fact, the signal-to-noise we get with this technique is outstanding—to the point that it’s hard to tell the difference between our low-field images and those acquired at a higher field strength.”
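The core idea of compressed sensing, recovering a signal from fewer measurements by exploiting redundancy, can be illustrated with a toy problem. The Python sketch below is a minimal example and not the OSU group’s reconstruction: it treats one pixel’s intensity over 64 frames as a signal that is sparse in the temporal Fourier domain (a stand-in for frame-to-frame redundancy), keeps only 24 of the 64 samples, and fills in the rest with the iterative shrinkage-thresholding algorithm (ISTA). Clinical real-time cardiac reconstructions instead undersample k-space in every frame and use more elaborate sparsifying transforms; the signal, sampling pattern, and parameter values here are arbitrary assumptions.

```python
import cmath
import math
import random


def make_unitary_dft(n):
    """Return forward/inverse unitary DFTs for length-n lists (O(n^2), fine for a toy)."""
    twiddle = [cmath.exp(-2j * math.pi * m / n) for m in range(n)]
    scale = 1 / math.sqrt(n)

    def forward(x):
        return [scale * sum(x[t] * twiddle[(k * t) % n] for t in range(n)) for k in range(n)]

    def inverse(c):
        # twiddle[(-k*t) % n] equals exp(+2j*pi*k*t/n)
        return [scale * sum(c[k] * twiddle[(-k * t) % n] for k in range(n)) for t in range(n)]

    return forward, inverse


def soft_threshold(z, lam):
    """Shrink a complex value toward zero by lam (the proximal step for an L1 penalty)."""
    mag = abs(z)
    return 0j if mag <= lam else z * (1 - lam / mag)


def ista_reconstruct(samples, n, iters=150, lam_final=1e-3):
    """Recover a length-n signal from {time index: value}, assuming DFT-domain sparsity."""
    forward, inverse = make_unitary_dft(n)
    zero_filled = [samples.get(t, 0.0) for t in range(n)]
    lam = 0.5 * max(abs(v) for v in forward(zero_filled))  # start with a large threshold
    decay = (lam_final / lam) ** (1 / iters)  # shrink it geometrically (continuation)
    coeffs = [0j] * n
    for _ in range(iters):
        estimate = inverse(coeffs)
        # Data mismatch only where we actually measured; missing frames are unconstrained.
        residual = [samples[t] - estimate[t] if t in samples else 0j for t in range(n)]
        gradient = forward(residual)
        coeffs = [soft_threshold(c + g, lam) for c, g in zip(coeffs, gradient)]
        lam *= decay
    return [v.real for v in inverse(coeffs)]


def relative_error(estimate, truth):
    num = math.sqrt(sum((e - t) ** 2 for e, t in zip(estimate, truth)))
    return num / math.sqrt(sum(t * t for t in truth))


if __name__ == "__main__":
    n = 64
    # Hypothetical periodic "heartbeat" intensity: only four nonzero DFT coefficients.
    truth = [math.cos(2 * math.pi * 3 * t / n) + 0.5 * math.cos(2 * math.pi * 7 * t / n)
             for t in range(n)]
    kept = random.Random(1).sample(range(n), 24)  # measure only 24 of 64 frames
    samples = {t: truth[t] for t in kept}
    zero_filled = [samples.get(t, 0.0) for t in range(n)]
    print("zero-filled error:", relative_error(zero_filled, truth))
    print("ISTA error:", relative_error(ista_reconstruct(samples, n), truth))
```

The zero-filled estimate simply treats missing frames as zero, whereas ISTA searches for the sparsest set of Fourier coefficients consistent with the frames that were measured, which is what lets it fill in the gaps. Starting with a large threshold and shrinking it each iteration is a common heuristic to speed convergence, not a requirement of the method.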

The OSU group shared its work with Siemens Medical Solutions, of Malvern, PA, USA, which had begun pursuing low-field MRI. “I think our evidence gave them added confidence that this is a way to go,” Simonetti said. Siemens went on to develop its 0.55 T MAGNETOM Free.Max scanner, which received U.S. Food and Drug Administration (FDA) clearance for clinical use in July 2021 [3]. One of the first of these scanners in the United States was installed at the OSU Wexner Medical Center. The new scanner has an 80 cm patient bore, compared with the 60–70 cm bores of conventional MRI machines, which makes it more suitable for large patients and those who are claustrophobic (Figure 2).

Figure 2. OSU Wexner Medical Center is one of the first in the nation to install the new, FDA-cleared Siemens MAGNETOM Free.Max MRI machine, which has a lower magnetic field strength, as well as a large patient bore (opening) better suited than traditional machines to accommodate obese and claustrophobic individuals. (Photo courtesy of OSU.)

Simonetti’s group is now beginning studies with interventional cardiologist Dr. Aimee Armstrong of Nationwide Children’s Hospital of Columbus, OH, USA, to assess the capabilities of low-field MRI for heart-catheterization procedures, something that wasn’t possible with higher-field MRI because it heated the metal guidewires and catheters too much. “We’ve run a few experiments in a large animal model (pigs), where we’re doing catheterizations right in the 0.55 T scanner with real-time imaging, and testing different devices to try to improve the visibility when deploying stents or placing a catheter in a certain location to make a pressure measurement. So far, it’s gone very well,” he said. Concurrently, his group is continuing to perfect the imaging techniques; collaborating with several companies to optimize devices for visibility at low field strength (e.g., iron oxide and other markers visible below 1.5 T); and beginning a five-year study funded by the National Institutes of Health to develop low-field imaging techniques for the severely obese population, a group at high risk of heart failure.

“It has really been fascinating to watch this field over the past five years or so, because as MRI goes toward higher field strength, and the cost and complexity of those machines ratchets up exponentially, we have this pivot to the low fields where the systems are much lighter, smaller, and less complex,” Simonetti said, noting that the company Hyperfine of Guilford, CT, USA, has developed a portable low-field MRI system that can be wheeled down a hospital hallway and into a patient’s room [4]. He added, “Low-field MRI really changes the game, and it will be interesting to see what comes next.”

Prostate in 3-D

For prostate cancer, pathologists must decide whether the cancer is the aggressive and potentially fatal type that benefits from radiation, surgery, or other treatments that carry potential side effects, or if it is the low-risk, indolent type that needs no treatment. Currently, pathologists make their determinations based on a visual examination of the glandular architecture within a small number of thin 2-D sections of biopsied prostate tissue or surgical specimens. That is not good enough, contends Jonathan T. C. Liu, Ph.D., professor of mechanical engineering, bioengineering, and laboratory medicine and pathology at the University of Washington (Figure 3).

One concern with this method is that the pathologist-reviewed sections represent only about 1% of the prostate biopsy, so critical areas can be missed, Liu said. “Besides that, only 2-D cross-sectional images are analyzed, and this technique is destructive of the tissue biopsy or specimen, which should ideally be preserved for genomics or other types of assays.”

A better option would be to view the entire sample in 3-D, he said. “If you can look at the whole tissue specimen, you have much more information, plus the 3D context to accurately assess the complex, branching-tree, glandular network of the prostate, which is currently the basis for grading the severity of the disease. And then if we can quantify those tissue structures using machine-learning tools, we can potentially make a much more accurate determination about disease aggressiveness to guide treatment decisions,”

Figure 3. Jonathan T. C. Liu (shown here) and his University of Washington research group are combining their innovative “open-top” light-sheet microscopes with machine-learning tools to provide a 3-D view and quantitative understanding of the prostate glandular network, which they have shown can help distinguish between lethal and indolent cancers. This is an important distinction because inaccurate grading can lead to potentially avoidable cancer progression and death, or to unnecessary treatments (and resulting side effects) for cancer that actually needs no treatment. (Photo courtesy of Mark Stone/University of Washington.)

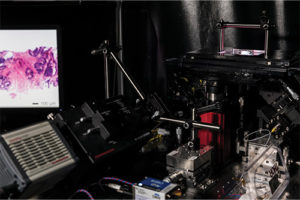

Liu said [5]. Liu and his group figured out how to do it. Their approach employs a so-called “open-top” light-sheet microscope, which is their version of a light-sheet microscope that uses single-plane laser light to illuminate samples (Figure 4). “Light-sheet microscopy is amazing technology, and it has been around and used by developmental biologists for 15–20 years, but it typically required extremely tedious tissue preparation. You could spend hours mounting a single Drosophila embryo, which was OK for a biologist’s lab, but not for a clinical setting in which hundreds and hundreds of biopsies need to be imaged each day,” he noted. To that end, they created a fluorescent analog of H&E staining (the histological stains, hematoxylin and eosin, that pathologists typically use for current 2-D analysis) that is fast, simple, and cheap, he said, “and can provide high-quality images that look like what the pathologists are used to seeing.” They also designed the microscope to be quick and easy to use. “All the pathologist needs to do is set the tissue sample on top of a glass plate, hit go, and the microscope scans through the tissues,” he said. Details of the fourth generation of their open-top light-sheet microscope were published in 2022 [6].

Figure 4. This early version of the open-top light-sheet microscope (at right) shows the tissue sample at the upper right and an example image of a prostate biopsy on the computer screen to the left. The Liu research group has since completed several iterations, publishing their most recent version of the microscope in May 2022. (Photo courtesy of Mark Stone/University of Washington.)

Most recently, Liu’s group has begun adding machine learning to the mix. “We’re trying to steer clear of the end-to-end, black-box deep-learning approach, and instead adopt an explainable step-by-step approach based on the structures that pathologists already use and trust for their determinations,” he said. “For instance, we segmented out the 3-D branching-tree network of prostate glands and quantified various geometric parameters (3-D features) to train a machine classifier to predict disease aggressiveness. We were able to show that it is a lot more accurate—5%–10% more accurate—than machine classifiers based on similar 2-D features” (Figure 5) [7].
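The step from segmented glands to classifier inputs can be sketched in a few lines. The Python example below is a minimal sketch that assumes segmentation has already reduced the gland network to a 3-D skeleton graph (node coordinates plus edges). The features it computes, namely branch-point and endpoint counts, total skeleton length, and mean branch tortuosity (path length divided by straight-line distance between a branch’s ends), are hypothetical illustrations of “geometric parameters,” not the feature set the Liu group actually used.

```python
import math
from collections import defaultdict

Point = tuple[float, float, float]


def skeleton_features(coords: dict[int, Point], edges: list[tuple[int, int]]) -> dict[str, float]:
    """Compute simple geometric features of a 3-D skeleton graph (hypothetical feature set)."""
    adj = defaultdict(list)
    for a, b in edges:
        adj[a].append(b)
        adj[b].append(a)
    degree = {v: len(adj[v]) for v in coords}
    total_length = sum(math.dist(coords[a], coords[b]) for a, b in edges)

    # A "branch" runs between two nodes whose degree is not 2 (endpoints or branch points).
    junctions = [v for v in coords if degree[v] != 2]
    branches = []  # (path length along skeleton, straight-line distance)
    seen = set()
    for start in junctions:
        for nxt in adj[start]:
            if frozenset((start, nxt)) in seen:
                continue
            seen.add(frozenset((start, nxt)))
            prev, cur = start, nxt
            path_len = math.dist(coords[prev], coords[cur])
            while degree[cur] == 2:  # walk through pass-through nodes
                a, b = adj[cur]
                step = b if a == prev else a
                seen.add(frozenset((cur, step)))
                path_len += math.dist(coords[cur], coords[step])
                prev, cur = cur, step
            branches.append((path_len, math.dist(coords[start], coords[cur])))

    tortuosities = [p / c for p, c in branches if c > 0]
    return {
        "branch_points": sum(1 for v in coords if degree[v] >= 3),
        "endpoints": sum(1 for v in coords if degree[v] == 1),
        "total_length": total_length,
        "n_branches": len(branches),
        "mean_tortuosity": sum(tortuosities) / len(tortuosities) if tortuosities else 1.0,
    }


if __name__ == "__main__":
    # A tiny Y-shaped skeleton with one kinked branch (coordinates are made up).
    coords = {0: (0.0, 0.0, 0.0), 1: (1.0, 0.0, 0.0), 2: (2.0, 0.0, 0.0),
              5: (2.0, 1.0, 0.0), 3: (3.0, 1.0, 0.0), 4: (3.0, -1.0, 0.0)}
    edges = [(0, 1), (1, 2), (2, 5), (5, 3), (2, 4)]
    print(skeleton_features(coords, edges))
```

A feature dictionary like this, computed per biopsy, could then be fed to any standard machine classifier; which classifier to use is left open here.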

Figure 5. This artistic rendering shows the various stages (left to right) of image processing that Liu’s group does to segment out the 3-D glandular network of the prostate: the prostate biopsy is stained with a fluorescent analog of H&E (left); deep learning-based image translation converts the H&E dataset into a synthetically immunolabeled dataset highlighting a biomarker (brown) that is expressed by the epithelial cells in all prostate glands; this enables 3-D segmentation of the prostate glands, as shown for the epithelium (yellow) and lumen spaces (red), as well as the basic 3-D configuration (skeleton) of the branching-tree gland network (magenta). Using quantitative features derived from these segmented 3-D structures, the researchers train a machine classifier to distinguish aggressive versus indolent cancer. (Image courtesy of Jonathan T. C. Liu.)

Liu and his group are continuing to refine the technology for prostate cancer, including adjusting it for different populations (e.g., African American vs. Caucasian men), as well as investigating other tissue structures that might help determine disease aggressiveness. They are also exploring its use for other cancers, such as imaging lymph nodes for improved staging of breast cancer [8] and detecting and grading esophageal neoplasms (dysplasia and cancer), as well as for imaging diseases beyond cancer. Meanwhile, a spinoff company co-founded by Liu and his clinical collaborators, Alpenglow Biosciences of Seattle, WA, USA, is working with researchers and pharmaceutical companies to obtain and analyze 3-D pathology datasets for diverse applications.

Figure 6. Research group of Felix Heide (shown here) at Princeton University, and researchers at the University of Washington are combining metasurface technology, neural nano-optics, and computational design to build a tiny, lightweight camera. (Photo courtesy of Princeton University, Sameer A. Khan/Fotobuddy.)

“For me, what’s most exciting is to make a clinical impact: helping patients to get the right treatments at the right time, while keeping other patients from getting treatments they don’t need so they don’t suffer avoidable side effects and financial burdens,” Liu said. “Besides these important health benefits, we can also learn a lot from these large datasets about things like the heterogeneity in cancer and how the immune system is involved in the progression and treatment of such cancers. That’s where I think the big data that we can provide is really transformative. We can interrogate large amounts of tissue and develop quantitative methods to analyze that big data, which can ultimately change our understanding of biology and medicine [9].”

Tiny camera, big view

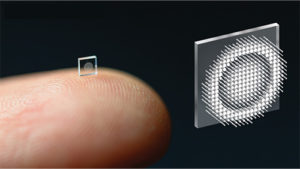

Today’s cameras are better than ever, but they continue to be built the old-fashioned way with bulky refractive lenses or with lens elements stacked one on top of the other. Researchers from Princeton University and the University of Washington are changing that. Using metasurface technology, neural nano-optics, and computational design, they have constructed a thin, lightweight camera small enough to balance on a fingertip, but with image capability rivaling that of a conventional compound camera lens [10].

Figure 7. This ultrasmall camera, shown here on a fingertip, is studded with millions of nanopillars that act as miniature, subwavelength antennas. The nanopillars modulate the incoming light (the wavefront) to produce a highly accurate image. (Photo courtesy of the researchers at Princeton University.)

“We started out by asking ourselves if we could compress the entire stack into a single surface, but to do that, we needed a lot of degrees of freedom and that’s where metasurfaces come in. They are arrays of millions of nanopillars that act like little antennas, and they are subwavelength, so we can really modulate the light, or incoming wavefront, at an unprecedented accuracy,” summarized Felix Heide, Ph.D., assistant professor of computer science at Princeton (Figure 6). To get just the right spatial arrangement of the nanopillars, which look like rows of tiny cylinders arising from a flat plate (Figure 7), they developed an artificial intelligence method to direct the design.
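One way to see how a nanopillar array can act as a lens: a flat lens that focuses a normally incident plane wave at focal length f must delay the wavefront at radius r by the textbook hyperbolic phase φ(r) = −(2π/λ)(√(r² + f²) − f), wrapped to [0, 2π), and each position is then filled with the pillar whose phase delay is closest. The Python sketch below uses that classic profile rather than the AI-optimized design the Princeton team describes, and its eight-entry pillar library (diameters and phases) is invented for illustration; real libraries come from electromagnetic simulations.

```python
import math

# Hypothetical pillar library: (diameter in nm, phase delay in radians).
# Real libraries are computed with electromagnetic simulations; these values are invented.
PILLAR_LIBRARY = [(100 + 20 * k, k * 2 * math.pi / 8) for k in range(8)]


def hyperbolic_phase(r_um, focal_um, wavelength_um):
    """Phase delay (radians, wrapped to [0, 2*pi)) needed at radius r to focus at distance f."""
    path_difference = math.sqrt(r_um ** 2 + focal_um ** 2) - focal_um
    return (-2 * math.pi / wavelength_um * path_difference) % (2 * math.pi)


def circular_distance(a, b):
    """Smallest angular difference between two phases, accounting for 2*pi wrap-around."""
    d = abs(a - b) % (2 * math.pi)
    return min(d, 2 * math.pi - d)


def pick_pillars(radii_um, focal_um, wavelength_um, library=PILLAR_LIBRARY):
    """For each radial position, return the diameter of the library pillar closest in phase."""
    chosen = []
    for r in radii_um:
        target = hyperbolic_phase(r, focal_um, wavelength_um)
        diameter, _ = min(library, key=lambda entry: circular_distance(entry[1], target))
        chosen.append(diameter)
    return chosen


if __name__ == "__main__":
    # Hypothetical lens: 50 um focal length, 0.532 um (green) light, pillars every 0.3 um.
    radii = [i * 0.3 for i in range(10)]
    print(pick_pillars(radii, focal_um=50.0, wavelength_um=0.532))
```

Because the pillar spacing is smaller than the wavelength, the phase can be set almost point by point, which is what the “lot of degrees of freedom” in the quote refers to; an optimized design replaces the fixed hyperbolic target with one learned jointly with the reconstruction software.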

That wasn’t all. They also devised computational-reconstruction algorithms that perform a final refinement to yield a super-sharp image. “Overall, what we’re doing is treating this entire signal-processing chain as one big trainable algorithm with parameters that we can optimize over. In other words, we can jointly design optics and computation together,” Heide said.

The spinoff company Tunoptix Inc. of Seattle, WA, USA, is beginning work to commercialize the camera, which Heide described as designed for manufacturability. “Basically, we piggyback on top of existing chip-fabrication techniques, so this could be done on already-existing code processors,” he said.

As that proceeds, the university researchers are exploring a number of applications for the technology. For example, they hope to build a medical endoscope that is the size of a few optical fibers, and are also considering very small optics equipment, including any necessary illumination, for far-less-invasive surgeries or diagnostics, Heide said.

Figure 8. Compared to previous micro-sized cameras (left), the new camera yields far clearer photos, similar to those produced by a conventional compound camera lens. (Photo courtesy of the researchers at Princeton University.)

Beyond biomedical uses, they are considering scaling to ultralarge metaoptics perhaps as large as 1 meter across, which could decrease the size and weight of large space telescopes; bundling computation with the optics for such uses as object detection for self-driving cars; or applying their reconstruction algorithms to remove aberrations from conventional camera optics, which could result in clearer images (Figure 8), and if applied to the cameras in smartphones, possibly even thinner phones. Heide remarked, “We are just at the start of this technology, but there is a lot of potential and many exciting areas to explore.”

References

[1] French Alternative Energies and Atomic Energy Commission (CEA). (Sep. 2021). The Most Powerful MRI Scanner in the World Delivers Its First Images! Accessed: Apr. 25, 2022. [Online]. Available: https://www.cea.fr/english/Pages/News/premieres-images-irm-iseult-2021.aspx

[2] K. Gil et al., “Rapid cardiovascular magnetic resonance protocol utilizing compressed sensing real-time imaging during the COVID-19 pandemic,” Eur. Heart J.-Cardiovascular Imag., vol. 22, no. 2, pp. 1–2, Jul. 2021.

[3] Siemens Healthineers. (Jul. 6, 2021). Siemens Healthineers Announces FDA Clearance of MAGNETOM Free.Max 80 cm MR Scanner. Accessed: Apr. 25, 2022. [Online]. Available: https://www.siemens-healthineers.com/en-us/press-room/press-releases/fdaclearsmagnetomfreemax.html

[4] Hyperfine Portable MR Imaging. (Feb. 16, 2022). Queen’s University Radiology Receives Hyperfine Portable MRI to Improve Access to Care for Canadian Patients in Remote Northern Communities. Accessed: Apr. 25, 2022. [Online]. Available: https://hyperfine.io/queens-university-radiology-receives-hyperfine-portable-mri-to-improve-access-to-care-for-canadian-patients-in-remote-northern-communities/

[5] A. K. Glaser et al., “Multi-immersion open-top light-sheet microscope for high-throughput imaging of cleared tissues,” Nature Commun., vol. 10, no. 1, Dec. 2019, Art. no. 2781.

[6] A. K. Glaser et al., “A hybrid open-top light-sheet microscope for versatile multi-scale imaging of cleared tissues,” Nature Methods, vol. 19, no. 5, pp. 613–619, May 2022.

[7] W. Xie et al., “Prostate cancer risk stratification via nondestructive 3D pathology with deep learning–assisted gland analysis,” Cancer Res., vol. 82, no. 2, pp. 334–345, Jan. 2022.

[8] L. A. Barner et al., “Multiresolution nondestructive 3D pathology of whole lymph nodes for breast cancer staging,” J. Biomed. Opt., vol. 27, no. 3, Mar. 2022, Art. no. 036501.

[9] J. T. C. Liu et al., “Harnessing non-destructive 3D pathology,” Nature Biomed. Eng., vol. 5, no. 3, pp. 203–218, Mar. 2021.

[10] E. Tseng et al., “Neural nano-optics for high-quality thin lens imaging,” Nature Commun., vol. 12, no. 1, Dec. 2021, Art. no. 6493. Accessed: Apr. 28, 2022. [Online]. Available: https://pubmed.ncbi.nlm.nih.gov/34845201/