Enhancing quality, expanding access, and measuring outcomes with the aid of artificial intelligence means better care for patients

A growing number of researchers and startup companies are looking to integrate artificial intelligence (AI) into perhaps the most human of places in health care: the therapy room [1]. The hope is that AI can help mitigate the time and expense it takes for humans to rate and code elements of the patient-provider interaction in a therapy session, a process that is vital for training new therapists, evaluating therapy quality, and conducting research studies and clinical trials of psychotherapy.

Detecting empathy—and more

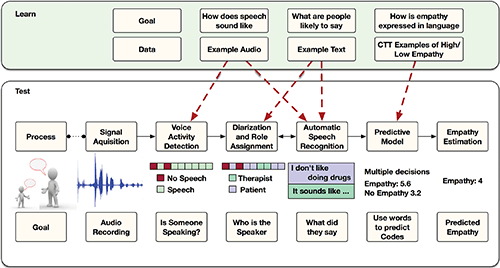

When Zac Imel, Ph.D., professor and director of clinical training at the University of Utah and co-founder and chief science officer at Lyssn, first considered integrating AI with psychotherapy, he began with a proof-of-principle study (Figure 1). Could AI be used to rate how empathetic a therapist was in a given session, given that empathy is a vital component of effective therapy? To test this, Imel and his colleagues trained a machine learning model on several hundred counseling session transcripts and recordings paired with human ratings of counselor empathy [2] (Figure 2). They found that the model could detect empathy from transcripts with a reliability similar to that of human raters, paving the way for more studies using machine learning to detect other therapy skills.
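One simple way to make "reliability similar to human raters" concrete is to compare how closely a model's scores track one human rater with how closely two human raters track each other. The sketch below does this with a Pearson correlation. It is an illustration only, not the analysis reported in [2]: the ratings are made up, and the 1 to 7 scale and the variable names are assumptions.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation between two equal-length sequences of numbers."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length sequences with at least 2 items")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / sqrt(var_x * var_y)


# Made-up empathy ratings for six sessions (hypothetical 1-7 scale).
rater_a = [2, 5, 6, 3, 7, 4]
rater_b = [3, 5, 6, 2, 6, 4]
model = [2.4, 4.8, 5.5, 3.1, 6.6, 4.2]

human_human = pearson(rater_a, rater_b)  # agreement between two people
model_human = pearson(model, rater_a)    # agreement between model and a person

print(f"human vs. human: {human_human:.2f}")
print(f"model vs. human: {model_human:.2f}")
```

If the model-to-human figure lands in the same range as the human-to-human figure, the model is behaving roughly like one more rater. Published work typically uses more careful agreement statistics than a single correlation.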

In 2017, Imel and a group of other researchers founded Lyssn, a company that uses automatic speech recognition and AI to support the training and supervision of behavioral health specialists via a Health Insurance Portability and Accountability Act (HIPAA)-compliant cloud-based platform. More than 20,000 human-rated psychotherapy sessions were used to train Lyssn’s algorithms.

Figure 1. Zac Imel, Ph.D., professor and director of clinical training at the University of Utah and co-founder and chief science officer at Lyssn. (Photo courtesy of Z. Imel.)

When fed a recording or transcript of a therapy session, Lyssn’s AI automatically detects more than 54 research-backed metrics of therapy quality, such as provider empathy, active listening skills, and engagement. Lyssn can also detect elements of evidence-based practices, including motivational interviewing and cognitive behavioral therapy (CBT), and can create session summaries and clinician notes.

Technical advancements in AI—in natural language processing and deep learning in particular—over the last five to six years have made Lyssn’s detailed analytics possible. “The revolution that’s happened in natural language processing is [that] we figured out ways to learn from unlabeled data and then transfer that learning to a specific problem,” says Michael Tanana, Ph.D., co-founder and chief technology officer at Lyssn (Figure 3). This advance means Lyssn’s AI is able to perform subtle, complicated tasks—like detecting empathy and writing clinical notes that look like they were generated by a person.

By integrating AI into the clinic, Lyssn gives therapist trainees more opportunities to practice skills and receive immediate feedback, and it expands opportunities for quality monitoring of therapy. The platform allows supervisors to see detailed information about the performance of individual therapists, such as how their listening skills have varied from session to session. Lyssn is also working with state governments to help evaluate evidence-based practices administered through federally funded programs. “There were already mandates for measuring what was happening,” says Imel. “Instead of having to pay a motivational interviewing expert thousands of dollars to grade just a few sessions, we can get the cost per provider down quite low, and we can give them maximum visibility into the quality of services across their systems.”

Now that this type of quality assurance evaluation is possible, it may lead to changes in mental health care administration. “There was no scalable way for people to demonstrate quality, and we’re starting to get into situations where payers are thinking about how can we actually pay for good quality services,” says Tanana. “As just a health care consumer, I think that’s what gets us out of bed in the morning.”

Looking forward, Lyssn’s next big horizon is providing therapists with real-time suggestions for improving their interactions with patients. “We’re still not in the middle of the interaction, and we have the technological capability to help in real time with the conversation,” says Imel. “We’ve done some internal proof of concept work on that and are really excited about where that might go.”

Expanding access to therapy

Another problem in mental health care that AI may help tackle is limited access to care. Expanding access has been the goal of ieso, a company based in the U.K. that has been providing assessment and treatment of mental health conditions primarily to patients within the National Health Service (NHS) for the past 10 years.

Figure 2. Overview of processing steps for moving from audio recording of session to predicted value of empathy. The lower portion of the figure represents the process for a single session recording, whereas the upper portion represents various speech signal processing tasks, learned from all available corpora (as indicated in the text). (Image and caption from [2]; Creative Commons Attribution License.)

Figure 3. Michael Tanana, Ph.D., co-founder and chief technology officer at Lyssn. (Photo courtesy of M. Tanana.)

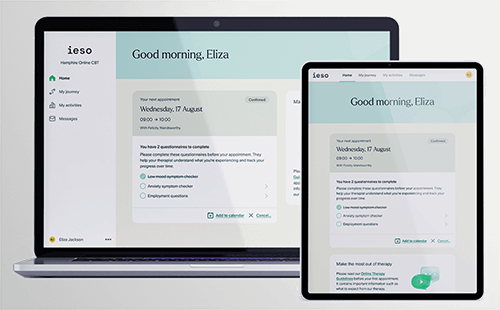

“In order to solve the access problem, the first thing we did is to provide therapy services online,” says Valentin Tablan, Ph.D., chief AI officer at ieso (Figure 4). Rather than rely on videoconferencing, therapists working for ieso provide patients with CBT via a text-based platform [3].

CBT therapists must convey a lot of information to their patients, so ieso chose to record all therapy sessions so that patients could review them later. Over the past 10 years, ieso has seen some 100,000 patients and delivered, and recorded, more than half a million therapy sessions. These records became a rich dataset that ieso could analyze with AI to explore what works in therapy.

Because ieso measures symptoms at every point of contact with a patient, the company is able to track how symptom intensity changes throughout treatment (Figure 5). “From a data science point of view, that’s an ideal situation. You have the full record of the intervention that was given to the patient and you have a measurement of the outcome, how the symptoms changed as a result of that intervention,” Tablan says.

Figure 4. Valentin Tablan, Ph.D., chief AI officer at ieso. (Photo courtesy of ieso.)

In 2019, ieso researchers published a study in which they used a deep learning model to automatically tag therapy sessions for different types of content and clinical events that occurred in the conversation between patient and therapist [4]. The researchers then examined how the composition of a therapy session, and the presence or absence of various elements of care, correlated with patient outcomes. For example, content related to therapeutic praise, discussing perceptions of change, and planning for the future was positively associated with improvement.
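The second step, relating tagged session content to outcomes, can be sketched in a few lines once each utterance already carries a tag. The example below is a deliberately simplified presence/absence contrast, not the modeling used in [4]. The tag names, the toy cases, and both function names are hypothetical, and a difference in rates shows association only, not cause.

```python
from collections import Counter


def tag_shares(utterance_tags):
    """Fraction of a session's utterances that carry each tag."""
    total = len(utterance_tags)
    if total == 0:
        return {}
    return {tag: n / total for tag, n in Counter(utterance_tags).items()}


def improvement_rate_by_presence(cases, tag):
    """Improvement rate for cases with vs. without `tag` in the session.

    `cases` is a list of (utterance_tags, improved) pairs. The result maps
    True (tag present) and False (tag absent) to a rate, or None if empty.
    """
    groups = {True: [], False: []}
    for tags, improved in cases:
        groups[tag in tags].append(improved)
    return {
        present: (sum(flags) / len(flags) if flags else None)
        for present, flags in groups.items()
    }


# Toy data: (tags assigned to a session's utterances, did the patient improve?)
cases = [
    (["greeting", "therapeutic_praise", "planning_for_future"], True),
    (["greeting", "agenda_setting"], False),
    (["therapeutic_praise", "agenda_setting"], True),
    (["greeting", "planning_for_future"], True),
    (["agenda_setting", "greeting"], False),
    (["therapeutic_praise"], False),
]

rates = improvement_rate_by_presence(cases, "therapeutic_praise")
print(rates)
```

A real analysis would work with how much of each session a content type occupies (what `tag_shares` returns) and would adjust for factors such as starting symptom severity.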

ieso took the results of this study and integrated them into a tool for the clinical supervision team that oversees ieso therapists. The tool analyzes every session of therapy, scores it against criteria of therapy effectiveness, and displays it on a data dashboard. Supervisors can use this information to check in with therapists who have patients who are not making the expected progress.

Tablan says this consultation between human supervisor and therapist is key for understanding the nuances of a particular case. “The system may say that this therapist is not doing this particular element of CBT that we know is supposed to be good for patients, but there may be a good reason for that,” he says.

Figure 5. User interface for the ieso therapy platform. (Image courtesy of ieso.)

This AI-mediated feedback may be leading to better care for patients. For 2021, ieso reports a recovery rate for depression of 62% compared to the national average of 50%. For generalized anxiety disorder it was 73% versus a national average of 58%.

The hope is that AI tools can help clinicians offer more effective and efficient therapy, but that alone isn’t enough to adequately expand access to mental health care. “There are not sufficient therapists around the world,” says Tablan. He notes that the NHS is currently working toward a 25% access target—meaning that one in four people who need help will actually get it.

With that in mind, ieso has been working on a digital therapeutics program that will deliver care via virtual “conversational agents.” Tablan predicts that some patients may be able to receive all of their care through a conversational agent—and may actually prefer it—while others may use an agent as a supplement to their regular therapy, reducing the amount of time a therapist needs to work with a particular patient so they can help more people.

Computerized CBT programs have been available for decades but have suffered from lack of patient engagement. Tablan says ieso is building something different. “We don’t start from the CBT book and deliver that in a digital format,” he says. “We start from what actually happens between real patients and real therapists, and we emulate that.”

The company is currently testing a virtual support tool called the CBT Connect app. While the study is not yet done, engagement data looks promising. “Patients use the app to an amount of time that’s pretty much equivalent with an extra therapy session,” says Tablan. “It’s a way of increasing the dose without increasing the time demands on the therapist.”

Training the next generation

Clinicians say that psychotherapy is both an art and a science, but sometimes the science gets lost in the shuffle. Could AI help bring it back?

Figure 6. Katie Aafjes-van Doorn, DClinPsy, assistant professor of clinical psychology at Yeshiva University and co-founder and clinical lead of Deliberate. (Photo courtesy of K. Aafjes-van Doorn.)

“As a trained clinical psychologist and as a clinician, I’ve always been surprised by how unscientific the field is,” says Katie Aafjes-van Doorn, DClinPsy, assistant professor of clinical psychology at Yeshiva University and co-founder and clinical lead of Deliberate (Figure 6). “It seems like we do a lot on intuition, what feels right, how the patient responds to you, but it seems like science and clinical practice are quite distant, or at least not working well together always.” Aafjes-van Doorn says she thinks this might especially be true for less regimented forms of therapy like psychoanalysis and psychodynamic therapy, which are her preferred modalities.

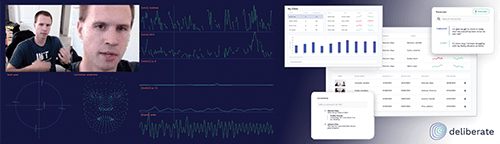

To better integrate science with the practice of psychotherapy, Aafjes-van Doorn and a group of other researchers formed a company called Deliberate, which provides an AI-backed platform for analyzing objective measures for mental health diagnosis and treatment from video recordings and patient-provided ratings (Figure 7). The platform can be used to train and evaluate therapists as well as for clinical trials and research studies.

For clinicians, Deliberate allows for routine outcome monitoring based on session-by-session patient ratings of symptoms and the therapeutic relationship. The platform uses this information to flag patients who might be getting worse. “That is something that we know as clinicians we’re so blind [to],” she says. “We have no clue. We think we help everyone so this is something where we need help.”
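As a rough illustration of routine outcome monitoring, the sketch below flags a patient when the most recent self-reported symptom score is noticeably higher than the first one. This is not Deliberate's actual rule: the scale (higher means worse), the threshold of 5 points, the patient IDs, and the function name are all assumptions made for the example.

```python
def flag_deterioration(scores, min_increase=5):
    """Return True if the latest symptom score is at least `min_increase`
    points above the first recorded score (higher score = worse symptoms)."""
    if len(scores) < 2:
        return False  # not enough sessions to judge a trend
    return scores[-1] - scores[0] >= min_increase


# Hypothetical session-by-session symptom ratings per patient.
patients = {
    "patient_a": [14, 12, 9, 8],    # improving
    "patient_b": [10, 11, 14, 16],  # getting worse
    "patient_c": [12],              # only one session so far
}

flagged = sorted(pid for pid, scores in patients.items()
                 if flag_deterioration(scores))
print(flagged)  # ['patient_b']
```

A flag of this kind is best read as a prompt for the clinician to look more closely, not as a verdict on the treatment.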

Figure 7. Example of data collection and user interface in the Deliberate platform. (Image courtesy of K. Aafjes-van Doorn.)

When using this tool in her training clinic, Aafjes-van Doorn has learned that it is important to educate clinicians about the role of the AI-mediated feedback in their training and practice. “There are a million different reasons why symptoms might go up. It might be that the patient is actually more aware of their problems once they start talking about it, and that’s actually a good thing,” she says. “It is quite hard to explain to people that it’s not about their competency or being a bad therapist, but it’s actually just another data point that we can consider and think about.”

In a research project, Aafjes-van Doorn is using AI to detect the interpersonal skills that evidence suggests are the most important for all therapists to cultivate—regardless of the type of therapy they are delivering. These common factors are called Facilitative Interpersonal Skills (FIS) and include elements like focusing on the therapeutic relationship, showing empathy, and creating hope and positive expectations [5].

A common training module for FIS involves showing trainees video clips of patients, asking them to respond as they would in a session, and then rating their skills. Aafjes-van Doorn’s group is working to automate this process by using the Deliberate platform to collect a large sample of recorded therapist responses with high-quality video that can be used to train a machine learning model to predict FIS.

Aafjes-van Doorn says that analyzing both movement and audio metrics will likely be key for creating a successful machine learning model and something that hasn’t been done in other attempts to use AI to automate FIS training. “We can look at head movement, body movements, even hands movement, and also the synchrony between the therapist and the patient and how they are attuned in their movements,” she says.
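One common way to quantify movement synchrony between two people is a lagged correlation: slide one movement signal against the other and see at which offset they line up best. The sketch below is a minimal version of that idea and is not a description of Deliberate's method. The per-frame "movement energy" values, the frame-based lag, and the function names are assumptions.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation between two equal-length sequences of numbers."""
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


def best_lag(therapist, patient, max_lag=3):
    """Return (lag, r) for the lag with the highest correlation.

    A positive lag means the patient's movement follows the therapist's
    by `lag` frames; a negative lag means the patient leads.
    Both signals must have the same length.
    """
    n = len(therapist)
    best = None
    for lag in range(-max_lag, max_lag + 1):
        if lag >= 0:
            a, b = therapist[:n - lag], patient[lag:]
        else:
            a, b = therapist[-lag:], patient[:n + lag]
        r = pearson(a, b)
        if best is None or r > best[1]:
            best = (lag, r)
    return best


# Made-up movement energy per video frame; the patient's signal is the
# therapist's signal delayed by two frames.
therapist = [0, 1, 3, 2, 0, 1, 4, 2, 0, 1]
patient = [0, 0] + therapist[:-2]

lag, r = best_lag(therapist, patient)
print(lag, round(r, 2))
```

Real recordings are noisy, so analyses of this kind usually work over short sliding windows and compare the result against shuffled data to rule out chance alignment.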

However, not all therapists are on board with adopting AI-based tools. Privacy and ethical concerns are barriers for some. That is one advantage of using a platform like Deliberate, which is HIPAA-compliant. Deliberate is also developing feature extraction methods that would allow researchers and clinicians to use interesting features from a video therapy session without being able to identify the patient or therapist. “That could change the field a lot if people can be totally certain that they wouldn’t be identified,” she says.

Other clinicians may be concerned that they will one day be replaced by an AI therapist. While Aafjes-van Doorn can see a role for digital therapeutics in helping people with low-level symptoms, she does not anticipate an end to human therapists altogether. “The relational aspect of being in a room or even being in a zoom room together and feeling what it’s like to be with you so that I can understand what your interpersonal world might be like—I think that is still something that psychotherapy can offer that is not necessarily replaceable,” she says.

Yet, it is a concern she has heard from others when talking about her work. “I think that is a fear that we do need to tackle and take seriously,” she says.

Aafjes-van Doorn hopes that these concerns can be adequately addressed so that more clinicians can benefit from the fruits of AI. “What I hope at least is that it’s heading toward not just being a fancy tool for some psychotherapy researchers,” she says. “I would hope that it’s a far more common practice with clinicians seeing that these clinical tools actually will help them and will help their patients. Because that’s ultimately what we want to do.”

References

[1] K. Aafjes-van Doorn et al., “A scoping review of machine learning in psychotherapy research,” Psychotherapy Res., vol. 31, no. 1, pp. 92–116, Jan. 2021. Accessed: Jul. 15, 2022, doi: 10.1080/10503307.2020.1808729.

[2] B. Xiao, Z. Imel, P. Georgiou, D. Atkins, and S. Narayanan, “‘Rate my therapist’: Automated detection of empathy in drug and alcohol counseling via speech and language processing,” PLoS ONE, vol. 10, no. 12, 2015, Art. no. e0143055. Accessed: Jul. 15, 2022. [Online]. Available: https://journals.plos.org/plosone/article?id=10.1371/journal.pone.0143055

[3] D. Kessler et al., “Therapist-delivered internet psychotherapy for depression in primary care: A randomized controlled trial,” Lancet, vol. 374, no. 9690, pp. 628–634, 2009. Accessed: Jul. 15, 2022, doi: 10.1016/s0140-6736(09)61257-5.

[4] M. P. Ewbank et al., “Quantifying the association between psychotherapy content and clinical outcomes using deep learning,” J. Amer. Med. Assoc. Psychiatry, vol. 77, no. 1, p. 35, Jan. 2020. Accessed: Jul. 15, 2022, doi: 10.1001/jamapsychiatry.2019.2664.

[5] T. Anderson et al., “Therapist effects: Facilitative interpersonal skills as a predictor of therapist success,” J. Clin. Psychol., vol. 65, no. 7, pp. 755–768, Jul. 2009. Accessed: Jul. 15, 2022, doi: 10.1002/jclp.20583.