For people without disabilities, technology makes things easier.

For people with disabilities, technology makes things possible.

Advances in medicine have led to a significant increase in human life expectancy and, therefore, to a growing number of disabled elderly people who need chronic care and assistance [1]. The World Health Organization reports that the world’s population over 60 years old will double between 2000 and 2050 and quadruple for seniors older than 80 years, reaching 400 million [2]. In addition, strokes, traffic-related and other accidents, and seemingly endless wars and acts of terrorism contribute to an increasing number of disabled younger people.

These and other causes have increased the population of disabled patients, who mostly end up in clinics and hospitals—places that are already overcrowded. Consequently, alternatives for care and rehabilitation need exploration. Rehab interventions involving virtual reality technology [3], Microsoft Kinect-based exercise and “serious” games [4], and other similar proposals have been developed around the world with encouraging results, especially in the case of stroke survivors, for whom age is a significant risk factor.

Domestic care is a novel and promising area, supported by the use of biomedical sensors and physiological monitoring through portable ECG devices, inertial sensors, video recording, alarms for fall detection, and so on [5]. Patients can be monitored using wearable sensors that provide early warning of health deterioration. The demand for technologically advanced methods of home-based care may easily expand, so expectations for wide-scale adoption are not merely well-intentioned dreams. Moreover, almost daily, new smart home products appear that can be adapted to special needs, even when the companies don’t specifically market them to disabled patients.

Assistive technologies have emerged as a useful aid to people with disabilities. They aim at improving residual functions or capabilities, allowing the command of several devices. The main goal of these technologies is to support the independence of people with chronic or degenerative limitations in motor or cognitive skills and to aid in care of the elderly. The most cited needs for this population are those related to the activities of daily living and communication.

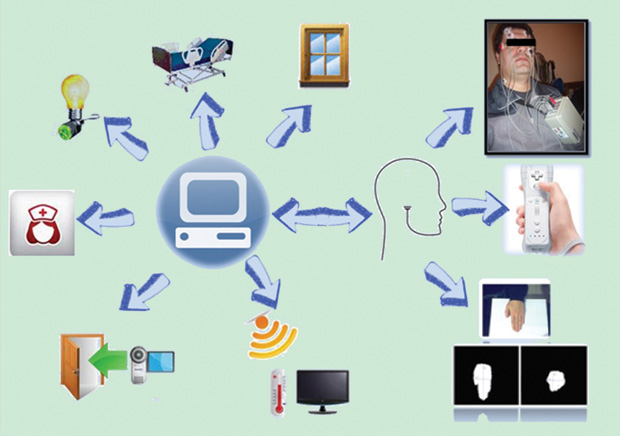

In this article, we present an assistive home care system specially designed for people with severe brain or spinal injuries and for poststroke survivors who are immobilized or face mobility difficulties. The main objective is to create the easy, low-cost automation of a room or house to provide a friendly environment that enhances the psychological condition of immobilized users (Figure 1).

System Overview

At the system’s core are a personal computer (PC) and iconographic software (referred to as SICAA, for the Spanish Sistema Integrado de Control y Automatización Asistida) that allow the patient to interact with the environment and peripheral devices, such as a webcam, intercom, lights, and electrical blinds. Several human–computer interfaces (HCIs) were developed and adapted for this system, according to the residual capability of different users. The SICAA software has three main elements: environment control (operating blinds, lights, an orthopedic bed, an air conditioner, a television, and an intercom), communication (nurse call and voice synthesizer), and computer access (to the Internet, for chat and games, and to text processors). Preliminary tests were well received by the patients and their families, as well as by healthy volunteers who tried this system. The entire system is a low-cost solution for people with disabilities, and the project is intended for implementation in the patient’s house.

Human-Computer Interface

Regarding the HCI, the major requirements are noninvasiveness, low cost, robustness, and adaptability for users with similar disabilities. Over many years, we have developed HCIs that were tested as control inputs with acceptable results, including a conventional mouse, an adapted keypad, a Nintendo Wii console, a vision-based interface (VBI) using hand detection, and an electrooculogram (EOG)-based interface.

The conventional mouse-keypad is the simplest and most essential piece of the whole system; however, some patients cannot operate it due to spasticity, abnormal movements, or tremor, among other causes. Hence, a VBI was adapted to the overall arrangement using the image of the user’s hand captured by a webcam. The image-processing algorithm detects the presence and orientation of the hand and uses this information to obtain the reference angles for generating the input commands to the mouse tracker. The first step in the processing algorithm is lighting compensation. The second step is transformation to the YCbCr color space and detection of the skin by segmentation there, because this color space proved more flexible and stable for the system, owing to its specificity for skin detection. Segmentation was performed by thresholding, and the image obtained is binary, with white representing the detected skin.
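The segmentation step can be illustrated with a minimal sketch. It assumes the standard ITU-R BT.601 full-range conversion from RGB and a pair of chroma bounds that are commonly quoted for skin detection; the article does not state the thresholds actually used, and the function names here are hypothetical. Lighting compensation, the first step of the pipeline, is omitted.

```python
# Assumed chroma bounds for skin; commonly quoted values, not taken from the article.
CB_RANGE = (77, 127)
CR_RANGE = (133, 173)


def rgb_to_ycbcr(r, g, b):
    """Convert one 8-bit RGB pixel to YCbCr (ITU-R BT.601 coefficients, full range)."""
    y = 0.299 * r + 0.587 * g + 0.114 * b
    cb = 128 - 0.168736 * r - 0.331264 * g + 0.5 * b
    cr = 128 + 0.5 * r - 0.418688 * g - 0.081312 * b
    return y, cb, cr


def is_skin(r, g, b):
    """Threshold only the chroma channels; the luma channel (Y) is ignored."""
    _, cb, cr = rgb_to_ycbcr(r, g, b)
    return (CB_RANGE[0] <= cb <= CB_RANGE[1]
            and CR_RANGE[0] <= cr <= CR_RANGE[1])


def skin_mask(image):
    """Return a binary image (255 = skin, 0 = background).

    `image` is a list of rows, each row a list of (r, g, b) tuples.
    """
    return [[255 if is_skin(*px) else 0 for px in row] for row in image]
```

Thresholding on Cb and Cr alone is what makes this kind of segmentation comparatively tolerant of brightness changes, since most of the brightness information is carried by Y.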

After that, it is necessary to determine the characteristics that represent the position and orientation of the hand. The characteristics chosen are the coordinates of the center of mass and the axes (minor L1 and major L2) of the hand, computed from the moments of the image. These axes are good descriptors of the hand orientation, and they allow the click action of the cursor to be performed by closing the hand, which is detected from the ratio between the axes. The movement of the cursor is generated from the coordinates of the center of mass and filtered to obtain a smooth, precise movement. The work plane matches the area in the webcam’s field of view, with the camera located at a fixed distance. The HCI was tested with several volunteers and under various experimental conditions to assess its robustness across different skin tones and colors, all with good results. The behavior was identical for all users because segmentation isolates only the skin. Some variations appear when there are changes in the ambient light conditions. To avoid this problem, we decided to incorporate a light source. More details about this interface can be found in [6].
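As a rough illustration of the feature extraction described above, the following sketch computes the center of mass and the two axis lengths from the first- and second-order moments of a binary mask, then derives a click decision and a smoothed cursor position. The click threshold, its direction (a closed hand is assumed to look rounder, so L1/L2 rises), and the exponential filter are assumptions made for this example; the article does not specify them.

```python
import math

CLICK_RATIO = 0.75  # assumed threshold on L1 / L2, not from the article


def hand_features(mask):
    """Return ((cx, cy), l1, l2) for the nonzero pixels of a binary mask.

    l1 and l2 are the minor and major axis lengths derived from the
    second-order central moments. Returns None if the mask is empty.
    """
    pts = [(x, y) for y, row in enumerate(mask)
           for x, v in enumerate(row) if v]
    if not pts:
        return None
    n = len(pts)
    cx = sum(x for x, _ in pts) / n
    cy = sum(y for _, y in pts) / n
    # Second-order central moments, normalized by the area.
    mu20 = sum((x - cx) ** 2 for x, _ in pts) / n
    mu02 = sum((y - cy) ** 2 for _, y in pts) / n
    mu11 = sum((x - cx) * (y - cy) for x, y in pts) / n
    # Eigenvalues of the 2x2 covariance matrix: spread along each principal axis.
    common = math.sqrt(4 * mu11 ** 2 + (mu20 - mu02) ** 2)
    lam_major = (mu20 + mu02 + common) / 2
    lam_minor = max((mu20 + mu02 - common) / 2, 0.0)
    return (cx, cy), 2 * math.sqrt(lam_minor), 2 * math.sqrt(lam_major)


def is_click(l1, l2):
    """Assumed rule: the hand counts as closed when the axes are similar."""
    return l2 > 0 and l1 / l2 >= CLICK_RATIO


def smooth(prev, new, alpha=0.3):
    """Exponential moving average of the cursor position (assumed filter)."""
    return tuple(alpha * n + (1 - alpha) * p for p, n in zip(prev, new))
```

A smaller `alpha` gives a steadier cursor at the cost of more lag, which may matter for users with tremor.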

Alternative command devices rely on inertial sensors, like those on a Nintendo Wii or another commercial console, such as the HP Swing. This is a plug-and-play solution, robust and ergonomic. Both HCIs were tested with healthy volunteers and one patient with tremors who cannot use a conventional mouse. Both commercial consoles are adequate, due to their easy connection, ergonomic shape, big buttons, and low price. The inertial sensor-based console is easy to use and does not require training, but its use is only possible for patients who maintain the ability to move their hand.

Interface Adapted for the Severely Disabled

For severely disabled people (for example, those with quadriplegia), we have developed a hybrid HCI based on the EOG and the electromyogram (EMG), as it allows mouse control using eye movement and the voluntary contraction of any facial muscle [7]. The bioelectrical signals are sensed with adhesive electrodes and are acquired by a custom-designed portable wireless system. The mouse can be moved in any direction (vertically, horizontally, and diagonally) by two EOG channels, and the EMG signal is used to perform the mouse-click action. Blinks are rejected by a decision algorithm, and the natural reading of the screen is possible with the designed software without interrupting the mouse tracker.

The device was tested with satisfactory results in terms of acceptance, performance, size, portability, and data transmission. Users can select a threshold from among five levels of sensitivity to suit their requirements and also load, modify, and save their profiles. This choice allows users to make smooth eye movements, such as those involved in reading or looking beyond the screen, that will not be considered a control signal. A training stage is necessary to gain full command of the HCI, especially for patients with severe diseases.
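The role of the sensitivity threshold can be sketched as a dead zone applied to each EOG channel. This is only an illustration under stated assumptions: the microvolt values, the EMG click level, and the function names are hypothetical and do not come from the article or from [7], and the blink-rejection step is not modeled.

```python
# Hypothetical dead-zone thresholds (microvolts) for the five sensitivity levels.
SENSITIVITY_UV = {1: 400, 2: 300, 3: 200, 4: 150, 5: 100}
EMG_CLICK_UV = 50  # assumed EMG amplitude that counts as a voluntary contraction


def direction(value_uv, threshold_uv):
    """Return -1, 0, or +1; amplitudes inside the dead zone are ignored."""
    if value_uv > threshold_uv:
        return 1
    if value_uv < -threshold_uv:
        return -1
    return 0


def eog_to_command(h_uv, v_uv, emg_uv, level=3):
    """Map two EOG channels and one EMG amplitude to (dx, dy, click).

    Diagonal movement results when both channels leave the dead zone.
    """
    threshold = SENSITIVITY_UV[level]
    dx = direction(h_uv, threshold)
    dy = direction(v_uv, threshold)
    return dx, dy, emg_uv >= EMG_CLICK_UV
```

For example, with the values assumed here, `eog_to_command(120, 0, 0, level=3)` yields no movement, so a small gaze shift while reading is ignored, whereas the same signal at level 5 moves the cursor horizontally.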

The choice of HCI depends on the user’s capabilities, and other interfaces can also be used with the system.

System Specifics

The SICAA constitutes the main implementation of the system, allowing integration of the commands of the HCI with control of the peripheral devices. The software design must meet several constraints related to user accessibility and ease of operation [8].

An iconographic interface, big buttons, and vibrant colors were chosen for the software, developed with Microsoft Visual Studio 2008 and Basic .NET Express. The software has a principal panel (Figure 2) that includes the date, the hour, a control menu showing the output states, and icons representing the television control, home control, Internet access, voice synthesizer, nurse call, bed control, and diary. The menu’s icons are easy to interpret and relatively large on the screen, enabling positioning by patients with some degree of spasticity or tremors.

In addition, a button provides direct access for turning off the lights, and a configuration entry gives access to the program’s maintenance and recalibration, if necessary. Each icon opens another window, with a specific menu related to the task group selected.

An on-screen QWERTY keyboard was designed, which appears in the front panel when necessary for writing in the selected application (such as the Internet browser, text processor, chat, diary, or voice synthesizer). The keyboard is predictive and has two modes, basic and advanced, implemented for users with different capabilities and training. The voice synthesizer panel shows preselected phrases, and it allows the addition of other expressions, defined by individual users. Configuration parameters are accessible only to the programmer or authorized personnel, to prevent mistakes and system failures. This menu allows the control of cursor sensitivity and language (Spanish, Portuguese, and English), among other functions.
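The article does not describe how the keyboard predicts words. One common approach, shown here purely as an assumption, ranks known words that start with the typed prefix by how often the user has written them. The class and method names are hypothetical.

```python
from collections import Counter


class WordPredictor:
    """Prefix-based word suggestions ranked by usage frequency (assumed scheme)."""

    def __init__(self, words=()):
        self.counts = Counter(w.lower() for w in words)

    def learn(self, word):
        """Record one more use of a word, e.g. after the user confirms it."""
        self.counts[word.lower()] += 1

    def suggest(self, prefix, k=3):
        """Return up to k words starting with prefix, most frequent first."""
        prefix = prefix.lower()
        matches = [(w, c) for w, c in self.counts.items()
                   if w.startswith(prefix)]
        # Sort by descending count, then alphabetically for stable ties.
        matches.sort(key=lambda wc: (-wc[1], wc[0]))
        return [w for w, _ in matches[:k]]
```

Fewer keystrokes per word matter most for the slower interfaces, such as the EOG-based one, where each selection costs the user real effort.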

The peripheral devices are controlled through a customized and isolated control board, with eight relay-driven digital outputs, three analog and six digital inputs, and an ATmega8-16PU microcontroller running at 1 MHz. To avoid incompatibilities with previously installed home appliances and devices, a generic infrared transducer was used to control the air conditioning and television. The intercom must be adapted to a webcam, thereby providing visual information to the patient. The door opens with an electrical lock. The nurse call is a light-and-sound alarm that can be stopped only by a physical button pressed by the caregiver, which ensures that the caregiver is present. Other devices such as the bed, home appliances, and lights only need a minimal electrical connection to be controlled by the SICAA. The peripheral devices used are objects whose operation is not affected by the type of control input and that require no structural modifications. The control panel shows the output state, offering a direct view of the entire system.
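The output state shown on the control panel, and the latching behavior of the nurse call, can be modeled in a few lines. This sketch covers only the state logic; the channel assignment and all names are hypothetical, and the actual link between the PC and the control board is not described in the article, so it is not modeled.

```python
class ControlBoard:
    """State of the eight relay outputs, kept as one bit per channel."""

    NUM_OUTPUTS = 8

    def __init__(self):
        self.outputs = 0  # bit i set means relay i is energized

    def set_output(self, channel, on):
        if not 0 <= channel < self.NUM_OUTPUTS:
            raise ValueError(f"channel must be 0..{self.NUM_OUTPUTS - 1}")
        if on:
            self.outputs |= 1 << channel
        else:
            self.outputs &= ~(1 << channel)

    def is_on(self, channel):
        return bool((self.outputs >> channel) & 1)


class NurseCall:
    """Latching alarm: the software can raise it but offers no way to clear it."""

    def __init__(self, board, channel=7):  # channel 7 is an arbitrary choice
        self.board = board
        self.channel = channel

    def trigger(self):
        """Called when the patient selects the nurse-call icon."""
        self.board.set_output(self.channel, True)

    def caregiver_button_pressed(self):
        """Called only from the physical button input on the board."""
        self.board.set_output(self.channel, False)
```

Keeping the reset path out of the patient-facing software mirrors the requirement that the alarm stops only when a caregiver is physically present.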

The cost, calculated for a 32-in television, a PC, and all the electronic components, comes to a total of US$5,000. This is a low-cost solution if we consider the psychological and physical benefits of the independence and ability to communicate that the system gives its potential users. There are commercial domotic systems available on the market and many “smart home” projects in research groups, but our objective was a design specifically for disabled people that provides a wide variety of plug-and-play HCIs, while also considering the economic condition of the potential users.

The use of this assistive technology helps in patients’ recovery, improving their relationship with those in attendance, as well as their comfort, self-esteem, and overall psychological condition.

Acknowledgments

The authors thank the Universidad Tecnológica Nacional, the Universidad Nacional de San Juan, and the National Council of Scientific Research of Argentina (CONICET) for supporting this research.

References

- [1] D. Webster and O. Celik, “Systematic review of Kinect applications in elderly care and stroke rehabilitation,” J. Neuroeng. Rehabil., vol. 11, p. 108, July 2014.

- [2] Organización Mundial de la Salud. (2014). Desafios sanitarios planteados por el envejecimiento de la población. [Online].

- [3] A. Darekar, B. J. McFadyen, A. Lamontagne, and J. Fung, “Efficacy of virtual reality-based intervention on balance and mobility disorders post-stroke: A scoping review,” J. Neuroeng. Rehabil., vol. 12, p. 46, May 2015.

- [4] C. Loconsole, F. Banno, A. Frisoli, and M. Bergamasco, “A new Kinect-based guidance mode for upper limb robot-aided neurorehabilitation,” in Proc. 2012 IEEE/RSJ Int. Conf. Intelligent Robots and Systems (IROS), Vilamoura, Portugal, pp. 1037–1042.

- [5] L. Mertz, “Convergence revolution comes to wearables: Multiple advances are taking biosensor networks to the next level in health care,” IEEE Pulse, vol. 7, no. 1, pp. 13–17, Jan. 2016.

- [6] E. Perez, C. Soria, N. M. López, O. Nasisi, and V. Mut, “Vision-based interfaces applied to assistive robots,” Int. J. Adv. Robot. Syst., vol. 10, p. 116, 2013.

- [7] N. M. Lopez, E. Orosco, E. Perez, S. Bajinay, R. Zanetti, and M. E. Valentinuzzi, “Hybrid human-machine interface to mouse control for severely disabled people,” Int. J. Eng. Innovative Technol., vol. 4, no. 11, pp. 164–171, May 2015.

- [8] D. Piccinini, N. M. López, E. Pérez, S. Ponce, and M. Valentinuzzi, “Assistive home care system for people with severe disabilities,” in Proc. 4th IEEE Biosignals and Biorobotics Conf. (ISSNIP), Feb. 2013.