Your phone scans your face to unlock its screen. A social media app offers suggestions of friends to tag in photos. Airline check-in systems verify who you are as you stare into a camera. These are just a few examples of how facial recognition technology (FRT) is now ubiquitous in everyday life. The industries of law enforcement, Internet search engines, marketing, and security have long harnessed FRT, but the technology is increasingly being explored in the health care setting, where its potential benefits, and risks, are much greater.

The technology generally involves scanning facial traits in two or three dimensions and plotting the physical characteristics of a person’s face: angle and distance from eyes to nose, for example, or the depth and contour of a cheekbone. Because the technology is generally accurate, hands-free, and usable from a distance (unlike fingerprint scans), it could be embedded in hospitals both to validate professionals’ access to health care facilities and to authenticate patients accurately, whether to minimize fraud or error or to improve patient compliance.
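To make that geometry concrete, here is a minimal sketch in Python of the kind of measurement described above: the distance and angle between two facial landmarks. The landmark names and pixel coordinates are invented for illustration, and the helper functions are hypothetical; real systems detect many more points automatically and are considerably more involved.

```python
import math

# Hypothetical 2D landmark coordinates in pixels (x, y); the values are made up.
landmarks = {
    "left_eye": (120.0, 150.0),
    "right_eye": (200.0, 150.0),
    "nose_tip": (160.0, 210.0),
}


def distance(p, q):
    """Euclidean distance between two points."""
    return math.hypot(q[0] - p[0], q[1] - p[1])


def angle_deg(p, q):
    """Angle of the line from p to q, in degrees, relative to the image x-axis."""
    return math.degrees(math.atan2(q[1] - p[1], q[0] - p[0]))


def face_features(lm):
    """Return two simple measurements for one face."""
    eye_span = distance(lm["left_eye"], lm["right_eye"])
    return {
        # Dividing by the eye span makes the ratio independent of image size.
        "eye_to_nose_ratio": distance(lm["left_eye"], lm["nose_tip"]) / eye_span,
        "eye_to_nose_angle": angle_deg(lm["left_eye"], lm["nose_tip"]),
    }


features = face_features(landmarks)
```

Expressing the eye-to-nose distance as a ratio of the eye span is one simple way to compare photos taken at different distances from the camera.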

A group at Seoul National University in South Korea, for example, has developed a facial recognition app to reduce patient misidentification in hospitals [1]. Such technology could also be used for patient check-ins or tracking (such as in assisted living facilities) and even to monitor patients’ moods and alert caregivers to instances of depression or pain.

Beyond improving hospital security and patient identification, FRT in health care is also being explored to diagnose and treat patients. By bolstering FRT with computational tools like machine-learning algorithms and neural networks, researchers are aiming to reduce pain and suffering and even save lives.

A doctor’s tool: Diagnosing disease at the snap of a photo

Karen Gripp, MD, a medical geneticist at Delaware-based Nemours/Alfred I. duPont Hospital for Children (Figure 1), had a problem. She was trying to identify a patient with unusual symptoms, and none of the typical genetic disorders were coming to mind.

She turned to an app she helped develop, Face2Gene, which allows clinicians to upload a smartphone image of a patient. The app maps the face with 130 landmarks and uses machine learning techniques to match facial characteristics to rare genetic disorders. It then offers a list of possible diseases and probabilities to the clinician. With Face2Gene, Gripp was able to identify an unusual case of the rare Wiedemann–Steiner syndrome for her mystery patient, as reported in Nature last year [2].
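As a rough illustration of how a ranked list of possible diseases with probabilities might be produced, the Python sketch below turns raw similarity scores into probabilities with a softmax and sorts them. This is not Face2Gene's actual algorithm, which is not detailed here; the function name, the scores, and the placeholder labels are all assumptions made for the example.

```python
import math


def rank_candidates(scores):
    """Convert raw similarity scores into probabilities with a softmax and
    return (label, probability) pairs sorted from most to least likely."""
    top = max(scores.values())
    # Subtracting the maximum keeps the exponentials numerically stable.
    exps = {label: math.exp(s - top) for label, s in scores.items()}
    total = sum(exps.values())
    ranked = [(label, e / total) for label, e in exps.items()]
    return sorted(ranked, key=lambda pair: pair[1], reverse=True)


# Hypothetical similarity scores for one photo; the labels are placeholders.
scores = {"syndrome_A": 2.1, "syndrome_B": 0.4, "syndrome_C": 1.3}
ranking = rank_candidates(scores)
for label, prob in ranking:
    print(f"{label}: {prob:.2f}")
```

A list like this is meant to prompt a clinician's judgment rather than replace it: the probabilities only say how the candidates compare with one another, not whether any of them is correct.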

“Sometimes the typical facial features [of a genetic disorder] are present, but they are so mild that a human expert has trouble identifying them. An algorithm is at times superior at identifying these,” says Gripp, who is also a professor of pediatrics at the Sidney Kimmel Medical School at Thomas Jefferson University.

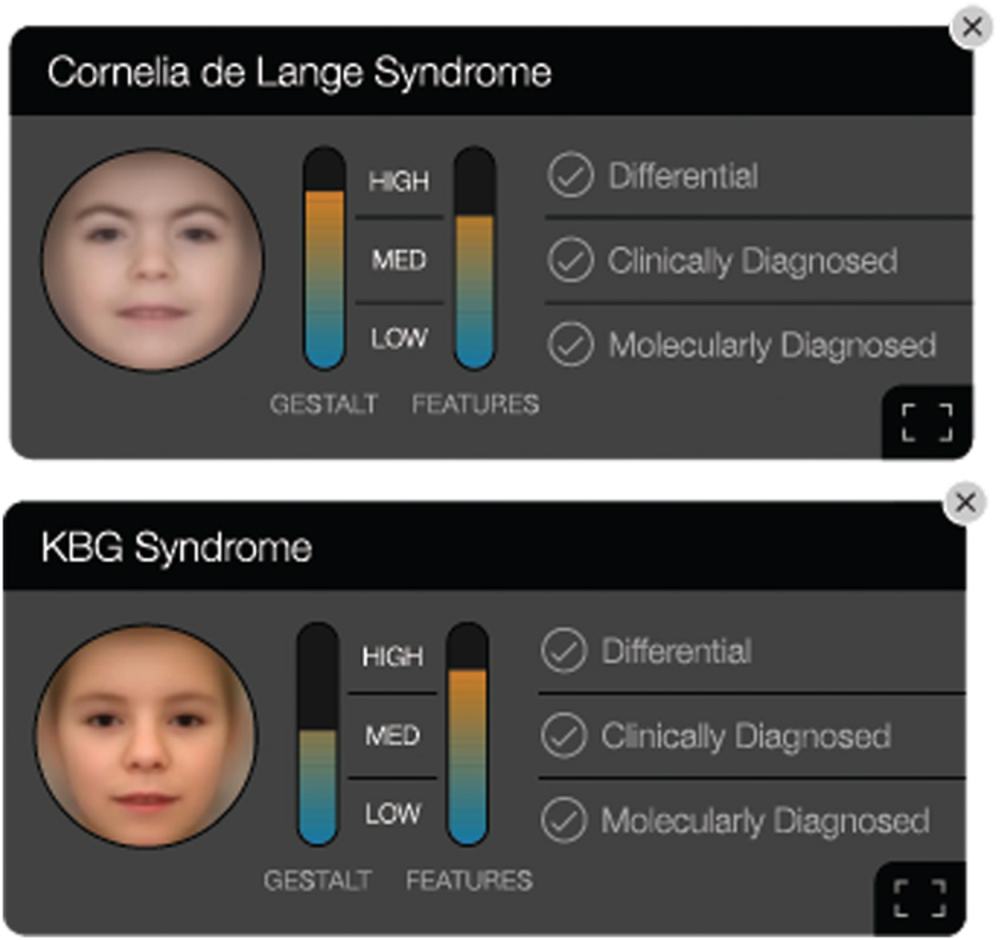

Developed by Boston-based FDNA, where Gripp is chief medical officer (CMO), the Face2Gene app has made headlines for FRT success in health care, with geneticists across the world using the platform [Figure 2(a) and (b)]. While this app is not the first to use facial imagery to help identify genetic disease, it has, to date, helped evaluate roughly 250,000 patients spanning more than 7,000 diseases, according to the company. And in a recent report, the app’s underlying artificial intelligence (AI) system, called DeepGestalt, outperformed clinicians in three experiments that involved identifying patients’ syndromes or subtypes [3]. Gripp says the team next plans to incorporate signals from other imaging technologies that can provide information about potential genetic conditions, such as brain magnetic resonance imaging (MRI) or hand radiographs.

“Face2Gene is definitely becoming more common as a tool for diagnosing cases. Like many clinical geneticists, I personally use the Face2Gene app when seeing patients,” says Paul Kruszka, MD, clinical geneticist at the National Human Genome Research Institute. He, along with researchers at the National Institutes of Health (NIH) and Children’s National Health System (CNHS), developed a facial analysis technology to recognize Down syndrome in non-Caucasian races, after Kruszka witnessed firsthand in Uganda how differences in physical appearance across race, along with complicating factors from additional diseases, can impact the accuracy of diagnosis in developing countries [4].

Indeed, bias in training FRT can lead to inaccurate results for groups of patients. If researchers train the software only on certain groups—all males, or all Caucasians, for example—then other populations won’t get the same benefits from the technology and may actually be put more at risk. Gripp says this is addressed in Face2Gene and that the algorithm works well for all ethnicities, providing an advantage over human bias.
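One simple way to check for the kind of bias described above is to measure accuracy separately for each group of patients rather than only overall. The Python sketch below does this on made-up evaluation records; the group names, labels, and function are hypothetical and do not describe how any particular product is validated.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """records: iterable of (group, true_label, predicted_label) tuples.
    Returns a dict mapping each group to its fraction of correct predictions."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, truth, predicted in records:
        total[group] += 1
        if truth == predicted:
            correct[group] += 1
    return {group: correct[group] / total[group] for group in total}


# Made-up evaluation results, for illustration only.
records = [
    ("group_1", "affected", "affected"),
    ("group_1", "unaffected", "unaffected"),
    ("group_1", "affected", "affected"),
    ("group_1", "unaffected", "unaffected"),
    ("group_2", "affected", "unaffected"),
    ("group_2", "unaffected", "unaffected"),
    ("group_2", "affected", "affected"),
    ("group_2", "affected", "unaffected"),
]
per_group = accuracy_by_group(records)
# A large gap between the best- and worst-served groups is a warning sign.
gap = max(per_group.values()) - min(per_group.values())
```

In this toy data the overall accuracy of 75% hides the fact that one group is served far worse than the other, which is exactly what a per-group breakdown is meant to expose.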

Kruszka, who recently used Face2Gene in a study on diagnosing diverse populations [5], hopes that this technology will allow medical providers to make accurate genetic syndrome diagnoses in developing countries, but he cautions it’s not a panacea. “This technology is only a tool, like the stethoscope, and requires considering the entire clinical picture such as brain and heart imaging studies and laboratory evaluations,” says Kruszka.

Detecting quiet pain

While efforts to diagnose disorders with FRT are gaining steam, another promising area of medical FRT involves gathering more information about a patient’s state or environment to assist caregivers—and patients themselves.

Several efforts are aiming to detect patient pain when patients can’t express it. For example, infants don’t always cry in pain, contrary to popular belief, and untreated pain in newborns can lead to a host of neurological and behavioral issues.

“Assessing pain in neonates is highly subjective and often results in misdiagnosis with the unwanted consequences of unnecessary interventions and failures to treat,” says Sheryl Brahnam, Ph.D., an information scientist at Missouri State University. “Studies have shown problems with observer bias based on professional attitudes when assessing pain and desensitization due to overexposure to neonatal pain in the clinic.”

Other factors, such as culture and gender, can also affect pain assessments, says Brahnam, who believes facial recognition systems that can automatically alert caregivers to pain in infants are one way to counter observer bias and provide better treatment. More than a decade ago, she developed one of the first systems to measure infant pain, called Classification of Pain Expressions (COPE), which analyzed hundreds of images of infants’ faces, both in pain and showing similar-looking expressions. The system was 90% effective in identifying pain in subsequent tests.

A recent system designed by a University of South Florida team similarly incorporated facial analysis and pattern recognition along with other data to autonomously detect pain in infants. The group is now working on monitoring—and even predicting—pain in infants after surgery using FRT coupled with other technology.

“We are developing a fully automated multimodal system that continuously measures pain and predicts the occurrence of future events of pain based on analysis of behavioral and physiological signals,” says Ghada Zamzmi, Ph.D., currently a research fellow at the National Institutes of Health. “Our experiments in procedural pain showed that [facial expression] is the most important indicator of pain in most cases.”

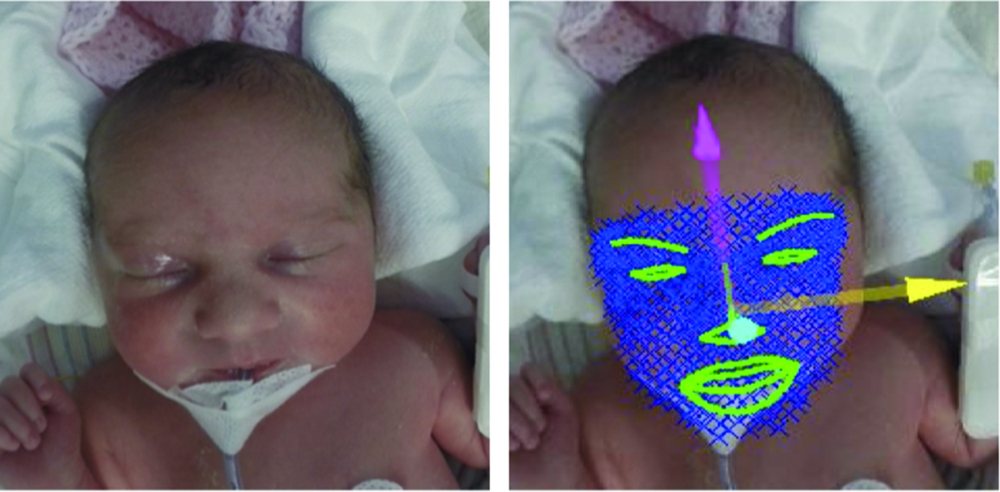

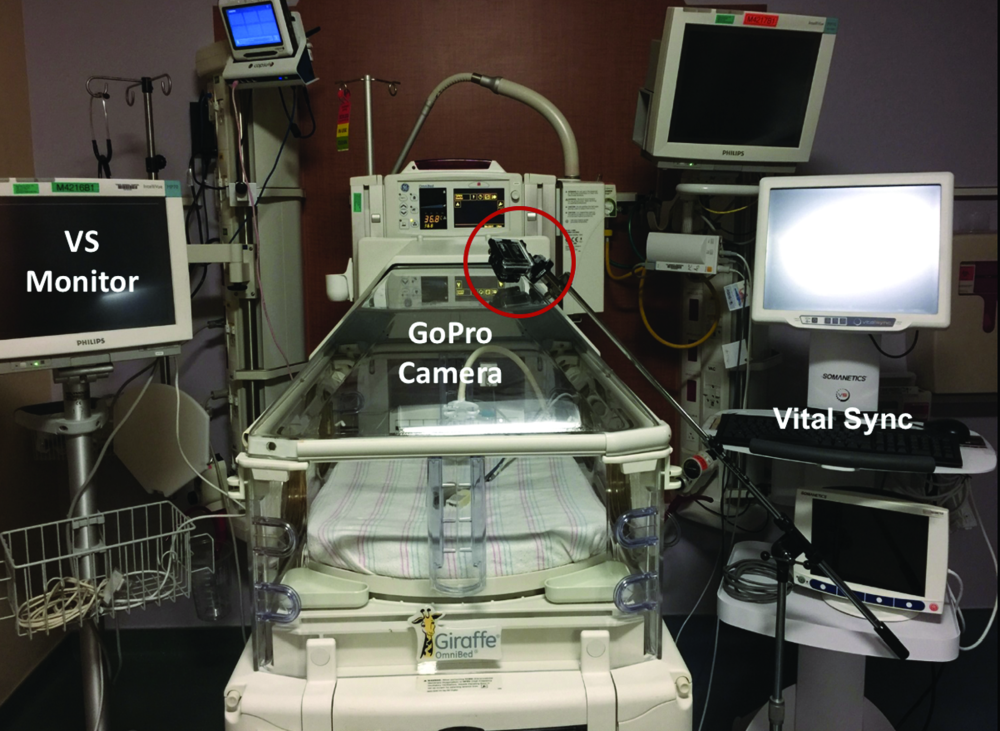

Zamzmi, along with University of South Florida faculty Dmitry Goldgof, Ph.D., Rangachar Kasturi, Ph.D., Yu Sun, Ph.D., and Sirajus Salekin, developed facial recognition software they call N-CNN, for neonatal convolutional neural network [6], [7]. The team also developed an algorithm for analyzing the facial expressions of newborns with postoperative pain, with results to be published at an upcoming IEEE International Conference on Automatic Face & Gesture Recognition (FG). By using multiple avenues of analysis (such as visual and vocal cues and vital signs) to sense and predict pain, the team’s system aims to ensure that it won’t miss a signal of pain regardless of factors like an infant’s position or sedation (Figures 3 and 4). While the group has made promising progress, Zamzmi says N-CNN is not quite ready for widespread use yet.

“Although our current approach has shown excellent assessment performance—up to 98% accuracy—we believe it is essential to evaluate it using large and well-annotated multimodal datasets collected, under different settings and configurations, from different sites prior to using it as a technology in [neonatal intensive care units (NICUs)] or homes,” she says.
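The idea of combining several signals so that pain is not missed when one of them is unavailable can be sketched in a few lines of Python. This is not the team's N-CNN or its actual fusion method; the function, weights, and scores below are assumptions invented for illustration. Each modality contributes a pain score between 0 and 1, and any modality with no usable signal (for example, a face hidden by the infant's position) is simply left out of the weighted average.

```python
def fuse_pain_scores(scores, weights):
    """Weighted average of per-modality pain scores in [0, 1].
    Modalities whose score is None (signal unavailable) are skipped and the
    remaining weights are renormalized."""
    available = {m: s for m, s in scores.items() if s is not None}
    if not available:
        return None  # No usable signal at all.
    total_weight = sum(weights[m] for m in available)
    return sum(weights[m] * s for m, s in available.items()) / total_weight


# Hypothetical weights and scores, invented for illustration.
weights = {"face": 0.5, "voice": 0.3, "vitals": 0.2}
all_signals = fuse_pain_scores({"face": 0.8, "voice": 0.6, "vitals": 0.5}, weights)
face_hidden = fuse_pain_scores({"face": None, "voice": 0.6, "vitals": 0.5}, weights)
```

Renormalizing the weights means the system still produces an estimate from voice and vital signs alone, at the cost of losing the cue that Zamzmi describes as the most important in most cases.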

Elderly or incapacitated patients may also benefit from a similar system. An Australia-based company developed an app called PainChek that uses FRT to alert caregivers if a nonverbal patient is in pain, geared toward those with dementia as well as infants. There are also efforts to use FRT to detect risks in intensive care units, such as when adult patients are under anesthesia and having surgery. Subtle, involuntary facial expressions could indicate whether patients are feeling pain or are even conscious during intense surgeries. Like infant pain detection systems, these could be useful tools in flagging issues to minimize patient harm.

A new kind of therapy

In addition to giving voice to patients who can’t express pain, FRT is also being explored to assist patients with autism spectrum disorder.

Researchers at Stanford University developed a computer vision system to run on Google Glass, a headset with a peripheral display monitor on the side. The device, called Superpower Glass, tracks facial expressions and prompts the user with cues like “frustrated” or “interested.” The device aims to coach children with autism to recognize facial expressions with the goal of improving patients’ navigation of social exchanges and interactive tasks.

In an article published in March 2019 [8], the team—led by senior author Dennis Wall, Ph.D., and first author Catalin Voss, Ph.D.—detailed a randomized clinical trial of 71 children aged 6–12, who were diagnosed with autism spectrum disorder. Patients who used the device at home in addition to standard care showed improvement in socialization versus the control group, who received the standard care of behavioral therapy.

“Kids with autism often struggle with making eye contact, understanding faces and expressing emotions appropriately, so social therapies often focus on these skills,” says Wall, who is an associate professor of Pediatrics, Psychiatry, and Biomedical Data Sciences at Stanford Medical School. “If you can help teach them these skills early it increases their confidence in and enjoyment of positive social exchange.”

The team licensed the technology to Cognoa, a company Wall co-founded that focused on mobile diagnosis. Superpower Glass was granted Breakthrough Device designation by the Food and Drug Administration (FDA) and will be used in a clinical study later this year (Figure 5). Meanwhile, Wall’s lab at Stanford has developed a complementary phone app called Guess What, where kids guess and act out facial expressions (Figure 6). Wall aims to introduce FRT to the app so it can give feedback on whether the right facial expression is identified and mimicked.

“We aim to bring wearable or digital therapy to this much needed pediatric health condition where there are many issues with access,” says Wall. “Patients sometimes wait a year or more for diagnosis and treatment, so technology like this could safeguard against time gaps that can occur with waiting lists and ultimately serve as a complement to—but not necessarily a replacement for—cognitive behavioral therapies.”

Pitfalls and risks

The use of FRT, like that of any technology, raises its share of privacy and ethical concerns, particularly when it comes to health care. While the Health Insurance Portability and Accountability Act (HIPAA) provides a framework for protecting patient privacy, FRT data, like any patient data, can potentially be re-identified even after it has been anonymized.

“The availability of online datasets and technology for re-identification make it difficult to eliminate risk of re-identification of data,” says Nicole Martinez-Martin, Ph.D., an assistant professor of pediatrics at the Stanford Center for Biomedical Ethics (Figure 7), who recently wrote about the ethical implications of FRT in health care [9]. Developers and users of FRT and similar systems, she says, should be educated about the technology and offer a clear consent process so that patients are appropriately informed of limitations of the technology, how data might be used and any risks of that data. A few states have biometric data privacy statutes, she points out, with more likely to follow suit to help bolster protections for patient privacy.

Aside from informed consent and an awareness of the risks to patient privacy, the issue of bias in training FRT is something researchers also need to guard against. “Certainly there has been a concerted effort as AI is utilized in health care to address the potential for bias,” confirms Martinez-Martin. “Efforts include aiming at recruiting diverse participants and collecting more diverse datasets, as well as finding ways to account for existing biases in the machine learning process.”

With ethical and practical considerations taken into account, FRT offers a potentially powerful tool to improve patient experiences. And though still a relatively new technology in the health care space, FRT could enable ways to save heartache, money, and even lives.

References

- B. Jeon et al., “A facial recognition mobile app for patient safety and biometric identification: Design, development, and validation,” JMIR Mhealth Uhealth, vol. 7, no. 4, p. e11472, Apr. 2019. [Online]. Available: https://www.ncbi.nlm.nih.gov/pmc/articles/PMC6475824/

- E. Dolgin, “AI face-scanning app spots signs of rare genetic disorders,” Nature, Jan. 7, 2019. [Online]. Available: https://www.nature.com/articles/d41586-019-00027-x#ref-CR1

- Y. Gurovich et al., “Identifying facial phenotypes of genetic disorders using deep learning,” Nature Med., vol. 25, pp. 60–64, 2019. [Online]. Available: https://www.nature.com/articles/s41591-018-0279-0

- P. Kruszka et al., “22q11.2 deletion syndrome in diverse populations,” Amer. J. Med. Genet., vol. 173, no. 4, pp. 879–888, Mar. 2017.

- P. Kruszka et al., “Turner syndrome in diverse populations,” Amer. J. Med. Genet., vol. 182, no. 2, pp. 303–313, Feb. 2020.

- G. Zamzmi et al., “A comprehensive and context-sensitive neonatal pain assessment using computer vision,” IEEE Trans. Affective Comput., early access, Jul. 10, 2019, doi: 10.1109/TAFFC.2019.2926710.

- G. Zamzmi et al., “Convolutional neural networks for neonatal pain assessment,” IEEE Trans. Biometrics, Behavior, Identity Sci., vol. 1, no. 3, pp. 192–200, Jul. 2019.

- C. Voss et al., “Effect of wearable digital intervention for improving socialization in children with autism spectrum disorder,” JAMA Pediatr., vol. 173, no. 5, pp. 446–454, 2019, doi: 10.1001/jamapediatrics.2019.0285. [Online]. Available: https://jamanetwork.com/journals/jamapediatrics/fullarticle/2728462

- N. Martinez-Martin, “What are important ethical implications of using facial recognition technology in health care?” AMA J. Ethics, vol. 21, no. 2, pp. E180–187, 2019.