In the computing community, the brain is often regarded as the ultimate computer. Brain-inspired machines are believed to be more efficient than machines built on the traditional Von Neumann paradigm, which has been the dominant computing model since the dawn of computing. More recently, there have been many claims about attempts to build brain-inspired machines. But one question needs careful thought before we embark on creating these so-called brain-inspired machines: Inspired by what, exactly? Do we want to build a full replica of the human brain, assuming we had the required technology?

Within the relevant research communities, the human brain and computers affect and interact with each other in three different ways. The first model would be to build a computer that can simulate all the neurons in the brain and their interconnections. If we can create the technology, the resulting machine would be a faithful brain simulator, a tool that neuroscientists could use, for example. The second would be to use implants to increase the capability of the brain. For example, a team from Duke University used a cortical implant to allow rats to sense infrared light [1]. Although this integrates only a sensor with the brain, we can expect more sophisticated integration in the future, a trend that could expand the ways computers and the brain interact to get the best of both worlds. This can be thought of as part of the vast field of human–computer interaction. Finally, the third model would be to study the brain’s characteristics, as far as our knowledge reaches, and decide which of them we want to implement in our machines to build better computers [2].

But before we dig deeper, let us agree on a set of goals one should expect to find in the ultimate computing machine.

The Ultimate Computer

When the first electronic computers were built more than seven decades ago, correctness was the main goal. As more applications were implemented, performance (speed) became a necessity. Power efficiency was added to the list with the widespread use of battery-operated devices (battery life) and the spread of data centers and supercomputers (electricity costs). Transistors got smaller, following Moore’s law with Dennard scaling as the enabling technology, but they also became less reliable (see “Moore’s Law and Dennard Scaling”). So reliability was added to the list, raising the question of how to build reliable machines from unreliable components [3]. Security joined the list because of the interconnected world in which we live.

Moore’s Law and Dennard Scaling

In 1965, Gordon Moore, later one of the two cofounders of Intel, published a short article with a prediction that has turned out to be remarkably accurate. He predicted that the number of transistors on an integrated circuit would keep doubling at a regular interval, now usually quoted as every 18–24 months (the 1965 article projected a doubling every year, which Moore revised to every two years in 1975), and that any company in the semiconductor industry that did not keep pace with what we now call Moore’s law would fall out of the competition, leaving the market or settling for commodity products. More interestingly, any company that tries to outpace Moore’s law will also fail, for economic reasons such as time to market and the high cost of fabrication at the bleeding edge of the technology. But how can semiconductor companies keep pace with Moore’s law? The enabling technology is called Dennard scaling, after Robert Dennard, one of the coauthors of the 1974 article that described it. Dennard scaling says that as transistors get smaller (following Moore’s law), their voltage and current scale down in proportion to their dimensions, so each transistor dissipates less power and the power per unit of chip area stays roughly constant. Unfortunately, Dennard scaling stopped around 2004, when supply voltage could no longer be lowered without excessive leakage current. So we can still pack more transistors per chip, but together they dissipate more and more power per unit area.
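The scaling argument in the Moore’s law and Dennard scaling discussion above can be made concrete with the textbook expression for dynamic switching power, P = αCV²f (activity factor α, switched capacitance C, supply voltage V, clock frequency f). The derivation below is a sketch under idealized constant-field scaling by a hypothetical factor κ > 1 per generation; it ignores leakage power, so it illustrates the trend rather than modeling any real process:

```latex
\begin{aligned}
&P = \alpha C V^2 f, \qquad L \to \tfrac{L}{\kappa},\quad A \to \tfrac{A}{\kappa^2},\quad C \to \tfrac{C}{\kappa},\quad f \to \kappa f \\[4pt]
&\text{Dennard scaling } \left(V \to \tfrac{V}{\kappa}\right):\quad
P' = \alpha \frac{C}{\kappa}\left(\frac{V}{\kappa}\right)^2 (\kappa f) = \frac{P}{\kappa^2},
\qquad \frac{P'}{A'} = \frac{P/\kappa^2}{A/\kappa^2} = \frac{P}{A} \\[4pt]
&\text{After Dennard scaling } (V \text{ fixed}):\quad
P' = \alpha \frac{C}{\kappa} V^2 (\kappa f) = P,
\qquad \frac{P'}{A'} = \frac{P}{A/\kappa^2} = \kappa^2 \frac{P}{A}
\end{aligned}
```

With κ = √2 (transistor area halving each generation, in line with Moore’s law), the first case keeps power density flat, while the second doubles it every generation.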

We now have five items in this wish list of goals: correctness, performance, power efficiency, reliability, and security. The first two are crucial for any computer to be useful. The other three are the result of technological constraints and functional requirements. Consequently, the ultimate computer is one that can fulfill the correctness and performance requirements and adequately address those of power efficiency, reliability, and security.

This raises other important questions: Can the way the brain works inspire us to envision ways to deal with this list? Will understanding how the brain works lead us to conclude that we must move beyond the current Von Neumann architecture to stand any chance of achieving these rather conflicting goals? Can it instead inspire ways to bring the Von Neumann architecture closer to making every item on the wish list possible? Or could it even push us to reconsider this wish list altogether?

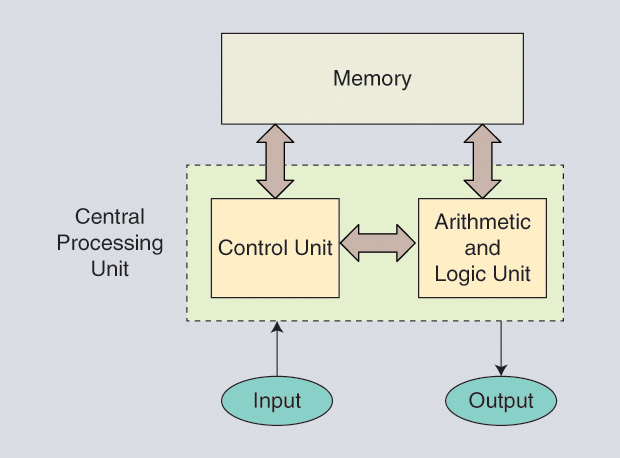

The Von Neumann Model

The vast majority of computers follow the Von Neumann model, in which the central processing unit fetches instructions from memory and executes them on data that may also be brought from memory; instructions and data share the same memory. The Von Neumann model has undergone numerous enhancements over decades of use, but its core architecture remains fundamentally the same.
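To make the fetch-and-execute description concrete, here is a minimal Python sketch of a toy stored-program machine. The four-instruction accumulator instruction set (LOAD, ADD, STORE, HALT) and the function name `run` are hypothetical, chosen only for illustration; the point is that instructions and data live in the same memory and the processor repeatedly fetches, decodes, and executes one instruction at a time.

```python
from typing import List, Tuple, Union

# A memory cell holds either an instruction (opcode, operand address) or a data word.
Cell = Union[Tuple[str, int], int]


def run(memory: List[Cell], pc: int = 0, max_steps: int = 1000) -> List[Cell]:
    """Fetch-decode-execute loop of a toy accumulator machine (hypothetical ISA)."""
    acc = 0  # the CPU's single register
    for _ in range(max_steps):
        instr = memory[pc]  # fetch: instructions come from the same memory as data
        if not isinstance(instr, tuple):
            raise ValueError(f"cell {pc} holds data, not an instruction")
        op, addr = instr  # decode
        pc += 1
        if op == "LOAD":  # execute
            acc = memory[addr]
        elif op == "ADD":
            acc += memory[addr]
        elif op == "STORE":
            memory[addr] = acc
        elif op == "HALT":
            return memory
        else:
            raise ValueError(f"unknown opcode {op!r}")
    raise RuntimeError("step limit reached without HALT")


# Program in cells 0-3, data in cells 4-6: computes memory[6] = memory[4] + memory[5].
program: List[Cell] = [("LOAD", 4), ("ADD", 5), ("STORE", 6), ("HALT", 0), 2, 3, 0]
print(run(program)[6])  # prints 5
```

Every step, including every data access, goes through the single shared memory, which is one way to see why the storage system becomes a bottleneck as processors get faster.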

The brain is not a Von Neumann machine. Nevertheless, it can still inspire us to address several items on the wish list.

- The brain is a massively parallel machine. Within the Von Neumann model, we have already moved to multicore and many-core processors, and the number of cores keeps increasing. However, the degree to which parallelism is exploited in these parallel Von Neumann machines depends on the application type, the expertise of the programmer, the compiler, the operating system, and the hardware resources.

- The brain is decentralized. This is not yet the case in the Von Neumann model or in the design of the computer system as a whole. Decentralization affects both reliability (plasticity in the brain) and performance. Even though a parallel computer system has several cores, they all remain under the control of an operating system running on some of those cores. To be decentralized, a computer needs to be able to detect a failure and then move the task to another part of the system to continue execution. This has been implemented, to some degree, with thread-migration techniques. But can we extend this to the whole computer system (storage, memory, and so forth)?

- Current computers are precise and have a finite memory. The brain has a virtually infinite memory that is approximate. If we find a new memory technology that provides a huge amount of storage relative to current state-of-the-art memory [static random-access memory (RAM), dynamic RAM, phase-change memory, spin-transfer torque RAM, magnetoresistive RAM, and so on] but is not 100% precise, can we design software that makes use of this memory?
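The last question can be explored in simulation. The sketch below assumes a hypothetical memory in which each bit of a word may flip on read with probability `p`, plus a software layer that trades capacity for precision by keeping three copies of each word and taking a bitwise majority vote. The names `ApproximateMemory`, `ReplicatedStore`, and `error_counts` are illustrative, and triple replication with voting is swapped in as one familiar technique, not as the answer to the question:

```python
import random
from typing import Tuple


class ApproximateMemory:
    """Hypothetical imprecise memory: each bit of a word may flip on read with probability p."""

    def __init__(self, size: int, p: float, bits: int = 16, seed: int = 0) -> None:
        self.cells = [0] * size
        self.p = p
        self.bits = bits
        self.rng = random.Random(seed)  # seeded so runs are reproducible

    def write(self, addr: int, value: int) -> None:
        self.cells[addr] = value & ((1 << self.bits) - 1)

    def read(self, addr: int) -> int:
        value = self.cells[addr]
        for b in range(self.bits):
            if self.rng.random() < self.p:
                value ^= 1 << b  # inject a single-bit error
        return value


def majority3(a: int, b: int, c: int) -> int:
    """Bitwise majority vote: each result bit takes the value held by at least two inputs."""
    return (a & b) | (a & c) | (b & c)


class ReplicatedStore:
    """Software layer that stores three copies of each logical word and votes on read."""

    def __init__(self, mem: ApproximateMemory) -> None:
        self.mem = mem

    def write(self, addr: int, value: int) -> None:
        for i in range(3):
            self.mem.write(3 * addr + i, value)

    def read(self, addr: int) -> int:
        return majority3(*(self.mem.read(3 * addr + i) for i in range(3)))


def error_counts(n: int, p: float, seed: int = 0) -> Tuple[int, int]:
    """Count wrong reads over n words, without and with triple replication."""
    raw = ApproximateMemory(n, p, seed=seed)
    rep = ReplicatedStore(ApproximateMemory(3 * n, p, seed=seed + 1))
    raw_errors = rep_errors = 0
    for addr in range(n):
        value = (addr * 40503) & 0xFFFF  # arbitrary 16-bit test pattern
        raw.write(addr, value)
        rep.write(addr, value)
        raw_errors += raw.read(addr) != value
        rep_errors += rep.read(addr) != value
    return raw_errors, rep_errors


print(error_counts(n=10_000, p=0.01))  # (raw errors, errors after voting)
```

Because this layer spends three physical words per logical word, it pays off only if the approximate technology offers more than three times the capacity of a precise one, and it assumes the voting logic itself is precise. Software that can tolerate some errors directly would avoid that cost.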

The Von Neumann model puts a lot of restrictions on how much we can learn from the way the brain works. So how about exploring non-Von Neumann models?

Non-Von Neumann Models

Von Neumann computers are programmed, but brains are taught. The brain accumulates experience. If we remove the restrictions of a Von Neumann model, can we get a more brain-like machine? We need to keep in mind a couple of issues here.

First, we do not yet know exactly how the brain works. We have many pieces of the puzzle figured out, but many others are still missing. The second issue is that we may not need an actual replica of the brain for computers to be useful. Computers were invented to extend our abilities and help us do more, just like all other machines and tools invented by humanity. We don’t need a machine with free will. Or do we? The answer is debatable, and this is assuming we can build such a machine!

But what many would agree on, or at least debate less, is that we need machines that do not require detailed programs. We need machines that can accumulate experience. We need machines that can continue to work in the presence of a hardware failure.
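Continuing to work after a hardware failure, in the spirit of the thread-migration techniques mentioned earlier, can be sketched as checkpoint-and-migrate. In the toy, single-process Python simulation below, the names `Worker`, `Checkpoint`, `HardwareFault`, and `run_with_migration` are hypothetical. A task sums a list one element per step, commits a checkpoint after each step, and, when its worker raises a simulated fault, resumes from the last checkpoint on the next live worker. It assumes the checkpoint lives somewhere that survives the failure, which is exactly the open question about storage and memory:

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


class HardwareFault(Exception):
    """Raised by a simulated worker (core) when it fails."""


@dataclass
class Worker:
    name: str
    fail_after: Optional[int] = None  # hypothetical: fail after this many steps (None = never)
    steps: int = 0
    alive: bool = True

    def step(self) -> None:
        """Perform one unit of work, or fail if this worker's time is up."""
        if self.fail_after is not None and self.steps >= self.fail_after:
            self.alive = False
            raise HardwareFault(self.name)
        self.steps += 1


@dataclass(frozen=True)
class Checkpoint:
    next_index: int = 0
    partial_sum: int = 0


def run_with_migration(data: List[int], workers: List[Worker]) -> Tuple[int, List[str]]:
    """Sum data, migrating from a failed worker to the next live one via the last checkpoint."""
    ckpt = Checkpoint()  # assumed to survive worker failures
    used: List[str] = []
    for worker in workers:
        if not worker.alive:
            continue
        used.append(worker.name)
        try:
            while ckpt.next_index < len(data):
                worker.step()  # may raise HardwareFault before this step's work is committed
                ckpt = Checkpoint(ckpt.next_index + 1, ckpt.partial_sum + data[ckpt.next_index])
            return ckpt.partial_sum, used
        except HardwareFault:
            continue  # failure detected: resume from ckpt on the next live worker
    raise RuntimeError("all workers failed")


cores = [Worker("core0", fail_after=3), Worker("core1", fail_after=4), Worker("core2")]
print(run_with_migration(list(range(10)), cores))  # prints (45, ['core0', 'core1', 'core2'])
```

Here a single loop plays the role of the central controller that the brain does without; a decentralized version would have the workers themselves detect the failure and agree on who takes over.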

The Von Neumann model is perfect for many tasks, and, given the billions of dollars invested in software and hardware for this model, there is no practical chance of moving immediately and fully to a non-Von Neumann model. A good compromise may be a hybrid system (see, for example, [4]), similar to the way digital and analog systems are used together. For instance, a Von Neumann machine executes a task; gathers information about performance, power efficiency, and so forth; and submits that information to a non-Von Neumann machine, which learns from it and reconfigures the Von Neumann machine to execute this piece of software better the next time it runs. This is just one scenario, but this direction holds a lot of potential.
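The hybrid scenario is essentially a feedback loop. The minimal Python sketch below assumes a hypothetical `run_program` callback standing in for the Von Neumann machine (it runs the software under a given configuration and returns a measured cost such as energy) and uses an epsilon-greedy learner, a simple technique swapped in here for the learning side, to pick the configuration for the next run. The configurations and the cost model in the usage lines are made up for illustration:

```python
import random
from typing import Callable, Dict, Hashable, List


class ConfigLearner:
    """Epsilon-greedy learner that picks the configuration with the lowest average measured cost."""

    def __init__(self, configs: List[Hashable], epsilon: float = 0.1, seed: int = 0) -> None:
        self.configs = list(configs)
        self.epsilon = epsilon
        self.rng = random.Random(seed)
        self.total: Dict[Hashable, float] = {c: 0.0 for c in self.configs}
        self.count: Dict[Hashable, int] = {c: 0 for c in self.configs}

    def mean(self, config: Hashable) -> float:
        return self.total[config] / self.count[config]

    def best(self) -> Hashable:
        tried = [c for c in self.configs if self.count[c] > 0]
        return min(tried, key=self.mean)

    def choose(self) -> Hashable:
        for c in self.configs:
            if self.count[c] == 0:
                return c  # try every configuration once first
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.configs)  # occasionally explore
        return self.best()  # otherwise exploit accumulated experience

    def report(self, config: Hashable, cost: float) -> None:
        self.total[config] += cost
        self.count[config] += 1


def hybrid_loop(run_program: Callable[[Hashable], float], learner: ConfigLearner, runs: int) -> Hashable:
    """Each iteration: reconfigure, execute and measure (Von Neumann side), then learn."""
    for _ in range(runs):
        config = learner.choose()
        learner.report(config, run_program(config))
    return learner.best()


# Made-up cost model: "energy" of a run on a given number of cores, with measurement noise.
noise = random.Random(42)


def fake_run(cores: int) -> float:
    return 100 / cores + 4 * cores + noise.gauss(0, 0.5)


print(hybrid_loop(fake_run, ConfigLearner([1, 2, 4, 8]), runs=200))
```

The learner never sees the program’s code, only measurements from past runs, which is the sense in which the combined system accumulates experience rather than being explicitly programmed for each configuration.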

What Did We Learn from Artificial Neural Networks?

Once we mention computers and the brain, one of the first terms that comes to mind is the artificial neural network (ANN). ANNs are considered a very useful tool despite being a greatly simplified model of the brain. They have been used for decades with demonstrable success in a number of areas. However, the brain is far more sophisticated than an ANN. For instance, biological neurons fire at different rates depending on the context, so information is carried not only in the weights but also in the firing rates. This is not implemented in traditional neural networks. There is also some processing in the connections among neurons. Can we make use of that to build better machines?
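The difference between the two kinds of neuron can be sketched in a few lines. The traditional ANN unit below returns a single number computed from weighted inputs. The leaky integrate-and-fire (LIF) neuron, a standard simplified spiking model used here for illustration rather than as a faithful model of biology, integrates its input over time and emits spikes, so the strength of the stimulus is encoded in how often it fires. The parameter values (time constant, threshold, time step) are arbitrary assumptions:

```python
import math
from typing import Sequence


def ann_neuron(weights: Sequence[float], inputs: Sequence[float], bias: float = 0.0) -> float:
    """Traditional ANN unit: a sigmoid of the weighted sum of its inputs."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1.0 / (1.0 + math.exp(-z))


def lif_firing_rate(current: float, duration: float = 1.0, dt: float = 1e-4,
                    tau: float = 0.02, resistance: float = 1.0,
                    v_threshold: float = 1.0, v_reset: float = 0.0) -> float:
    """Spikes per second of a leaky integrate-and-fire neuron driven by a constant current.

    Membrane update (forward Euler): dv/dt = (-v + R*I) / tau; spike and reset at threshold.
    """
    v = v_reset
    spikes = 0
    for _ in range(int(duration / dt)):
        v += dt * (-v + resistance * current) / tau  # leak toward R*I
        if v >= v_threshold:
            spikes += 1
            v = v_reset
    return spikes / duration


print(ann_neuron([0.5, -0.25], [1.0, 2.0]))  # prints 0.5
for current in (0.5, 1.5, 3.0):
    print(current, lif_firing_rate(current))
```

With these parameters, a current for which R·I stays below the threshold produces no spikes at all, and a stronger current produces a higher firing rate, which is the kind of rate information the paragraph above says traditional networks do not carry.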

The Storage Challenge and Other Fundamental Questions

One of the main performance bottlenecks in traditional computers is storage. The whole storage hierarchy suffers from low speed (as we go down the hierarchy from the different levels of caches to disks), high power consumption, and bandwidth problems (once we go off chip). There are several reasons for this poor performance relative to the processor. First, the interconnection between the processor and the storage devices is slow (both the traveling speed of the signals and the bandwidth of the interconnection). Second, in building those storage devices, capacity takes priority over speed. Can we build a new memory system based on how the brain stores information, or at least on how we think the brain stores information? And how far do we have to deviate from the traditional Von Neumann model to achieve that? There are several hypotheses about how the brain stores information (for example, in the strength of the interconnections among neurons).
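One classic computational model of the connection-strength hypothesis is the Hopfield network, in which patterns are stored in the weights between neurons using a Hebbian rule and recalled by content, from a partial or corrupted cue, rather than by address. The sketch below (plain Python, with hypothetical function names `train_hebbian` and `recall`) is offered only as an illustration of that idea, not as a claim about how the brain actually stores information:

```python
from typing import List

Pattern = List[int]  # each entry is +1 or -1


def train_hebbian(patterns: List[Pattern]) -> List[List[float]]:
    """Store patterns in connection strengths: w_ij = (1/N) * sum over patterns of x_i * x_j, w_ii = 0."""
    n = len(patterns[0])
    w = [[0.0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:
                    w[i][j] += p[i] * p[j] / n
    return w


def recall(w: List[List[float]], cue: Pattern, sweeps: int = 10) -> Pattern:
    """Asynchronous updates: each neuron takes the sign of its weighted input until nothing changes."""
    state = list(cue)
    n = len(state)
    for _ in range(sweeps):
        changed = False
        for i in range(n):
            h = sum(w[i][j] * state[j] for j in range(n))
            new = 1 if h >= 0 else -1
            if new != state[i]:
                state[i] = new
                changed = True
        if not changed:
            break
    return state


a = [1, 1, 1, 1, -1, -1, -1, -1]
b = [1, -1, 1, -1, 1, -1, 1, -1]
w = train_hebbian([a, b])
cue = [-1] + a[1:]  # pattern a with its first bit corrupted
print(recall(w, cue) == a)  # prints True
```

Unlike a Von Neumann memory, there is no address: the stored item is retrieved from a noisy version of itself, and every stored pattern is spread across all of the connection weights.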

The question of consciousness is discussed in neuroscience, cognitive science, and philosophy. Now it is time to discuss it in computer design. Consciousness is not the same as being self-aware; it is the awareness of being self-aware. Do we need computers to be conscious? What do we gain from that? Computers are already self-aware, with all of their sensors, cameras, and so on. But what will we gain if we take this one step further and make them aware that they are self-aware? In my opinion, we may not gain much, assuming we could build it at all.

There’s another fundamental question: Do we need machines with emotions? We are talking about several steps beyond affective computing [5]. My answer here differs from my answer to the consciousness question: computers with emotions could be very useful, for example, in helping elderly people.

A third question relates to creativity. If we can build machines that can learn, then we give them a degree of creativity. Human beings are creative both in solving problems and in defining them. Do we want machines to be creative only in solving a problem? Or do we also want machines to be able to identify problems? What if a human and a machine disagree on how to define the same problem? Maybe in such cases we can use humans and machines as a diverse group for brainstorming and problem solving (the wisdom of the crowds).

For Further Discussion

This article presents many more questions than it answers. The goal is to encourage careful thought about exactly which aspects of the brain should inspire the machines we build. Our wish list for an ultimate computer changes over time, based not only on technology but also on the way we use computers. How, then, might we envision the future?

References

- E. E. Thomson, R. Carra, and M. A. L. Nicolelis, “Perceiving invisible light through a somatosensory cortical prosthesis,” Nat. Commun., vol. 4, Feb. 2013.

- S. Navlakha and Z. Bar-Joseph, “Distributed information processing in biological and computational systems,” Commun. ACM, vol. 58, no. 1, pp. 94–102, Dec. 2014.

- S. Borkar, “Designing reliable systems from unreliable components: The challenges of transistor variability and degradation,” IEEE Micro, vol. 25, no. 6, pp. 10–16, Nov. 2005.

- G. Banavar, “Watson and the era of cognitive computing,” in Proc. 20th Int. Conf. Architectural Support for Programming Languages and Operating Systems (ASPLOS’15), Mar. 2015.

- Affective Computing Group, MIT Media Lab. [Online]. Available: http://affect.media.mit.edu/ (accessed 30 Jan. 2016).