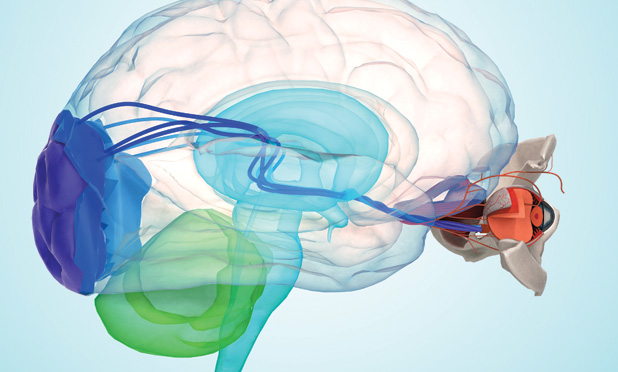

The retina is a sophisticated neural network that provides humans with high-resolution vision. And for those who suffer from retinal disease or deterioration, particularly age-related macular degeneration (the leading cause of blindness among people over the age of 50 in the United States), a better understanding of how to stimulate the retina or completely override its path to the area of the brain that processes vision may offer hope to restore sight.

James Weiland, Ph.D., professor of biomedical engineering at the University of Michigan and an IEEE Fellow, is at the forefront of this research (Figure 1, right). His main goal and primary challenge—and those of the field generally—are to restore a sense of vision using electronic devices called retinal prostheses. Weiland, who focuses on developing retinal and neural prostheses and wearable and implantable technology systems, received his degrees in biomedical engineering and electrical engineering from the University of Michigan, was previously affiliated with the Wilmer Ophthalmological Institute at Johns Hopkins University, and was a professor with the Keck School of Medicine at the University of Southern California. Through his research, he has strived to address the many unique challenges related to introducing restorative neurotechnology into the brain and eye. Although scientists have had long-term success with other types of biological implants, such as pacemakers, the eye presents a unique challenge.

“The retina simultaneously processes multiple colors and light in a parallel fashion,” explains Weiland. “To create natural vision, you’d need to be able to tap separately all of these channels and integrate them, which is difficult to do with an electronic device. Pacemakers have only a few separate channels of input and output; to get high resolution in an electronic retina, you’d need a thousand.”

Creating a high-density device small enough to implant is the challenge. However, he goes on, “you can’t simply take a computer chip and put it in the eye and hope it functions.” For one, the saltwater environment of the eye means that any electronics would need a protective coating to prevent corrosion. But once a device is coated, it’s isolated from the eye and still needs to connect to the organic tissue. It is, according to Weiland, a materials problem: any retinal implant needs a thin yet water-resistant coating that conducts selectively while keeping water and ions away from the electronics.

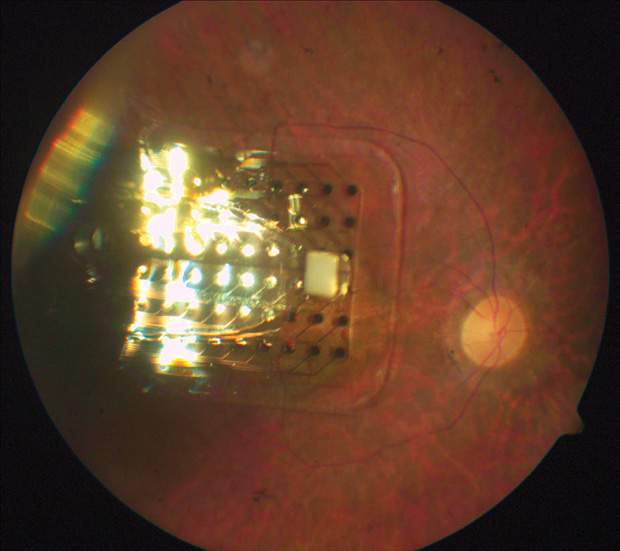

Some companies have started to overcome these problems. Weiland has teamed up with one in particular, Second Sight, which has developed the first Food and Drug Administration (FDA)-approved retinal prosthetic for those with retinal blindness. Weiland collaborated on the preclinical and clinical testing of the prosthesis, the Argus II (Figure 2). The device consists of an external system (a camera that processes image information and passes it on to the user) and an internal system (an array that stimulates remaining cells in the retina to create a signal the user can interpret as a form of vision). In this way, the device can provide a sense of vision to someone who has very limited sight and lets users localize sources and recognize large objects, such as an exit door.

“The processing approach is rudimentary right now,” Weiland says. “We take the information, including number of pixels, and apply that to the retina. We think much more work can be done in that area with computer vision, by combining deep learning and augmented reality to better understand the user’s environment and to highlight the important objects.” Ultimately, if the system could contextualize the information in a scene and provide only that which is most critical (for example, the location of furniture or a face), the function of the device could be improved, he adds. The next challenge, he says, is to miniaturize the device, putting the internal system in the eye but somehow still protecting the electronics.
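The “rudimentary” step Weiland describes, reducing a camera frame to the small number of pixels an electrode array can present, can be sketched as simple block averaging. This is a minimal illustration under stated assumptions, not Second Sight's actual processing: the function names, the 0-to-1 brightness scale, and the idea of a fixed number of stimulation levels are all hypothetical choices made for the example.

```python
from typing import List

# Grayscale frame: rows of brightness values in [0, 1] (an assumed scale).
Image = List[List[float]]


def downsample_to_grid(frame: Image, grid_rows: int, grid_cols: int) -> Image:
    """Average each block of camera pixels down to one value per electrode."""
    height, width = len(frame), len(frame[0])
    grid = []
    for r in range(grid_rows):
        # Pixel rows covered by electrode row r (at least one row).
        top = r * height // grid_rows
        bottom = max((r + 1) * height // grid_rows, top + 1)
        row = []
        for c in range(grid_cols):
            # Pixel columns covered by electrode column c (at least one column).
            left = c * width // grid_cols
            right = max((c + 1) * width // grid_cols, left + 1)
            block = [frame[y][x] for y in range(top, bottom) for x in range(left, right)]
            row.append(sum(block) / len(block))
        grid.append(row)
    return grid


def to_stimulation_levels(grid: Image, levels: int) -> List[List[int]]:
    """Quantize averaged brightness into a small number of discrete levels.

    The number of levels is a hypothetical parameter, not a device specification.
    """
    return [
        [round(min(max(value, 0.0), 1.0) * (levels - 1)) for value in row]
        for row in grid
    ]
```

Calling `downsample_to_grid(frame, 6, 10)` would reduce a full camera frame to 60 values, one per electrode in a 6 × 10 layout (the grid size here is an assumption for illustration). The computer-vision approach Weiland proposes would replace the plain averaging with something that decides which objects in the scene deserve those few pixels.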

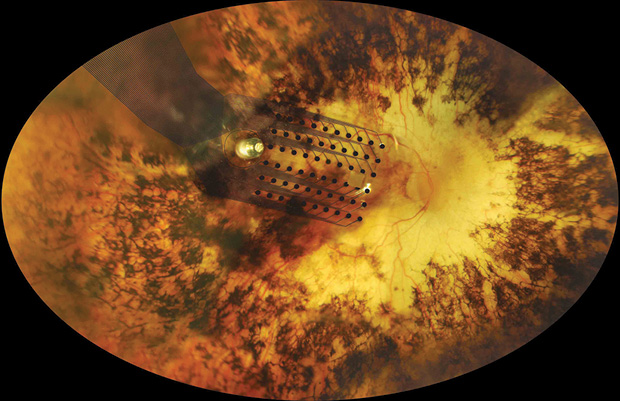

In addition to integrating computer processing techniques, Weiland develops sophisticated animal models to study the retina, and corresponding implants, in more detail (Figures 3 and 4). Specifically, he looks at ways to use microelectrode arrays to stimulate and record from different parts of the retina and ultimately mimic what goes on in a human. He’s finding that some of the responses he measures match the perceptions people report. This is important, because it enables the team to develop accurate animal models that allow them to test different stimulation protocols and translate ideas from the bench to the clinic quickly. “It’s hard to develop new implant technology to test in a person,” Weiland elaborates. “While we have lots of ways to improve the implant in terms of the number of electrodes and size of electrodes, we can’t simply make it and put it into a person without FDA approval. That’s why having a precise animal model is so critical.”

A key part of determining how well retinal devices work is understanding the signaling between the retinal tissue and the brain itself. To do this, Weiland measures retinal responses to electrical stimulation by looking at the visual evoked potential, or visual evoked response: essentially a response of the visual part of the brain evoked by activity in the retina, similar to an electroencephalogram (EEG) signal. “While EEG is a free-running record of the measure of activity in the brain, the evoked response is measuring a reaction to specific stimuli,” he explains. “Essentially, we are stimulating the retina and seeing where in the brain activity results.”
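The distinction Weiland draws between a free-running EEG and an evoked response can be illustrated with stimulus-locked averaging, a common general way to estimate evoked potentials: cut the recording into windows that each start at a stimulus, then average the windows so that activity unrelated to the stimulus tends to cancel. The sketch below is not a description of Weiland's own analysis; the function name, the sample-index representation of stimulus times, and the fixed window length are assumptions made for the example.

```python
from typing import List, Sequence


def evoked_response(
    recording: Sequence[float],
    stimulus_onsets: Sequence[int],
    window: int,
) -> List[float]:
    """Average the samples that follow each stimulus onset.

    recording:        free-running signal, one value per sample
    stimulus_onsets:  sample indices at which a stimulus was delivered
    window:           number of samples to keep after each onset
    """
    # Keep only epochs that fit entirely inside the recording.
    epochs = [
        recording[t:t + window]
        for t in stimulus_onsets
        if t + window <= len(recording)
    ]
    if not epochs:
        raise ValueError("no complete epochs to average")
    # Average across epochs, sample by sample.
    return [sum(samples) / len(epochs) for samples in zip(*epochs)]
```

With two stimuli delivered at samples 0 and 4, `evoked_response([1.0, 1.0, 3.0, 5.0, -1.0, 1.0, 1.0, 7.0], [0, 4], 3)` averages the epochs `[1, 1, 3]` and `[-1, 1, 1]`, leaving the shared stimulus-locked shape `[0.0, 1.0, 2.0]`.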

In another interesting line of research, Weiland is studying how devices like the Argus affect the human brain. Patients with the Argus II undergo functional magnetic resonance imaging, which shows Weiland and his collaborators the active areas of the brain and measures whether there are any anatomical or functional changes that result from long-term use of retinal implants.

The preliminary data, Weiland says, suggest there’s no change in the structure of the brain, because after adulthood the brain changes very little in terms of wiring and connectivity (although he allows the data don’t have as fine a resolution as he’d like). But he expects functional changes as the brain begins to process information from different sources. “Early data suggest that, once you restore even the smallest amount of vision, the part of the brain that normally pays attention to vision, the visual cortex, will also begin to show more activity,” he adds.

Aside from his collaboration with Second Sight, Weiland is also looking at other ways to help people with low vision, such as developing wearable cameras that convey visual information through nonvisual cues, like speech or vibrations. For example, a device could guide users as they walk along a path by vibrating on the left or right to steer them in the correct direction.

While retinal prosthetics create signals in the eye that get transmitted to the brain, in cases of optic nerve disease or brain trauma, a retinal implant will not be an option. But you could, Weiland says, have someone wear a mobile computing system that can process the scene to tell users what’s in front of them and where to cross the street, for example. Such a guidance system could use three-dimensional imaging, cameras, and a global positioning system that taps into online databases. “That’s something someone could intuitively follow, but we need to make sure the camera system is very reliable,” he adds.

Aside from giving people more independence, restoring some sense of vision also provides an important social element for users. “Even if you can’t see with acuity to recognize the details of a face, the ability to look at someone when you’re talking to them enables you to feel connected,” Weiland explains. One Argus II user in particular struck him as an example of how restoring vision can provide social comfort: “She reported that when she goes to her grandkid’s soccer game, she is able to see movement and flashes of light. Knowing one of those points of movement is her grandkid lets her feel more connected to that experience.”

He adds, “Retinal prostheses are but one of a number of promising and exciting approaches to vision restoration. We have shown that small improvements in vision can make a dramatic difference in the quality of life of blind patients. New technology will provide even greater benefit and may someday restore near-natural vision.”