Approximately 2% of Americans have a visual disability (vision that cannot be corrected even with the strongest prescription), and in developing countries, where infectious disease and untreated cataracts are more common, the percentage is often higher. Many different diseases and conditions can cause low vision, including age-related macular degeneration, diabetic retinopathy, and cone dystrophy (an inherited disorder affecting the cone cells of the retina).

People with low vision find everyday activities more challenging. They may not be able to decipher small type, especially text on busy or colored backgrounds; see a plastic toy or other trip hazard left lying on the sidewalk; distinguish faces from more than a few feet away; or read street signs or the route number on a bus to help them get around town.

Increasingly, however, technologies and devices are reaching the market that help visually impaired individuals do all the little things that fully sighted people take for granted, according to experts at the University of Michigan (UM) Kellogg Eye Center. The center includes 100 clinical and research faculty, and in 2015 alone it handled more than 163,000 patient visits and performed nearly 7,700 surgical procedures.

Electronic Magnifiers to Video Apps

Some of the most commonly used new devices are electronic magnifiers. “We aren’t getting rid of the old handheld magnifiers that patients have used for decades, but we are adding new alternatives,” says Kellogg optometrist Donna Wicker (Figure 1). In addition to options for enlarging e-book type on an iPad or Kindle (or boosting the type size even more on a large computer monitor), some patients now benefit from advances in video magnifiers, often called closed-circuit televisions, or CCTVs. A common setup includes a desktop monitor equipped with a downward-facing video camera. The user places the reading material under the camera, which relays the image to the monitor, where the user can adjust the magnification, contrast, and polarity. The contrast control lets users lighten a colored background, for instance, so that the type stands out and becomes legible, while the reverse-polarity control swaps black letters on a white background for white letters on black for easier reading.
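The article does not say how any particular CCTV implements these controls. As a rough illustration only, the Python sketch below assumes an 8-bit grayscale camera frame stored as nested lists (0 is black, 255 is white) and applies three textbook image operations: nearest-neighbor magnification, a linear contrast stretch, and polarity inversion. The function names and sample pixel values are hypothetical.

```python
from typing import List

Frame = List[List[int]]  # rows of 8-bit grayscale values (0 = black, 255 = white)


def magnify(frame: Frame, factor: int) -> Frame:
    """Nearest-neighbor enlargement: each pixel becomes a factor x factor block."""
    if factor < 1:
        raise ValueError("factor must be >= 1")
    out: Frame = []
    for row in frame:
        wide = [p for p in row for _ in range(factor)]  # repeat each pixel horizontally
        for _ in range(factor):
            out.append(list(wide))  # repeat each widened row vertically
    return out


def stretch_contrast(frame: Frame, low: int, high: int) -> Frame:
    """Map values in [low, high] linearly onto [0, 255], clipping values outside.

    A tinted background near `high` is pushed to pure white, and
    dark type near `low` is pushed to pure black.
    """
    if not 0 <= low < high <= 255:
        raise ValueError("require 0 <= low < high <= 255")
    scale = 255 / (high - low)
    return [[min(255, max(0, round((p - low) * scale))) for p in row] for row in frame]


def reverse_polarity(frame: Frame) -> Frame:
    """Swap dark-on-light for light-on-dark by inverting each 8-bit value."""
    return [[255 - p for p in row] for row in frame]


if __name__ == "__main__":
    # Dark type (value 40) on a light colored background (value 200).
    page = [[200, 40], [40, 200]]
    enhanced = stretch_contrast(page, low=40, high=200)
    print(enhanced)                    # [[255, 0], [0, 255]]
    print(reverse_polarity(enhanced))  # [[0, 255], [255, 0]]
    print(len(magnify(page, 3)))       # 6
```

A linear stretch is only one way to lift a tinted background; a commercial magnifier may use thresholding or other methods, so this shows the idea rather than any product's design.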

“Some of the newer CCTVs even have the option of text to speech, so you can press a button and within a few seconds they can read the type to you,” Wicker adds. Handheld video magnification is now also available through cell phone apps that both magnify and illuminate, helping users read a menu or verify the change received from a cashier, for example.

Besides magnifying apps, other smartphone apps are constantly rolling out, adds Ashley Howson, low-vision and visual rehabilitation occupational therapist at the Kellogg Eye Center (Figure 2, right). “There are a lot of choices, and we try to keep an updated list on the Kellogg Eye Center website [1]. One of the more interesting—and one that has gotten good feedback—is called ‘Be My Eyes,’ which is an app that connects visually impaired people via live video chat to sighted volunteers [2],” she says. “The person can ask questions using a video feed, such as ‘Am I at the right address?’ or ‘What is the expiration date on this carton of milk?’ A sighted volunteer looks at the photo and answers. That’s a nice app and a great idea.” As of March 2016, the Be My Eyes website listed nearly 300,000 sighted volunteers and more than 25,000 users.

Another of Howson’s favorites is the Ariadne GPS app [3], which can report on and monitor the user’s position and provide an alert via a vibration, sound, or voice when the user approaches a preset location, such as a bus station or a friend’s house. An additional map function also announces street names and numbers as the user moves his or her finger around the display, so the user can create a mental image of the surroundings. “It’s really nice if you’re on a bus or train, where it’s hard to tell what stop you’re at,” notes Howson.
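The article does not describe how Ariadne GPS works internally. The sketch below only illustrates the general idea of a proximity alert, assuming the phone supplies latitude/longitude fixes and that an alert should fire once when the user first comes within a chosen radius of a saved place. The haversine distance formula, the 100 m default radius, the class and method names, and the sample coordinates are all assumptions for illustration.

```python
import math
from dataclasses import dataclass, field
from typing import List, Set

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius in meters


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in meters between two latitude/longitude points (degrees)."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


@dataclass
class Place:
    name: str
    lat: float
    lon: float
    radius_m: float = 100.0  # hypothetical default alert radius


@dataclass
class ProximityMonitor:
    """Hypothetical monitor that announces each saved place once per approach."""

    places: List[Place]
    inside: Set[str] = field(default_factory=set)

    def update(self, lat: float, lon: float) -> List[str]:
        """Process one position fix and return any new alert messages."""
        alerts: List[str] = []
        for place in self.places:
            near = haversine_m(lat, lon, place.lat, place.lon) <= place.radius_m
            if near and place.name not in self.inside:
                self.inside.add(place.name)
                # A real app would route this to a vibration, a sound, or speech.
                alerts.append(f"Approaching {place.name}")
            elif not near:
                self.inside.discard(place.name)  # re-arm once the user moves away
        return alerts


if __name__ == "__main__":
    monitor = ProximityMonitor([Place("bus station", 42.2808, -83.7430)])  # made-up coordinates
    print(monitor.update(42.2900, -83.7430))  # [] (roughly 1 km away)
    print(monitor.update(42.2810, -83.7430))  # ['Approaching bus station']
    print(monitor.update(42.2809, -83.7430))  # [] (already announced)
```

Remembering which places the user is already near keeps the alert from repeating on every GPS fix; real GPS noise near the boundary would likely call for some hysteresis, which this sketch omits.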

Other Innovations

Howson and Wicker highlight a few other recent innovations.

OrCam MyEye

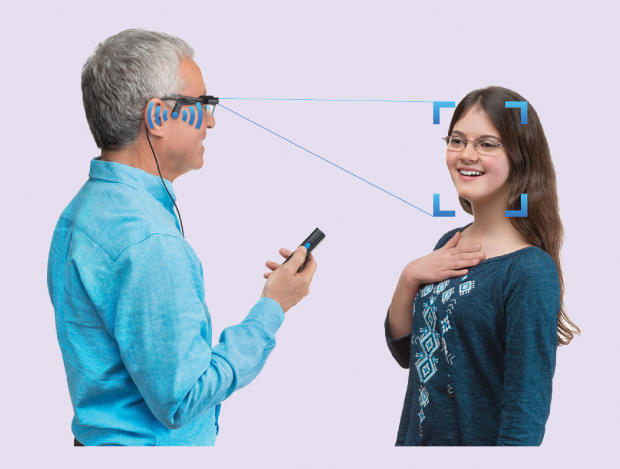

The OrCam MyEye assistive technology device [4] mounts on the frame of a pair of eyeglasses and connects to a pocket-size computer (Figure 3). The user aims the device at something to be read, such as a street sign, newspaper, or book, and the device captures the text with its camera and reads it aloud. If the user first stores images of supermarket products or people’s faces, the device can also announce the identity of the product or person (Figure 4).

eSight

eSight [5] is a wearable headset that captures the user’s field of view, processes the image, and displays it almost instantly on LED screens in front of the user’s eyes (Figure 5). “I know people who are wearing the headset all the time, because the glasses are able to tilt up and down. That means they can tilt the glasses up and out of the way when they want to use their own peripheral vision, and then bring the glasses back down to read addresses or street signs or to identify faces,” Wicker says, noting that the Kellogg Eye Center will soon begin clinical trials of eSight.

Argus II Retinal Prosthesis System

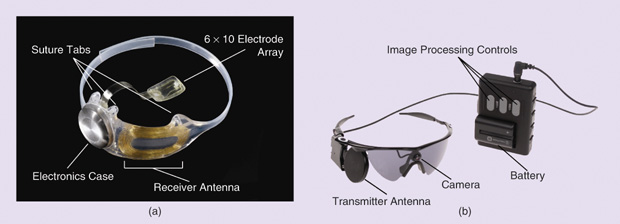

The Argus II Retinal Prosthesis System (Figure 6) is a surgically implanted retinal prosthesis made by Second Sight, of Sylmar, California [6]. Designed for individuals with severe to profound retinitis pigmentosa, the system processes images from a glasses-mounted camera and sends the information wirelessly to the implant, which relays it to retinal cells; from there, the signal ultimately passes to the brain. “The implant doesn’t provide vision like a sighted person has, so it won’t replace a cane or a guide dog,” explains Wicker. “With rehabilitation, however, patients can do things like making out different shapes and objects so that they can avoid obstacles while they’re walking.”

Both Howson and Wicker are also enthusiastic about a smart cane that UM engineering students are developing. “It is an attachment for a mobility cane that alerts the user about obstacles such as ice, a low-hanging branch on a tree, a curb, or stairs,” Howson explains. “I’m excited to see that technology progress, because unseen obstacles are a really common concern we hear about at our monthly Living with Low Vision support group.”

An Ongoing Effort

The smart cane is part of a larger program that promotes projects for the low-vision population, according to program coordinator Lauro Ojeda (Figure 7), an assistant research scientist in the UM Department of Mechanical Engineering. “The idea for the smart cane is to equip it with a three-dimensional camera that can provide range feedback from different angles and signal to the user in specific ways, depending on where it identifies an obstacle,” he explains. One issue the team has yet to solve is how best to communicate with patients. “These independent 3-D range sensors can take single shots 20–30 times per second and provide that information in a digital format, but how do you convey that information to the patient through vibration, sound, or electronic stimulus so patients can understand it?” he asks. “That’s where we’re focusing a lot of attention now.”
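Ojeda describes the feedback design as an open question, so the following is not the UM team’s approach. It is one hypothetical mapping, assuming the 3-D camera delivers a depth frame in meters: split the frame into left, center, and right strips, find the nearest obstacle in each, and turn that distance into a vibration intensity between 0 and 1, with closer obstacles producing stronger vibration. The 0.5 m and 2.5 m thresholds, the three-sector layout, and the function names are illustrative assumptions.

```python
from typing import List, Optional

# Depth frame: rows of distances in meters; None means the sensor got no reading.
DepthFrame = List[List[Optional[float]]]


def nearest_by_sector(depth: DepthFrame, sectors: int = 3) -> List[Optional[float]]:
    """Split the frame into vertical strips (left to right) and keep the closest reading in each."""
    nearest: List[Optional[float]] = [None] * sectors
    if not depth or not depth[0]:
        return nearest
    width = len(depth[0])
    for row in depth:
        for x, d in enumerate(row):
            if d is None or d <= 0:
                continue  # skip missing or invalid readings
            s = min(x * sectors // width, sectors - 1)
            current = nearest[s]
            if current is None or d < current:
                nearest[s] = d
    return nearest


def vibration_levels(
    nearest: List[Optional[float]],
    min_range_m: float = 0.5,  # hypothetical: full-strength vibration at or inside this distance
    max_range_m: float = 2.5,  # hypothetical: no vibration at or beyond this distance
) -> List[float]:
    """Map each sector's nearest distance to an intensity in [0, 1]; closer means stronger."""
    levels: List[float] = []
    for d in nearest:
        if d is None or d >= max_range_m:
            levels.append(0.0)
        elif d <= min_range_m:
            levels.append(1.0)
        else:
            levels.append((max_range_m - d) / (max_range_m - min_range_m))
    return levels


if __name__ == "__main__":
    frame = [
        [3.0, None, 1.5, 2.6, None, 0.2],
        [4.0, 3.5, 1.8, 2.0, 2.8, 0.4],
    ]
    nearest = nearest_by_sector(frame)
    print(nearest)                    # [3.0, 1.5, 0.2]
    print(vibration_levels(nearest))  # [0.0, 0.5, 1.0]
```

At 20–30 frames per second, a function like this would run on every frame, and the three intensities could drive, say, three vibration motors on the cane handle. Whether users can reliably interpret such signals is exactly the question the team is studying.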

Currently, the program includes three teams, each with six or seven students from different engineering disciplines and from other fields, such as computer science. Each team takes on a separate project. “With all of these projects, we hope to make something beneficial for the patients and also give the students a real-life problem to solve,” Ojeda says.

Visual disability is a field ripe for innovation, in part because it doesn’t draw the kinds of research funding that more common health issues do. In addition, patients often have different needs depending on the specific cause of their vision problem. “The problem may not be huge when compared to something like cancer or diabetes,” Ojeda remarks, “but we still have 1–1.5 million Americans who are legally blind [with vision that cannot be corrected to better than 20/200]. This could be one of my kids. This could be my parent. Somebody has to work on low-vision technology not from the economic point of view but from the social point of view, and that’s what we’re doing.”

References

[1] University of Michigan Kellogg Eye Center. iPad and iPhone Apps for Low Vision. [Online].

[2] Be My Eyes. [Online].

[3] Ariadne GPS. [Online].

[4] OrCam. [Online].